Gengshan Yang

@gengshanY

Followers

495

Following

143

Media

11

Statuses

49

3D computer vision, inverse rendering, physics, control, behavior

Joined August 2014

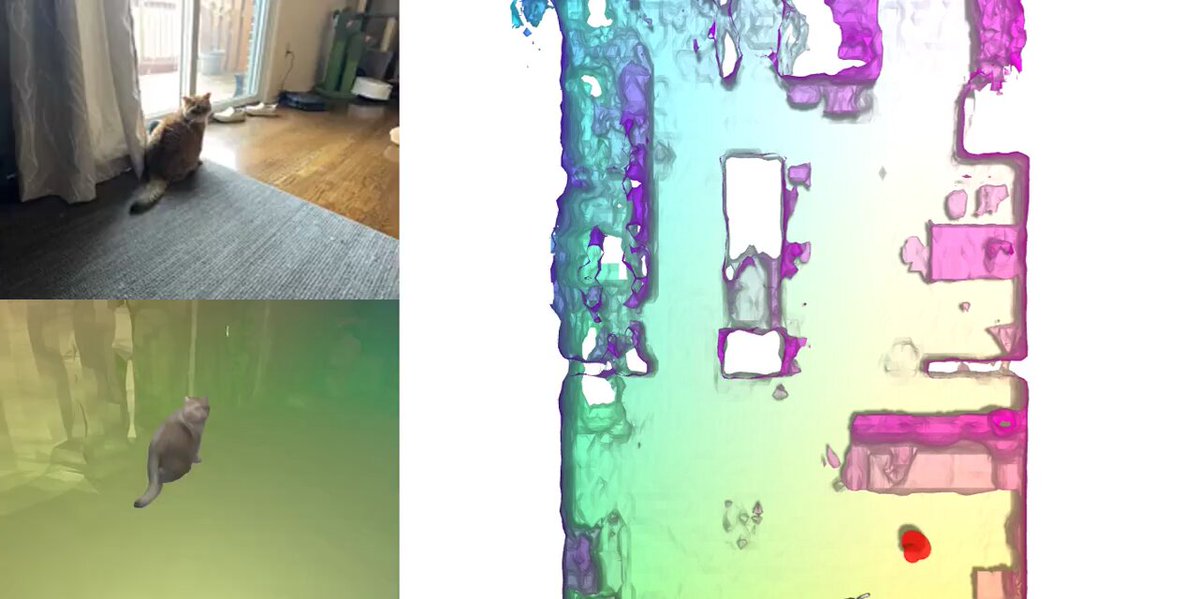

Sharing my recent project, agent-to-sim: From monocular videos taken over a long time horizon (e.g., 1 month), we learn an interactive behavior model of an agent (e.g., a 🐱) grounded in 3D. https://t.co/Q1aqledjys

8

57

376

A picture now is worth more than a thousand words in genAI; it can be turned into a full 3D world! And you can stroll in this garden endlessly long, it will still be there.

148

326

3K

Turn old photos into videos and see friends and family come to life. Try Grok Imagine, free for a limited time.

732

1K

5K

spark v0.1.4 is out! featuring a few new upgrades and open-sourced examples, like depth-of-field effects... 1/n

6

17

141

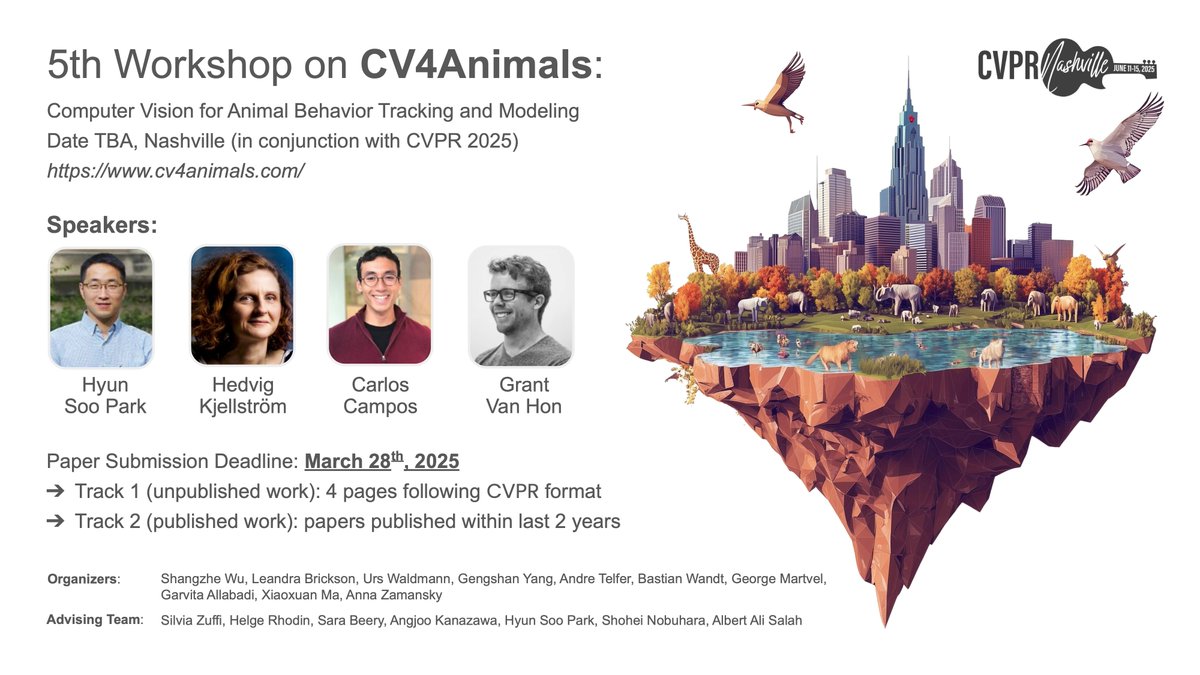

Another fantastic #CV4Animals workshop in the books! Huge thanks to our incredible speakers, @Grant_Van_Horn, Tilo, Hedvig, Carlos, Vivek, and the amazing organizing team! Hope to see everyone again next year!

0

10

40

During the development of Spark, we built a demo to showcase the potential of using full scale AI generated 3D worlds as immersive VR environments.

5

37

148

Join us for the 4D Vision Workshop @CVPR on June 11 starting at 9:20am! We'll have an incredible lineup of speakers discussing the frontier of 3D computer vision techniques for dynamic world modeling across spatial AI, robotics, astrophysics, and more. https://t.co/PxAj0kwtrJ

0

20

97

Open Sourcing Forge: 3D Gaussian splat rendering for web developers! 3DGS has become a dominant paradigm for differentiable rendering, combining high visual quality and real-time rendering. However, support for splatting on the web still lags behind its adoption in AI.

12

58

245

For years, I’ve been tuning parameters for robot designs and controllers on specific tasks. Now we can automate this on dataset-scale. Introducing Co-Design of Soft Gripper with Neural Physics - a soft gripper trained in simulation to deform while handling load.

7

37

130

Submissions are due in 2 weeks on March 28! Both 4-page and 8-page submissions are welcome. Check out the website for more detail: https://t.co/PxAj0kvVCb See you all at @CVPR!

Really excited to put together this @CVPR workshop on "4D Vision: Modeling the Dynamic World" -- one of the most fascinating areas in computer vision today! We've invited incredible researchers who are leading fantastic work at various related fields. https://t.co/PxAj0kvVCb

0

4

17

In case you didn't notice, we're offering a number of FREE conference and workshop registrations to the outstanding submissions :) Submit your work by March 28!

cv4animals.com

About

Excited to bring the 5th CV4Animals Workshop to #CVPR2025 @CVPR We welcome submissions in 2 tracks: 1) unpublished work up to 4 pages 2) papers published within last 2 years Submit by Mar 28 & join us with amazing talks in Nashville: https://t.co/nyLEXmn5pV 🦒🪼🐬🐿️🦩🐢🦘🦜🦥🦋

0

3

15

Really excited to put together this @CVPR workshop on "4D Vision: Modeling the Dynamic World" -- one of the most fascinating areas in computer vision today! We've invited incredible researchers who are leading fantastic work at various related fields. https://t.co/PxAj0kvVCb

2

20

103

Excited to bring the 5th CV4Animals Workshop to #CVPR2025 @CVPR We welcome submissions in 2 tracks: 1) unpublished work up to 4 pages 2) papers published within last 2 years Submit by Mar 28 & join us with amazing talks in Nashville: https://t.co/nyLEXmn5pV 🦒🪼🐬🐿️🦩🐢🦘🦜🦥🦋

2

19

89

3D content creation with touch! We exploit tactile sensing to enhance geometric details for text- and image-to-3D generation. Check out our #NeurIPS2024 work on Tactile DreamFusion: Exploiting Tactile Sensing for 3D Generation: https://t.co/QyW1TkdvSo 1/3

2

22

76

We’ve been busy building an AI system to generate 3D worlds from a single image. Check out some early results on our site, where you can interact with our scenes directly in the browser! https://t.co/ASD6ZHMwxI 1/n

198

690

3K

I'm building a new research lab @Cambridge_Eng focusing on 4D computer vision and generative models. Interested in joining us as a PhD student? Apply to the Engineering program by Dec 3 🗓️ https://t.co/SDJEz2XiZp ChatGPT's "portrait of my current life"👇 https://t.co/qcnSgqYMWr

4

43

216

Can we enable free-viewpoint renderings of scenes under various lighting conditions? Yes, we can! And just from SINGLE videos. Here are 🌃 night simulations from input videos with hard shadows.

2

26

178

See webpage ( https://t.co/MNXUaEooy7) for other examples and results on more categories (🧑🏻💻🐰🐶). Finally, shout out to my amazing collaborators!! @andrea_bajcsy @psyth91 @akanazawa

1

0

8

- From the dynamic 3D reconstruction, we query pairs of "perception" and motion data of the agent to train a behavior generator (a diffusion model). It generates goals, paths, and body poses sequentially.

1

0

8

- Fine: Run inverse rendering to refine the cameras and produce dynamic 3D structures. Left: surfaces from NeRF Right: gaussian splatting refinement

1

0

5

Technical takeaways - Learning behavior needs 3D tracking and space registration, which is hard over long time horizon & at scale (~20k frames), so we solve it coarse-to-fine. - Coarse: Train camera pose estimators on "personalized" data to provide rough guesses. The

1

0

6