Ferdinand Schlatt

@fschlatt1

Followers

148

Following

124

Media

8

Statuses

78

PhD Student, efficient and effective neural IR models 🧠🔎

Joined October 2017

RT @webis_de: Honored to win the ICTIR Best Paper Honorable Mention Award for "Axioms for Retrieval-Augmented Generation"! Our new axioms a…

0

4

0

RT @ReNeuIRWorkshop: The fourth ReNeuIR Workshop @ #SIGIR2025 is about to start. Join us! Program of the day is here: .

reneuir.org

Workshop on Reaching Efficiency in Neural Information Retrieval

0

2

0

RT @webis_de: Happy to share that our paper "The Viability of Crowdsourcing for RAG Evaluation" received the Best Paper Honourable Mention…

0

6

0

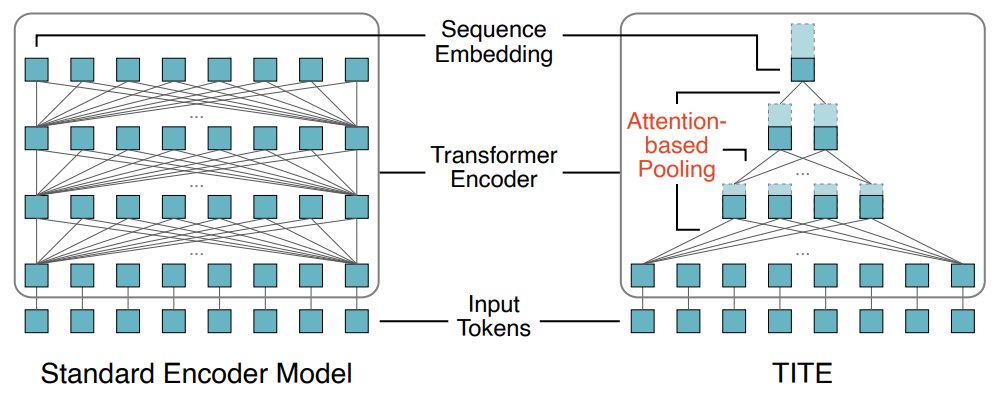

Want to know how to make bi-encoders more than 3x faster with a new backbone encoder model? Check out our talk on the Token-Independent Text Encoder (TITE) at #SIGIR2025 in the efficiency track. It pools vectors within the model to improve efficiency.

0

11

61

RT @MrParryParry: Really like this work, if you haven't read it yet, have a look: PSA from Ian Soboroff! move to K…

0

1

0

RT @ir_glasgow: Now it’s @MrParryParry presenting the reproducibility efforts of a large team of researchers in relation to the shelf life…

0

5

0

Thank you @cadurosar for the shout-out of Lightning IR in the LSR tutorial at #SIGIR2025. If you want to fine-tune your own LSR models, check out our framework at

0

2

12

@maik_froebe @hscells @ShengyaoZhuang @bevan_koopman @guidozuc @bennostein @martinpotthast @matthias_hagen Short: "Rank-DistiLLM: Closing the Effectiveness Gap Between Cross-Encoders and LLMs for Passage Re-ranking". Full: "Set-Encoder: Permutation-Invariant Inter-Passage Attention for Listwise Passage Re-Ranking with Cross-Encoders".

0

7

29

What an honor to receive both the best short paper award and the best paper honourable mention award at #ECIR2025. Thank you to all the co-authors @maik_froebe @hscells @ShengyaoZhuang @bevan_koopman @guidozuc @bennostein @martinpotthast @matthias_hagen 🥳.

4

6

43

RT @antonio_mallia: It was a really pleasant surprise to learn that our paper “Efficient Constant-Space Multi-Vector Retrieval” aka ConstBE…

0

8

0

Next up at #ECIR2025, @maik_froebe is presenting his fantastic work on corpus subsampling and how to evaluate retrieval systems more efficiently.

0

4

24

RT @MrParryParry: Now accepted at #SIGIR2025! looking forward to discussing evaluation with LLMs at #ECIR2025 this week and of course in Pa…

0

7

0

RT @tomaarsen: I've just ported the excellent monoELECTRA-{base, large} reranker models from @fschlatt1 & the research network Webis Group…

0

18

0

RT @MrParryParry: 🚨 New Pre-Print! 🚨 Reviewer 2 has once again asked for DL’19, what can you say in rebuttal? We have re-annotated DL’19 i…

0

11

0

Happy to share that our framework for fine-tuning and running neural ranking models, Lightning IR, was accepted as a demo at #WSDM25 🥳. Pre-print: . Code: Docs: A quick rundown of Lightning IR's main features:

github.com

One-stop shop for running and fine-tuning transformer-based language models for retrieval - webis-de/lightning-ir

2

7

47