Francesca Lucchetti

@fran_lucc

Followers: 71
Following: 15
Media: 2
Statuses: 24

CS PhD Student at Northeastern University

Massachusetts, USA

Joined October 2022

RT @ArjunGuha: We present a new benchmark for reasoning models that reveals capability gaps and failure modes that are not evident in exist….

RT @jadenfk23: 🚀 New NNsight features launching today! If you’re conducting research on LLM internals, NNsight 0.3 is now available. This u….

RT @jadenfk23: Frontier LLMs have capabilities that smaller AIs don't, but up to now there's been no way to crack them open. Now that #Lla….

ndif.us

NDIF is a research computing project that enables researchers and students to crack open the mysteries inside large-scale AI systems.

RT @ellev3n11: Llama-3.1 trains on synthetic translations of Python to low-resource languages (e.g., PHP) to improve performance on MultiPL….

RT @_akhaliq: NNsight and NDIF. Democratizing Access to Foundation Model Internals. The enormous scale of state-of-the-art foundation model….

0

25

0

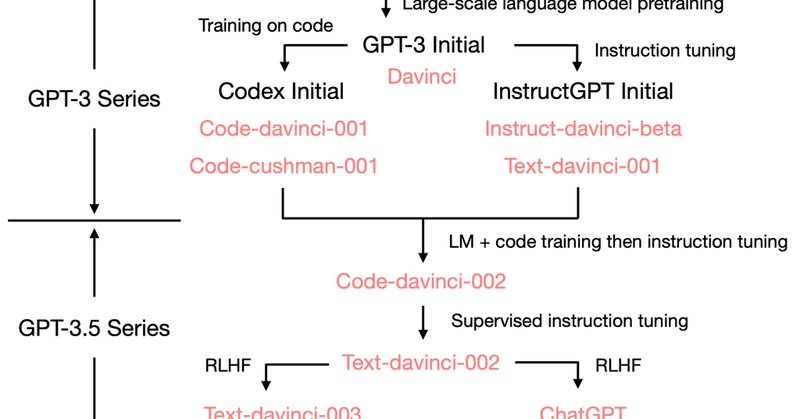

RT @Francis_YAO_: How did the initial #GPT3 evolve to today's #ChatGPT ? Where do the amazing abilities of #GPT3.5 come from? What is enabl….

yaofu.notion.site

Yao Fu | Website | Blog

RT @DeepMind: Introducing a generalist neural algorithmic learner, capable of carrying out 30 different reasoning tasks, with a 𝘴𝘪𝘯𝘨𝘭𝘦 grap….

RT @sewon__min: Most if not all language models use a softmax that gives a categorical probability distribution over a finite vocab. We int….

RT @linguistMasoud: Ok I think it is time to share my "foundations of linguistics" syllabus with you here. It took me a long time to work o….

RT @MetaAI: 4️⃣ Papers we presented at #NeurIPS2022 that you should know about (and how you can learn more even if you’re not at the confer….

RT @schwabpa: You couldn't make it to #NeurIPS2022 this year?. Nothing to worry - I curated a summary for you below focussing on key papers….
