Federico Cassano

@ellev3n11

Followers: 2K · Following: 579 · Media: 18 · Statuses: 395

big model trainer @cursor_ai. licensed fisherman @californiadfw. gpu connoisseur. emergent opera singer.

San Francisco - Milan

Joined September 2020

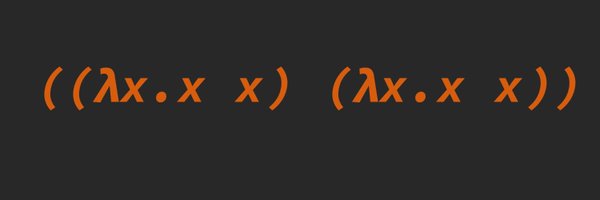

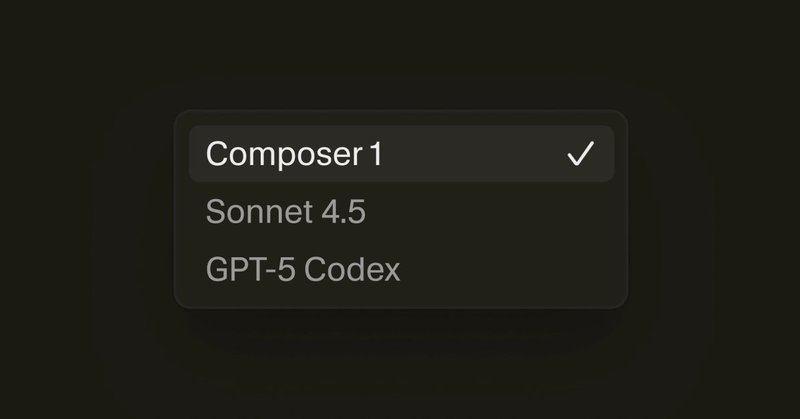

so excited to share Composer with the world! Composer is Cursor's own agentic coding model. it plans, edits, and builds software alongside you with precision, keeping you in flow with incredible speed. i started this project on the side while working on a bug-finder prototype

32

11

466

the thing i dislike about all the cli coding agents out today is that they all break the https://t.co/wNUYOG1Q8n philosophy. cli tools should be minimal, snappy, and hackable.

4

0

31

next ship: orchestra

1

0

22

2026/2027 will be the age of fault tolerance, health checks, and CP+DP->EP

Remember how we were stuck with 80GB HBM for a really long time? This pattern is breaking - copious GPU memory will be the new norm in high end gpus in 26/27

- Nvidia Ultra Rubin: 1024GB HBM
- Qualcomm MI200: 768GB LPDDR
- AMD MI400x: 432GB HBM

So ML performance

3

2

44

More on our blog post:

cursor.com

Built to make you extraordinarily productive, Cursor is the best way to code with AI.

0

0

45

i have not updated my arch laptop in months; should i do it chat?

1

0

3

The world expert in SDC issues for LLM training is, of course, AWS:

arxiv.org

As the scale of training large language models (LLMs) increases, one emergent failure is silent data corruption (SDC), where hardware produces incorrect computations without explicit failure...

0

1

7

incredibly grateful. thank you to everyone that helped me get here. especially to @cursor_ai for truly making it happen and to @ArjunGuha for being such a great inspiration.

19

3

213

checked out the puppies at @VoltagePark IRL. H100s are pretty blocky, unlike gaming graphics cards

2

3

55

MoE layers can be really slow. When training our coding models @cursor_ai, they ate up 27–53% of training time. So we completely rebuilt it at the kernel level and transitioned to MXFP8. The result: 3.5x faster MoE layer and 1.5x end-to-end training speedup. We believe our

29

105

884
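A quick sanity check on the MoE numbers in the post above, as a minimal sketch rather than anything from the post itself. It assumes the end-to-end gain comes only from speeding up the MoE layers (plain Amdahl's law) and that MoE time is a fixed fraction of a training step; the function names are hypothetical. Under those assumptions, a 3.5x MoE speedup at a 27–53% time share gives roughly 1.24x to 1.61x end to end, and a 1.5x overall speedup corresponds to a share of about 47%, inside the quoted range.

```python
def end_to_end_speedup(moe_fraction: float, moe_speedup: float) -> float:
    """Amdahl's law: overall speedup when only one component gets faster.

    moe_fraction: share of total step time spent in MoE layers (0..1).
    moe_speedup:  how many times faster the MoE layers become.
    """
    if not 0.0 <= moe_fraction <= 1.0:
        raise ValueError("moe_fraction must be between 0 and 1")
    if moe_speedup <= 0.0:
        raise ValueError("moe_speedup must be positive")
    # Time left over = untouched part + accelerated part.
    remaining_time = (1.0 - moe_fraction) + moe_fraction / moe_speedup
    return 1.0 / remaining_time


def fraction_for_target(target_speedup: float, moe_speedup: float) -> float:
    """Invert Amdahl's law: the time share needed to reach target_speedup."""
    return (1.0 - 1.0 / target_speedup) / (1.0 - 1.0 / moe_speedup)


if __name__ == "__main__":
    # Figures quoted in the post: 27-53% of training time, 3.5x faster MoE layer.
    for share in (0.27, 0.53):
        print(f"MoE share {share:.0%}: {end_to_end_speedup(share, 3.5):.2f}x end to end")
    print(f"Share implied by 1.5x overall: {fraction_for_target(1.5, 3.5):.1%}")
```

The post may also be counting gains outside the MoE layers, so treat this as a consistency check on the quoted figures, not a reconstruction of how they were measured.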

GPT-5 is now available in Cursor. It’s the most intelligent coding model our team has tested. We're launching it for free for the time being. Enjoy!

247

535

6K

Cursor is now in your terminal! It’s an early beta. Access all models. Move easily between your CLI and editor.

304

546

7K