brandon wang

@fluorane

Followers: 750

Following: 6K

Media: 14

Statuses: 211

@cartesia_ai | prev undergrad @miteecs and @mitbiology, @janestreetgroup @broadinstitute @novid

san francisco

Joined April 2021

happy to announce that we've gotten rid of tokenizers! especially excited with what we've replaced them with: end-to-end trainable modules that not only learn to group characters into (sub)words, but can iterate to group words into phrases and further higher-order concepts. see.

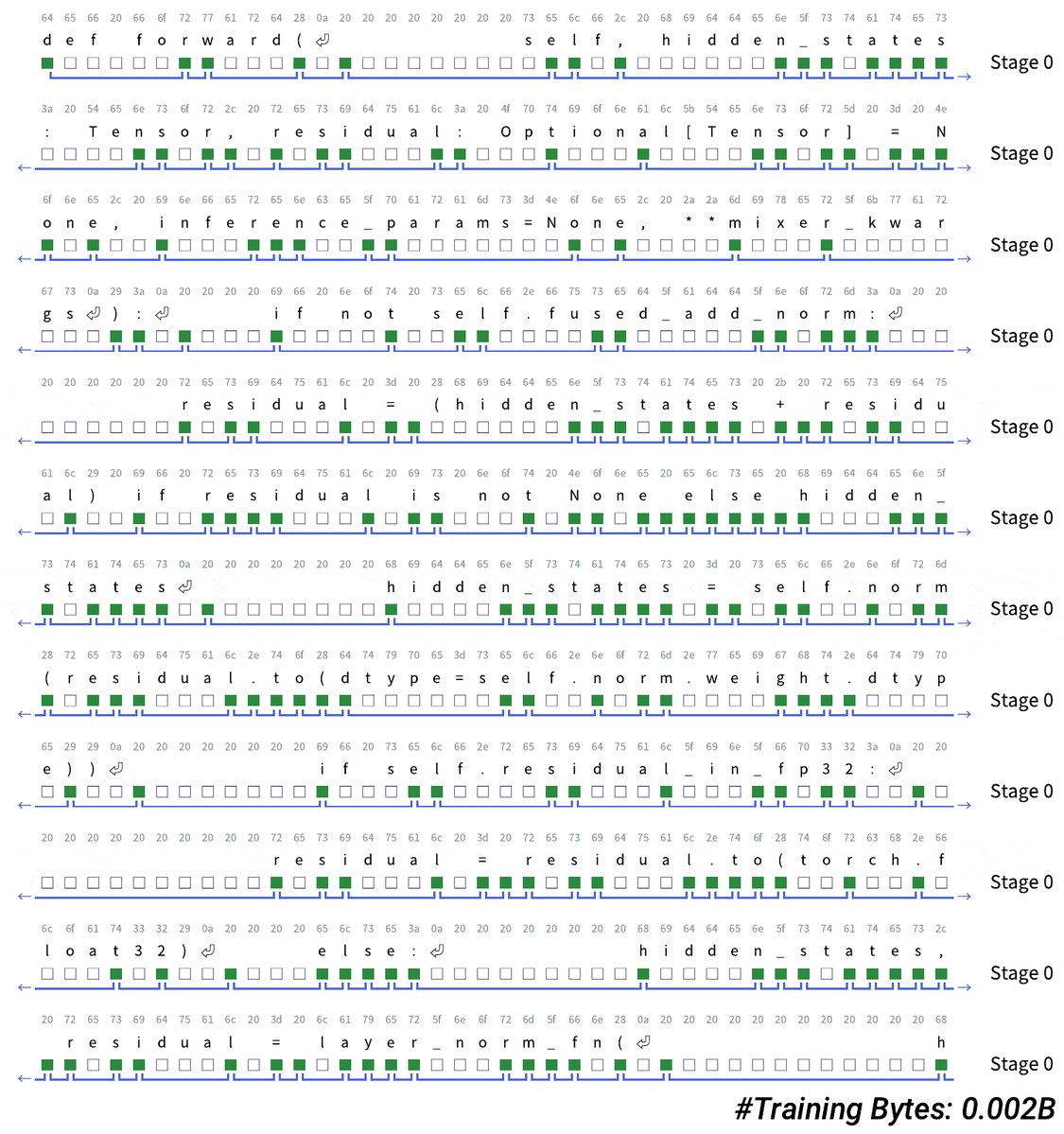

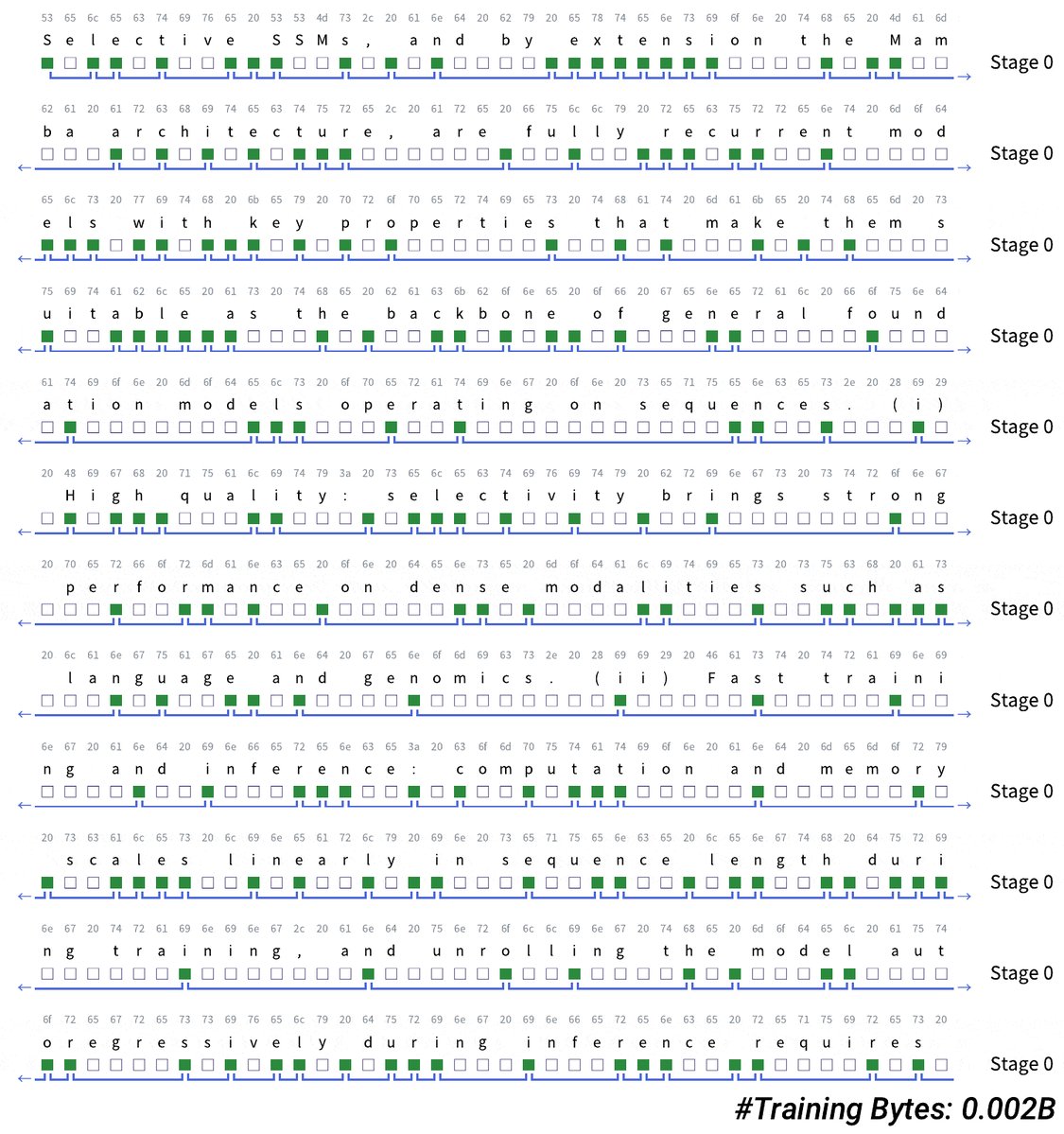

Tokenization has been the final barrier to truly end-to-end language models. We developed the H-Net: a hierarchical network that replaces tokenization with a dynamic chunking process directly inside the model, automatically discovering and operating over meaningful units of data

12

52

770

RT @cartesia_ai: 🚨 As many of you know, Play AI is in the process of shutting down after their acquisition a few weeks ago. Their API is al…

0

8

0

@_albertgu interpretable methods in comp bio seem quite important to yielding real biological insights -- really excited about the potential for using h-net to find structured blocks in less (visibly) structured parts of the genome.

0

0

5

this is actually my hypothesis as to why h-net scales so well on DNA -- non-coding DNA has lots of uninformative/noisy bps, and h-net is able to filter these out effectively. (notice that this is very similar to the synthetic @_albertgu proposes in ).

@AmberZqt @NiraliSomia @stevenyuyy Tokenizing nucleotides/kmers and treating each token equally is like injecting lots of random words between every word in a sentence and hoping that an LLM will learn the structure of the English language.

2

1

42

on a more personal note, this was my first real taste of ml research, and a super invigorating project at that. i had so much fun and learned an incredible amount from working with the amazing @sukjun_hwang. (and @_albertgu is pretty great too!).

2

0

47

we'll be at icml next week! i'll be there wed-sat and will be hanging out at the booth wednesday at 2pm (among other times probably). come say hi!

🚨 𝗖𝗮𝗿𝘁𝗲𝘀𝗶𝗮 𝗶𝘀 𝗵𝗲𝗮𝗱𝗶𝗻𝗴 𝘁𝗼 𝗜𝗖𝗠𝗟! 🚨 We’ll be on the exhibitor floor all week, come say hi! 👋 Check out what we're building in voice, meet the team, and geek out with us on the future of AI architectures. Whether you’re a researcher, engineer, or just

0

0

46

RT @alantomusiak: In my three years of being on Twitter, this is the tweet that I come back to the most often. In the age of AI, this als…

0

35

0

RT @krandiash: Exciting news, we're officially building Cartesia's India team in Bangalore. We'll start with a 5 person team in-person in B…

0

35

0

RT @cartesia_ai: Introducing Pro Voice Cloning. Fine-tune our ultra-fast Sonic model on your own voice data to create hyperrealistic replic…

0

9

0

RT @elipughresearch: Also check out the comparison here - tl;dr is: cartesia: 180ms, 2% WER; gpt4o-audio-preview: 330…

0

1

0

RT @avivbick: 🔥 Llama-level performance with <0.1% of the training data 🔥. Together with @cartesia_ai, we introduce Llamba—a family of recu…

0

22

0

RT @BlancheMinerva: It's really disappointing to watch US media orgs consistently push out drivel about DeepSeek. The US is so obsessed wi…

0

51

0

RT @cartesia_ai: Today we are launching a new model powering Cartesia's Voice Changer – the ultimate tool to transform, clone, and localize…

0

21

0