Eduardo Sánchez

@eduardosg_ai

Followers: 216
Following: 267
Media: 30
Statuses: 48

Research Scientist at @Meta. PhD Student at @ucl_nlp. Formerly MSc AI at @UM_DACS & BSc CS at @MatCom_UH. Working on Low-Resource MT and linguistic reasoning.

London/Paris

Joined May 2022

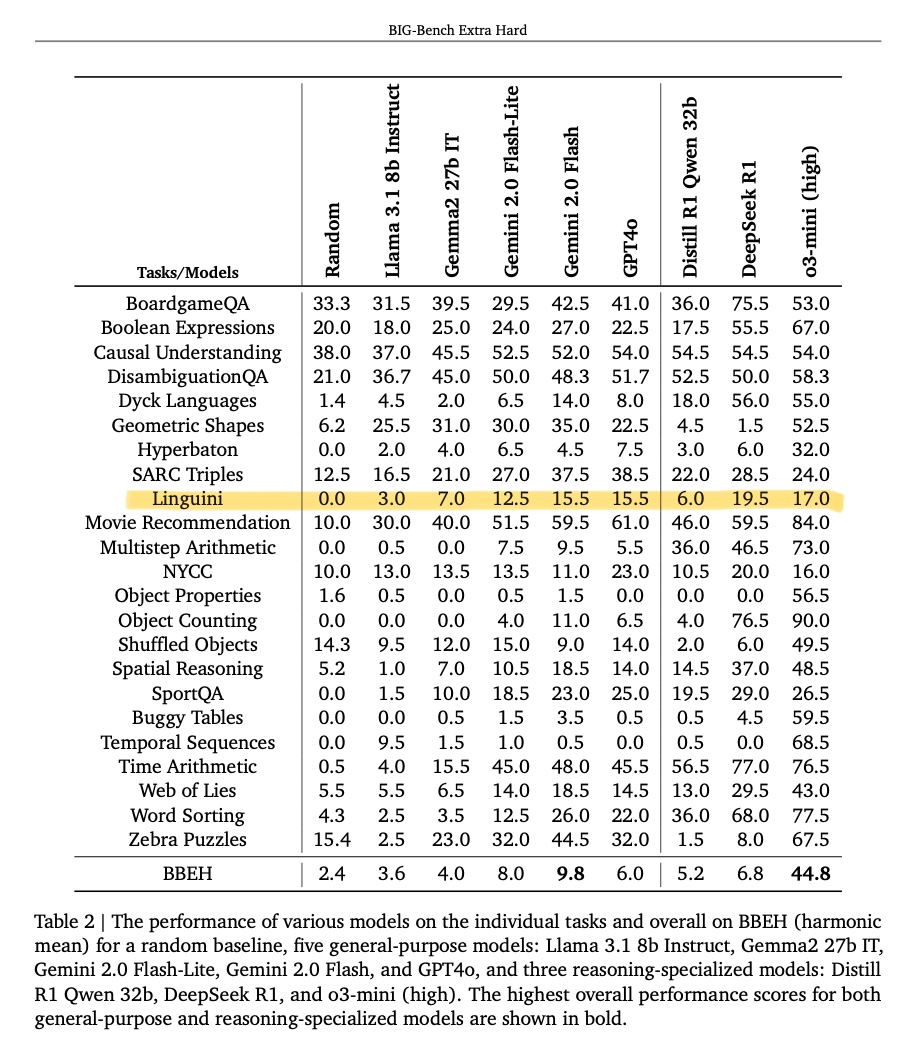

🚨NEW BENCHMARK🚨 Are LLMs good at linguistic reasoning when we minimize the chance of prior language memorization? We introduce Linguini🍝, a benchmark for linguistic reasoning on which SOTA models score below 25%. w/ @b_alastruey, @artetxem, @costajussamarta et al. 🧵(1/n)

Some interesting work quantifying the curse of multilinguality! Congrats, @b_alastruey and @JoaoMJaneiro!

🚀New paper alert!🚀 In our work at @AIatMeta, we dive into the struggles of mixing languages in massively multilingual Transformer encoders and use this analysis to guide the design of multilingual models for optimal performance. 📄: 🧵(1/n)

Linguini is available on GitHub and on Hugging Face. You can read our paper here 👉 and BBEH's paper here 👉. Happy saturating! 🍝

github.com

Linguini is a benchmark to measure a language model’s linguistic reasoning skills without relying on pre-existing language-specific knowledge, based on the International Linguistic Olympiad problem...

RT @javifer_96: New ICLR 2025 (Oral) paper🚨 Do LLMs know what they don’t know? We observed internal mechanisms suggesting models recognize…

RT @AIatMeta: New research from Meta FAIR: Large Concept Models (LCM) is a fundamentally different paradigm for language modeling that deco…

Happy to share our team's work on the Large Concept Model, an alternative to token-based LLMs that operates in a multilingual embedding space. It unlocks zero-shot generalization and outperforms similarly sized SOTA LLMs on various summarization tasks in several languages.

Wrapping up the year and coinciding with #NeurIPS2024, today at Meta FAIR we’re releasing a collection of nine new open source AI research artifacts across our work in developing agents, robustness & safety and new architectures. More in the video from @jpineau1. All of this

RT @b_alastruey: 🚨New #EMNLP Main paper🚨 What is the impact of ASR pretraining in Direct Speech Translation models?🤔 In our work we use…

To build a benchmark of linguistic challenges in languages LLMs are unlikely to have seen before, we extracted problems from the International Linguistics Olympiad (@IOLing_official), a contest where participants must solve linguistic puzzles in (mostly) extremely low-resource languages. 🧵(2/n)