Eugene Bagdasarian

@ebagdasa

Followers: 1K · Following: 941 · Media: 62 · Statuses: 372

Challenge AI security and privacy practices. Asst Prof at UMass @manningcics. Researcher at @GoogleAI. he/him 🇦🇲 (opinions mine)

Amherst, MA

Joined April 2014

Filtering names w LLMs is easy, right? Plenty of privacy solutions out there claiming how well things work. However, our paper led by @dzungvietpham shows that things get tricky once we go to rare names in ambiguous contexts -- which could result in real harm if overlooked.

🙋 Can LLMs reliably detect PII such as person names? ‼️ Not really, especially if the context has ambiguity. 🖇️ Our work shows that LLMs can struggle to recognize person names in barely ambiguous contexts.


Thanks @niloofar_mire for moderating the session 😀! Thanks @EarlenceF, @jhasomesh, @christodorescu for organizing this awesome SAGAI workshop (and also for inviting me, haha)!

Join us at the SAGAI workshop @IEEESSP, @ebagdasa is talking about contextual integrity and security for AI agents!


RT @EarlenceF: Our @IEEESSP SAGAI workshop on systems-oriented security for AI agents has speaker details (abs/bio) on the website now: ht…


@tingwei_zhang1 @rishi_d_jha and OverThink: Slowdown Attacks on Reasoning LLMs, led by @abhinav_kumar26 with awesome help from @JaechulRoh and @AliNaseh6


Papers I've got a chance to present: 1. Adversarial Illusions in Multi-Modal Embeddings, led by @tingwei_zhang1 and @rishi_d_jha! (This paper got a Distinguished Award at USENIX Security'24.)

@USENIXSecurity @tingwei_zhang1 @rishi_d_jha @cornell_tech @manningcics @AIatMeta However, this design creates an opportunity for adversaries to compromise all pipelines at the same time! We show that it's possible to craft an "illusion": a perturbation that replaces the embedding of the original input with the embedding of an adversary-chosen one. (6/n)


Amazing forward-looking paper on how collaboration could be done where you and I have different perspectives.

Suppose you and I both have different features about the same instance. Maybe I have CT scans and you have physician notes. We'd like to collaborate to make predictions that are more accurate than possible from either feature set alone, while only having to train on our own data.


RT @nandofioretto: The Privacy Preserving AI workshop is back! And is happening on Monday. I am excited about our program and lineup of in…


RT @egor_zverev_ai: (1/n) In our #ICLR2025 paper, we explore a fundamental issue that enables prompt injections: 𝐋𝐋𝐌𝐬’ 𝐢𝐧𝐚𝐛𝐢𝐥𝐢𝐭𝐲 𝐭𝐨 𝐬𝐞𝐩𝐚𝐫𝐚…


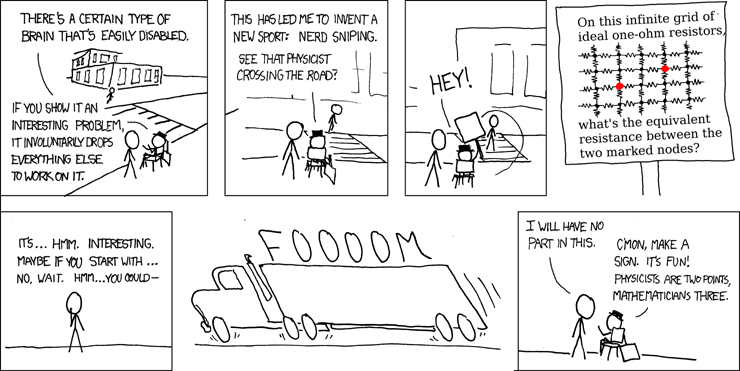

Nerd sniping is probably the coolest description of this phenomenon (@woj_zaremba et al. described it recently), but in our case overthinking didn't lead to any drastic consequences besides higher costs.

Ha! You can nerdsnipe reasoning models with decoy problems to make them overthink and slow them down/make them more expensive to run.


How can Sudokus waste your money? If you are using reasoning LLMs with public data, adversaries could pollute it with nonsense (but perfectly safe!) tasks that slow down reasoning and amplify overheads 💰 (as you pay for reasoning tokens but do not see them) while keeping answers intact.

🧠💸 "We made reasoning models overthink, and it's costing them big time." Meet 🤯 #OVERTHINK 🤯, our new attack that forces reasoning LLMs to "overthink," slowing models like OpenAI's o1, o3-mini & DeepSeek-R1 by up to 46× by amplifying the number of reasoning tokens. 🛠️ Key


RT @sahar_abdelnabi: OpenAI Operator enables users to automate complex tasks, e.g., travel plans. Services, e.g., Expedia, use chatbots.…


🧙 I am recruiting PhD students and postdocs to work together on making sure AI Systems and Agents are built safely and respect privacy (+ other social values). Apply to UMass Amherst @manningcics and enjoy a beautiful town in Western Massachusetts. Reach out if you have questions!
