David A Roberts

@david_ar

Followers: 403 · Following: 9K · Media: 52 · Statuses: 583

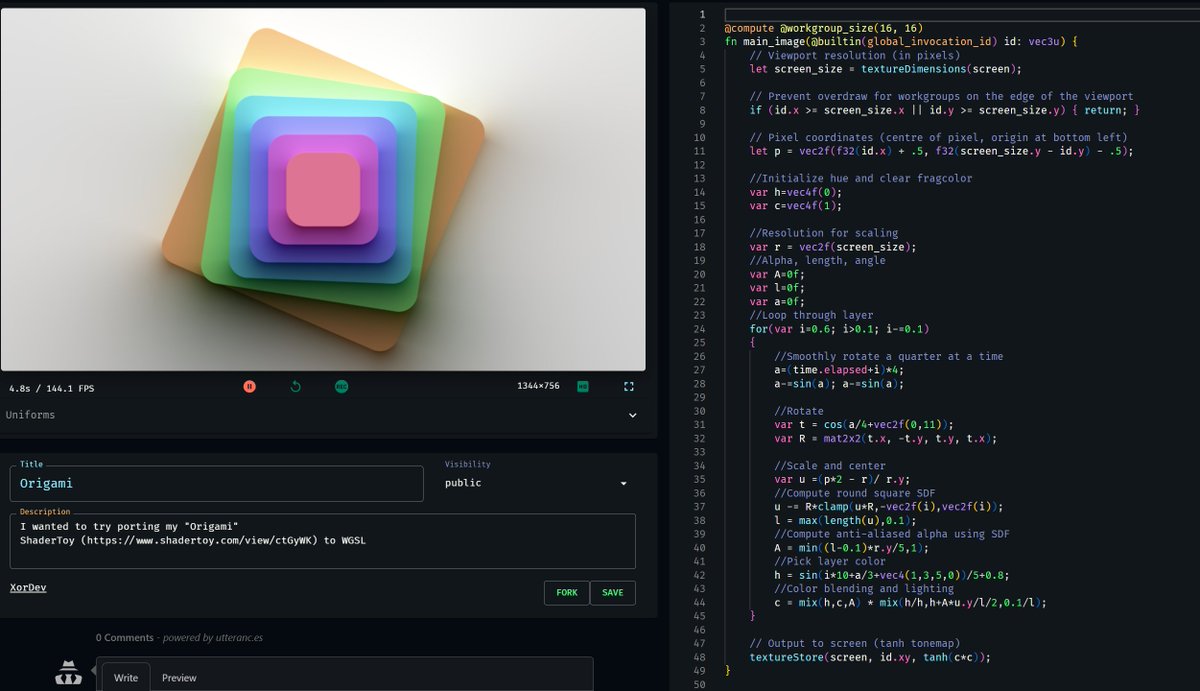

RT @Michael_Moroz_: finally got the Slang compiler integrated, which means it's finally time to ditch WGSL 🎉🎉. I've p…

RT @Michael_Moroz_: Made a mixed-radix FFT implementation in compute.toys. It is neat, but I still wonder…

compute.toys: Forked from https://compute.toys/view/1914 Faster version

RT @jon_barron: Optical illusions with diffusion models. There are so many good gifs on this page but honestly I would like several million….

RT @Michael_Moroz_: Made a really weird fractal flame based on a mandelbulb, looks like a moving stardust cloud. (oh the codec aint gonna l….

Likewise, when people ask whether LLMs *have* a world model, they're fundamentally getting it inside out. An LLM *is* a world model. RLHF/SFT tries to collapse it down to a single character, but it's still a model of the world focused on that character, rather than vice versa.

@anthrupad Yeah, the exclusive focus on a superposition of single agents/characters is another vestige of anthropomorphism.

This seems promising: porting code between languages is one of GPT-4's stronger skills (it's a kind of translation task, after all), and they're able to formally verify the result's correctness, so it can keep iterating until it gets it right.

In our latest blog, Galois Research Engineer Adam Karvonen writes about his experiment applying GPT-4 to the task of refolding macros into the Rust program.
