Volodymyr Kyrylov

@darkproger

Followers: 3K · Following: 19K · Media: 779 · Statuses: 16K

Technical Staff at OpenAI. AI student from USI/ETH. Donate https://t.co/GDSkWG30ZS

San Francisco, CA

Joined April 2008

Happy to release Accelerated Scan, a kernel library for first order parallel associative scans in vanilla @PyTorch, Triton 2.2.0 and CUDA C++. `pip install accelerated-scan` 🧵

this time is the charm

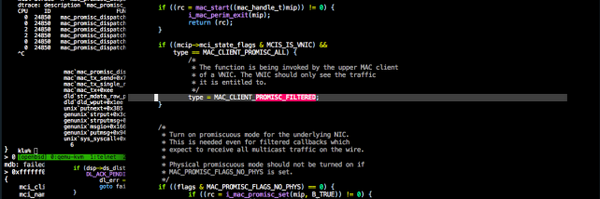

macapptree is an amazing tool for screen perception in gpt-oss!

Wild NeurIPS moment: @darkproger from @OpenAI told me he uses our open-source macapptree as his go-to tool for parsing macOS accessibility 🤯 Made my day!

Excited to be talking about gpt-oss today!

I am excited to be organizing the 8th scaling workshop at @NeurIPSConf this year! Dec 5-6 | 5-8pm PT | Hard Rock Hotel San Diego. Co-organized by @cerebras, @Mila_Quebec, and @mbzuai. Register:

The Strategic Explorations team @OpenAI is looking to recruit researchers interested in working on the next frontier of language modeling! Feel free to reach out to me by email. @darkproger and I will also be at NeurIPS to connect and discuss in person.

it feels so nice to start sweeping once the implementation is not buggy any more

as of 5 minutes ago, our gpt-oss implementation is merged into torchtitan! thanks to all the work by @jianiw_wang @__tianyu at @pytorch making it clean & scalable for the community ❤️ i hope y'all play around with training gpt-oss, it's great for its sparsity & reasoning

periodic ❤️ open-source! for example, we’ve been collaborating with the @PyTorch team to build the highest MFU gpt-oss training implementation (includes thinky sinky flexattn) here’s a few SFT runs of gpt-oss-20b & 120b, where i get ~24% MFU for 20b and ~8% for 120b

To authenticate codex on spark, do: `scp -r .codex vol@spark-abcd.local:` assuming it’s already working on your box with a screen and you are vol.

LLMs are winning IOI golds & crushing code gen, but can they verify correctness? In Feb, our benchmark saw single digit scores with o3-mini. We re-ran our evals with the latest open models: GPT-OSS gets 21.6% at demonstrating bugs in code! Progress ✅ But verification's still hard

gpt-oss-20b with medium effort measures up to Gemini 2.5 Pro on Ukrainian competitive programming. Thanks anonymous-researcher-ua for running the experiment and developing the benchmark

👀 we care a lot about correctness, ran many evals and stared at many tensors to compare them. numerics of vLLM on hopper should be solid and verified! if you run into any correctness issue on vLLM, we would love to know and debug them!

Heads-up for developers trying gpt-oss: performance and correctness can vary a bit across providers and runtimes right now due to implementation differences. We’re working with inference providers to make sure gpt-oss performs at its best everywhere, and we’d love your feedback!

Yes, I’m very sure vllm is correct — we spent quite a bit of time on that. 🥹

👀 we care a lot about correctness, ran many evals and stared at many tensors to compare them. numerics of vLLM on hopper should be solid and verified! if you run into any correctness issue on vLLM, we would love to know and debug them!

correctness takes time! Stay patient

Heads-up for developers trying gpt-oss: performance and correctness can vary a bit across providers and runtimes right now due to implementation differences. We’re working with inference providers to make sure gpt-oss performs at its best everywhere, and we’d love your feedback!

Very excited to see this model released to the open-source community. It's still hard to believe that our latest techniques allow it to be so incredibly powerful yet so remarkably small.

super excited to have contributed to gpt-oss. We have put a lot of love into both training the model and making the developer examples, check them out:

HealthBench is the coolest eval I got to run yet. Reproduce it here:

OpenAI’s new gpt-oss models are our “healthiest” models pound-for-pound. 💥 The 120b model outperforms all our other frontier models on HealthBench–GPT-4o, o1, o4-mini–except o3, which it nearly matches despite being much smaller. Even healthier models to come soon! 👇

the model is very smart! after the release we found that the model scores 82.2 on GPQA with tools if we improve answer extraction

gpt-oss is our new open-weight model family! the bigger one runs on a single GPU, you can run the small one on your laptop. Go install it right now, seriously! Telling your laptop to do something and watching it happen made me feel the AGI like nothing since ChatGPT.

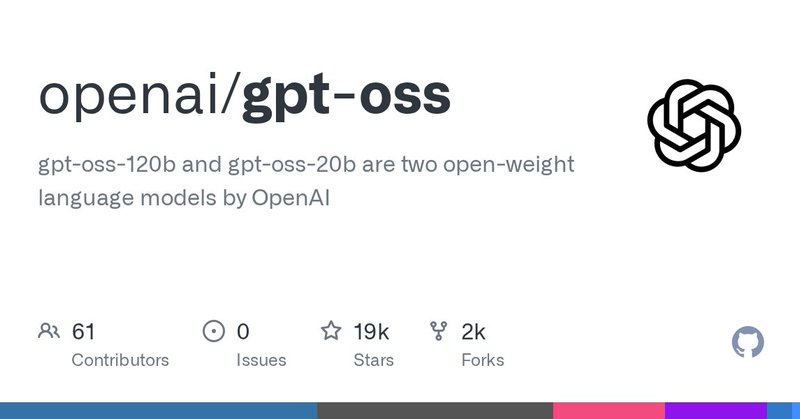

Today we release gpt-oss-120b and gpt-oss-20b—two open-weight LLMs that deliver strong performance and agentic tool use. Before release, we ran a first of its kind safety analysis where we fine-tuned the models to intentionally maximize their bio and cyber capabilities 🧵

super excited to have contributed to gpt-oss. We have put a lot of love into both training the model and making the developer examples, check them out:

github.com

gpt-oss-120b and gpt-oss-20b are two open-weight language models by OpenAI - openai/gpt-oss
