Chris Pal

@chrisjpal

Followers: 1K · Following: 769 · Media: 44 · Statuses: 551

Professor

Montréal, Québec

Joined March 2014

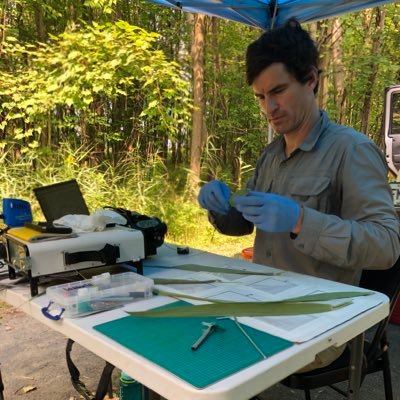

Very proud of my colleague @etnlalib, his group, collaborators, and my own students who, as part of team Limelight, have won a ($10M) XPrize focused on increasing our understanding of the Amazon Rainforest #XPRIZERainforest. Thanks to @IVADO_Qc for the support!

We’re so thrilled to announce the winners of the #XPRIZERainforest competition! 🥇Limelight Rainforest 🥈Map of Life Rapid Assessments 🥉Brazilian Team 🏆Bonus Prize winner: @ETHBiodivX Learn more about the teams in our video below. ⬇️ cc: @ColoradoMesaU

It’s amazing—I’m quite surprised!—how much LLM reasoning has improved in 2025. Main message: current top models—GPT-5, Grok 4, Gemini 2.5 Pro—are way better vs GPT-4o or Llama 3. A careful—even skeptical—formal proof eval by K Arkoudas & @s_batzoglou. https://t.co/rstrVyLMCv

New at EMNLP: PROOFGRID evaluates AI reasoning via formal proofs—can models write, check, and edit proofs? In one year we see striking gains: today’s top systems far surpass last year’s.

In-flight weight updates have gone from a “weird trick” to a must for training LLMs with RL in the last few weeks. If you want to understand the on-policy and throughput benefits, here’s the CoLM talk @DBahdanau and I gave:

Generative Point Tracking with Flow Matching. My latest project with @AdamWHarley, @CSProfKGD, @DerekRenderling, and @chrisjpal. Project page: https://t.co/cs4zFEuLYU Paper: https://t.co/sa9NdFlOgP Code: https://t.co/F4Ug3JWkRX

✨ What if we could tune Frontier LLM agents without touching any weights? Meet JEF-Hinter, an agent capable of analyzing multiple offline trajectories to extract auditable and timely hints💡 In our new paper 📄 , we show significant performance gains on downstream tasks ⚡ with

We've done lots of good work since the PipelineRL release in May: ⚙️ higher throughput, seq parallel training, multimodal, agentic RL 📜 white paper with great explanations and results: https://t.co/F3YsIbNRUy We'll present today at CoLM EXPO, room 524C, 1pm!

Very excited to be presenting Pipeline RL this afternoon at CoLM. Join us if you are interested in fast on-policy RL training for LLMs 🚀

New paper 📜: Tiny Recursion Model (TRM) is a recursive reasoning approach with a tiny 7M-parameter neural network that obtains 45% on ARC-AGI-1 and 8% on ARC-AGI-2, beating most LLMs. Blog: https://t.co/w5ZDsHDDPE Code: https://t.co/7UgKuD9Yll Paper:

arxiv.org

Hierarchical Reasoning Model (HRM) is a novel approach using two small neural networks recursing at different frequencies. This biologically inspired method beats Large Language models (LLMs) on...

With recursive reasoning, it turns out that “less is more”. A tiny model pretrained from scratch, recursing on itself and updating its answers over time, can achieve a lot without breaking the bank.

Follow Mila researchers on day 2 of #COLM2025. Full schedule of Mila-affiliated presentations here https://t.co/e4298p390Y

Follow Mila researchers on day 2 of #COLM2025. Full schedule available here https://t.co/JXvo6ASlhG

with yours truly

🧠 Don’t miss it at #COLM2025! SOCIAL: “Reasoning LLMs – Tips & Tricks Discussion” 📅 Tue, Oct 7 | 🕐 1:00–2:30 PM | 📍 Room 517BC Co-presented by ServiceNow AI Research + NVIDIA with Torsten Scholak (ServiceNow) & Olivier Delalleau (NVIDIA). Join the discussion on advancing

Huan Sun (@hhsun1) on improving safety of AI agents -- capabilities and safety should go hand-in-hand, not an afterthought -- definition: safety is unintentional alignment, and security involves adversarial attacks. For this talk, safety also involves security. -- Safety has to

The IVADO workshop on Agent Capabilities and Safety is happening now at HEC Montreal, Downtown (Oct 3--6) https://t.co/MEL4JAzLRn

#LLMAgents

SLAM Labs presents Apriel-1.5-15B-Thinker 🚀 An open-weights multimodal reasoning model that hits frontier-level performance with just a fraction of the compute.

@alexpiche_ Perfect time for @OpenAI to talk about reducing hallucinations! 'Cause you know, our work on learning to abstain by iterative self-reflection with @alexpiche_ @amilios and @chrisjpal has just been accepted at TMLR 😉

Glad to see OpenAI prioritizing abstention responses in their paper! That's a great intro to our TMLR paper, in which we developed an iterative self-reflection method that lets LLMs know when to abstain, without ground truth and at no additional cost at test time. https://t.co/xwNT68ejqm

New research explains why LLMs hallucinate, through a connection between supervised and self-supervised learning. We also describe a key obstacle that can be removed to reduce them. 🧵 https://t.co/6Lb6xlg0SZ

UI-Vision vs GPT-5: Still holding the crown 👑 and far from saturation. GPT-5 has strengths in coding and reasoning, but when it comes to computer-use tasks, it’s still awkward to rely on it alone. And our team's UI-Vision (ICML 2025) remains a key and still unbeaten multimodal

We built a new **autoregressive + RL** image editing model using a strong verifier, and it beats SOTA diffusion baselines using 5× less data. 🔥 **EARL**: a simple, scalable RL pipeline for high-quality, controllable edits. 🧵1/

I think listening to this, with an amplifier set to 11, would be appropriate today.

Finally finished a blog post I've been working on (on and off) for months. It builds on a TMLR paper I published last year in model-based optimisation, but I wanted to explain things more clearly this time. More honest, more readable, more reflective. https://t.co/BBlaBDNUAP

beckham.nz

I’ve been thinking about offline model-based optimisation for the past six months, mostly in the background while working full-time, trying to write up something that captures both what I found and...

We’re releasing SelvaBox, the largest tropical tree detection dataset from drone imagery. Our models trained on SelvaBox achieve competitive zero-shot detection performance on unseen tropical tree crown datasets, matching or exceeding competing methods. https://t.co/GGNoRdqHtw
