Chris Russell

@c_russl

Followers

689

Following

1K

Media

6

Statuses

225

Associate professor of AI, government, and policy at the Oxford Internet Institute. ELLIS fellow. Formerly AWS and the Alan Turing Institute

Joined September 2018

One important part of this paper is that we show a common test for indirect discrimination in the EU is biased against minorities. This is particularly bad for smaller groups: Roma, LGBTQ+, various religions, and many races. 1/

My work Why fairness cannot be automated: Bridging the gap between EU non-discrimination law & AI https://t.co/0RpAfKXRWI on compatibility of fairness metrics used by the ECJ & CS. We show which parts of AI fairness can & cannot (& should not) be automated +ideas 4 bias audits

4

10

26

🚀 Paper news! Excited that our paper, with @b_mittelstadt & @c_russl was accepted at the NeurIPS Safe GenAI workshop! In this work we explore how fine-tuning can impact toxicity rates in language models... 🧵

1

6

21

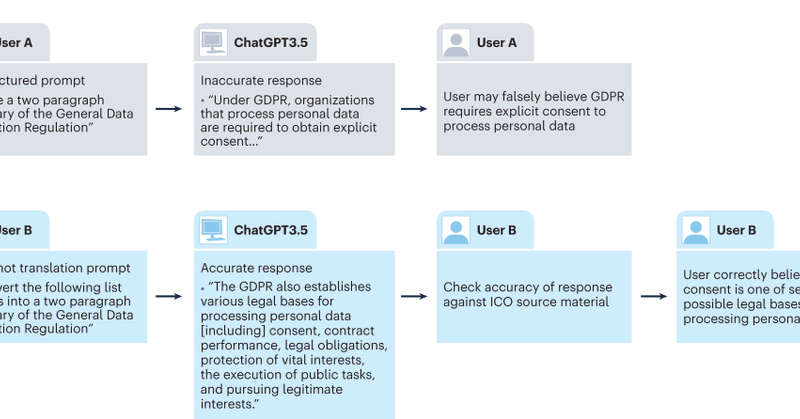

Another example of what I, @b_mittelstadt & @c_russl termed careless speech. Subtle hallucinations are dangerous & developers are not (yet) liable for them. We argue they should be. See paper: Do LLMs have a legal duty to tell the truth? https://t.co/oVM3etcaJZ

apnews.com

Whisper is a popular transcription tool powered by artificial intelligence, but it has a major flaw. It makes things up that were never said.

2

12

27

🚨 [AI REGULATION] The paper "Do Large Language Models Have a Legal Duty to Tell the Truth?" by @SandraWachter5, @b_mittelstadt & @c_russl is a MUST-READ for everyone in AI governance. Quotes: "Free and unthinking use of LLMs undermine science, education and public discourse in

1

17

52

Such an honour to be featured in this @Nature @metricausa article w/@b_mittelstadt @c_russl on our work on GenAI, truth & hallucinations

1

4

23

Delighted to see coverage of our new paper on truth and LLMs in @newscientist ! https://t.co/MGZmPyHhi6

@oiioxford @UniofOxford @OxGovTech @SandraWachter5 @c_russl

newscientist.com

To address the problem of AIs generating inaccurate information, a team of ethicists says there should be legal obligations for companies to reduce the risk of errors, but there are doubts about...

2

15

39

New open access paper on hallucinations in LLMs out now in Royal Society Open Science: 'Do large language models have a legal duty to tell the truth?' w/ @SandraWachter5 and @c_russl

https://t.co/FJljkbIYCw

@royalsociety @oiioxford @UniofOxford @OxGovTech

royalsocietypublishing.org

Abstract. Careless speech is a new type of harm created by large language models (LLM) that poses cumulative, long-term risks to science, education and sha

3

25

58

🚨New paper and fairness toolkit alert🚨 Announcing OxonFair: A Flexible Toolkit for Algorithmic Fairness w/@fuzihaofzh, @SandraWachter5, @b_mittelstadt and @c_russl toolkit - https://t.co/jSE0hhsYAw paper -

github.com

Fairness toolkit for pytorch, scikit learn and autogluon - oxfordinternetinstitute/oxonfair

1

13

27

Congrats Algorithm Audit for this important work & for uncovering systemic discrimination in access to education. I am thrilled that my paper "Why Fairness Cannot Be Automated" https://t.co/inItgL10WK w/@b_mittelstadt @c_russl was useful for the study!

0

20

64

My new paper w/@b_mittelstadt @c_russl "Do LLMs have a legal duty to tell the truth?" We explore whether developers need to reduce hallucinations, inaccurate & harmful outputs, or what we term "careless speech". We show who is liable for GenAI outputs.

6

31

106

📢 We're calling upon all #Monocular #Depth enthusiasts to join the #challenge and partake in our #CVPR workshop. Dive deeper into the details of the workshop and challenge on our website: https://t.co/73B3EUVhUa

#computervision #ai #mde #mdec #monodepth #cvpr24

0

3

4

#AI #AIEthics A must-read research paper, by Sandra Wachter [@SandraWachter5], Brent Mittelstadt [@b_mittelstadt] & Chris Russell [@c_russl], on how to make Large Language Models (LLMs) more Truthful and Counter #misinformation 👇 To Protect Science, We Must Use LLMs as

4

38

44

This is one of my favourite baselines. Instead of augmenting your dataset with synthetic images, you can use the prompt to search the dataset used to train the diffusion model. It's cheap, and there are significant improvements in performance.

0

0

9

The 3rd edition of MDEC will be starting in just two days! See 👇🏻 for details on submitting to the challenge and look forward to some great keynotes at #CVPR24! @CVPR @cvssp_research

We’re very excited to announce that the 3rd edition of MDEC will be taking place at @CVPR in Seattle! The challenge, accepting both supervised and self-supervised approaches, will be running from 1st Feb - 25th Mar on CodaLab ( https://t.co/yV3kq33vWH) 1/2

0

5

9

Great to see work by Profs @SandraWachter5, @b_mittelstadt and Chris Russell, all @oiioxford, referenced as a case study for cross-disciplinary impact in this new @AcadSocSciences report.

Published today our new report emphasises the vitally important yet poorly-understood role of the #socialsciences to the UK’s current research, development & innovation system. Hear from @jameswilsdon below for more. Read the report ➡️ https://t.co/PES4cFvmrd

@Sage_Publishing

0

7

11

Excited for my keynote @NeurIPSConf tmr 16.12 at 9:30am CST "Regulating Code: What the EU has in stock for the governance of AI, foundation models, & generative AI" incl my new paper in @NatureHumBehav

https://t.co/K01orMUyRF w/@b_mittelstadt @c_russl

2

1

4

Can't wait for my keynote @NeurIPSConf 16.12 at 9:30am CST "Regulating Code: What the EU has in stock for the governance of AI, foundation models, & generative AI" incl my new paper in @NatureHumBehav

https://t.co/K01orMUyRF w/@b_mittelstadt @c_russl

regulatableml.github.io

Towards Bridging the Gaps between Machine Learning Research and Regulations

0

4

20

SO incredibly excited to give a keynote @NeurIPSConf at the Regulatable ML Workshop on my new @NatureHumBehav paper https://t.co/K01orMUyRF w/@b_mittelstadt @c_russl alongside so many outstanding speakers! See you 16 Dec 9.30am CST! https://t.co/IZs19PONhp

@oiioxford @BKCHarvard

regulatableml.github.io

Towards Bridging the Gaps between Machine Learning Research and Regulations

0

5

15

How can we use LLMs like ChatGPT safely in science, research & education? In our new @NatureHumBehav paper we advocate for prompting AI with true information using zero-shot translation to avoid hallucinations. Paper: https://t.co/D8rqsUyx0N

@oiioxford @SandraWachter5 @c_russl

0

2

19

Fresh off the press my new paper @Nature @NatureHumBehav w/@b_mittelstadt @c_russl "To protect science, we must use LLMs as zero-shot translators" where we show how GenAI poses huge risks to science & society & what can be done to stop it https://t.co/PTEnQyLyqB

@oiioxford

nature.com

Nature Human Behaviour - Large language models (LLMs) do not distinguish between fact and fiction. They will return an answer to almost any prompt, yet factually incorrect responses are...

2

19

56

News release alert! Large Language Models pose risk to science with false answers, say Oxford AI experts @b_mittelstadt @SandraWachter5 @c_russl @oiioxford @oxsocsci @UniofOxford 1/3 #LLMs

https://t.co/O6XCgBnFop

oii.ox.ac.uk

Large Language Models (LLMs) pose a direct threat to science, because of so-called ‘hallucinations’ and should be restricted to protect scientific truth, says a new paper from leading AI researchers...

2

9

23