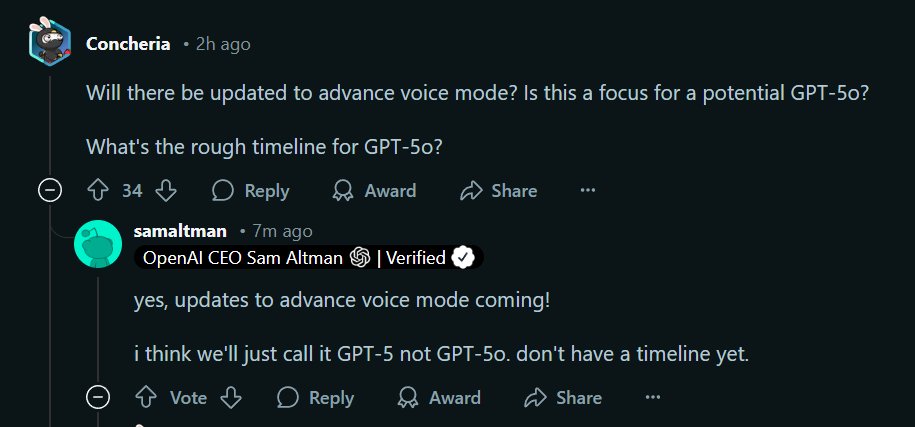

bycloud

@bycloudai

Followers: 8K · Following: 4K · Media: 627 · Statuses: 1K

I make youtube vids on cool AI research /// AI papers newsletter https://t.co/Xn7GMDbQSd /// paper recap @TheAITimeline /// building @findmypapersAI

Joined January 2020

no model gets past 66% accuracy @ 120k tokens except Gemini 2.5 Pro, which sits at 90%. even the new GPT-4.1 with 1M ctx is stuck at 60%. (please tell us your secret gemini🥺)

Long Context benchmark updated with GPT-4.1. Looks like it's the "optimus" version instead of the better-performing original "quasar". The smaller versions are not usable in long context.

what also intrigued me is that @ 120k context, 2.5 pro scored 90% accuracy while no one else crossed 66%. everyone else starts to fall off hard @ 4k. what new attention technique did google invent??? (and why is there a sudden dip at 16k???)

small tangent: people always ask about gemini's context window. yeah it's big, and it probably uses some sliding-window-like architecture too (don't quote me). most notably though, google has its own proprietary accelerators called TPUs, with much more accelerator memory to work with, so they can fit larger contexts.
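The memory side of that tangent can be made concrete with back-of-the-envelope arithmetic. This is an assumption-laden sketch, not Gemini's architecture (which isn't public): it estimates the key/value cache a generic transformer would hold for one 120k-token prompt, with and without a sliding window. The layer count, KV-head count, head size, 4k window, and 2-byte (16-bit) values are all hypothetical placeholders.

```python
# Rough KV-cache size estimate for one sequence, full attention vs. a sliding window.
# Every config value below is a hypothetical placeholder, NOT Gemini's (unpublished) architecture.

def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_value=2, window=None):
    """Bytes needed to cache keys and values for `seq_len` tokens.

    With a sliding window, each layer only keeps the last `window` tokens,
    so the cache stops growing once seq_len exceeds the window.
    """
    cached_tokens = seq_len if window is None else min(seq_len, window)
    # factor 2 = one key vector + one value vector per token, per KV head, per layer
    return 2 * n_layers * n_kv_heads * head_dim * cached_tokens * bytes_per_value


if __name__ == "__main__":
    cfg = dict(n_layers=48, n_kv_heads=8, head_dim=128)  # hypothetical model config
    full = kv_cache_bytes(120_000, **cfg)
    windowed = kv_cache_bytes(120_000, **cfg, window=4_096)
    print(f"full attention: {full / 2**30:.1f} GiB")
    print(f"4k sliding window: {windowed / 2**30:.2f} GiB")
```

With these made-up numbers the full cache comes to about 22 GiB per sequence versus 0.75 GiB with a 4k window, which is why either more memory per chip or some windowing trick (or both) matters for very long contexts.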

> llama-4 series got 0% on ARC-AGI 2
> scout got 0.5% and maverick got 4.38% on ARC-AGI 1

Llama 4 Maverick and Scout on ARC-AGI's Semi Private Evaluation.

Maverick:
* ARC-AGI-1: 4.38% ($0.0078/task)
* ARC-AGI-2: 0.00% ($0.0121/task)

Scout:
* ARC-AGI-1: 0.50% ($0.0041/task)
* ARC-AGI-2: 0.00% ($0.0062/task)

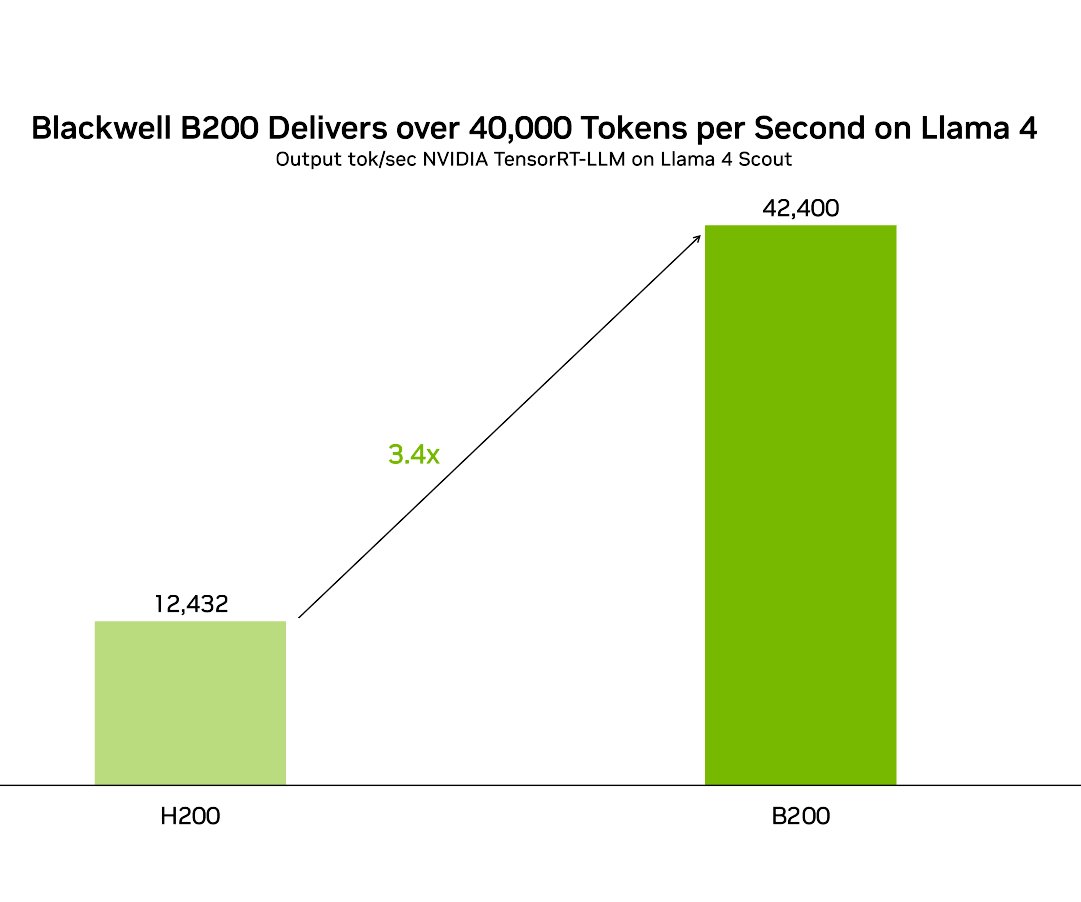

the speed is like generating a harry potter book in 2 seconds 💀.

👀 Accelerate performance of @AIatMeta Llama 4 Maverick and Llama 4 Scout using our optimizations in #opensource TensorRT-LLM.⚡ ✅ NVIDIA Blackwell B200 delivers over 42,000 tokens per second on Llama 4 Scout, over 32,000 tokens per second on Llama 4 Maverick. ✅ 3.4X more
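A quick sanity check of the "harry potter book in 2 seconds" joke, as a sketch under loud assumptions: roughly 77,000 words for the first Harry Potter book and roughly 0.75 English words per token are both approximations chosen here, not figures from either tweet.

```python
# Back-of-the-envelope check of "a harry potter book in 2 seconds".
# Assumptions (not from the quoted tweet): ~77,000 words per book, ~0.75 English words per token.

def seconds_to_generate(words, tokens_per_second, words_per_token=0.75):
    """Time to emit `words` words at a given token rate."""
    tokens_needed = words / words_per_token
    return tokens_needed / tokens_per_second


if __name__ == "__main__":
    book_words = 77_000  # rough word count assumed for the first Harry Potter book
    for model, tps in [("Llama 4 Scout", 42_000), ("Llama 4 Maverick", 32_000)]:
        print(f"{model}: {seconds_to_generate(book_words, tps):.1f} s")
```

Under these assumptions Scout lands at about 2.4 s and Maverick at about 3.2 s, so the joke holds within a second. One caveat: a headline figure like 42,000 tokens/s may be aggregate throughput across many concurrent requests rather than a single stream, in which case one book would take longer.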

no paper, empty github, and the project page that had the technical details got unpublished. bruh. please don't normalize this, it's just embarrassing

Tencent presents GameGen-O. Open-world Video Game Generation. We introduce GameGen-O, the first diffusion transformer model tailored for the generation of open-world video games. This model facilitates high-quality, open-domain generation by simulating a wide array of game engine

I drew it, so the bar might be off by a tiny bit. would be interesting to see this across more benchmarks ngl, but i'm editing rn

@bycloudai Add Claude 3.7.

Within 24 hours, we got:
- Google: Gemini 1.5 Pro
- Meta: V-JEPA
- OpenAI: Sora
- Mistral: Next

@DrJimFan is NVIDIA really not cooking anything?👀

> mamba-transformer hybrid reasoning model nearly on par with DeepSeek-R1

what.

🚀 Introducing Hunyuan-T1! 🌟 Meet Hunyuan-T1, the latest breakthrough in AI reasoning! Powered by Hunyuan TurboS, it's built for speed, accuracy, and efficiency. 🔥 ✅ Hybrid-Mamba-Transformer MoE Architecture – The first of its kind for ultra-large-scale reasoning. ✅ Strong
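The announcement doesn't spell out the layer layout, so here is only a generic illustration of what a "hybrid Mamba-Transformer" stack usually means: most layers are Mamba (state-space) blocks with a fixed-size per-sequence state, with full-attention layers interleaved at some ratio. The 32-layer depth and 1-in-8 ratio are hypothetical, not Hunyuan-T1's configuration; the sketch just counts how many layers would still need a KV cache that grows with context length.

```python
# Generic hybrid stack layout: mostly Mamba (SSM) layers with periodic attention layers.
# Depth and ratio are illustrative assumptions, not Hunyuan-T1's actual configuration.

def hybrid_schedule(n_layers, attn_every):
    """Return a list of layer types, placing one attention layer every `attn_every` layers."""
    if attn_every < 1:
        raise ValueError("attn_every must be >= 1")
    return [
        "attention" if (i + 1) % attn_every == 0 else "mamba"
        for i in range(n_layers)
    ]


if __name__ == "__main__":
    layers = hybrid_schedule(n_layers=32, attn_every=8)
    n_attn = layers.count("attention")
    # Only attention layers keep a KV cache that grows with sequence length;
    # Mamba layers carry a fixed-size recurrent state instead.
    print(f"{n_attn}/{len(layers)} layers need a growing KV cache")
```

With one attention layer in eight, only 4 of the 32 layers keep a growing cache, which is the usual argument for why such hybrids are cheaper at long context than a pure transformer.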

i got UNBANNED??? I did not know that was possible holy shit. W in the chat

> be me
> about to launch my first app ever
> weeks of prep, hyped myself through the roof
> accept that failure is likely, still hyped anyway
> ready to announce to the world
> *deep breath*
> find out brand account on X got banned day b4
> bruh_face.gif
> speedrun fail any%

A small AI-generated art competition, along with my most recent video! Chance to win from a total prize pool of $1,500+ USD! [Details] [Submission Link] #AIart #discodiffusion #midjourney #AiArtwork #aiartist

👑Most Popular AI Research July 2022👑 Measured by total Twitter likes! #ArtificialIntelligence #MachineLearning

As I'm also making a video on model distillation, this is probs one of my favorite papers this week. So u basically distill a transformer into a mamba and it can "retain" its original capabilities. This performs the best on benchmarks of any "existing" RNN-attention hybrid. cope?

The Mamba in the Llama: Distilling and Accelerating Hybrid Models. Author's explanation: Overview: This work shows that large Transformer models can be distilled into linear RNNs, like Mamba, using a fraction of their attention layers while maintaining
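For readers new to distillation, the core training signal is usually the student matching the teacher's output distribution. Below is a minimal sketch of the generic temperature-scaled KL objective from standard knowledge distillation, written in plain Python over one token's logits. It is not the paper's full recipe (per the quoted summary, that also keeps a fraction of the teacher's attention layers); the logits and the temperature of 2.0 are made-up values.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_kl(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2 as is common in KD."""
    p = softmax(teacher_logits, temperature)  # teacher = target distribution
    q = softmax(student_logits, temperature)  # student = distribution being trained
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]  # e.g. a transformer teacher's logits for one token (made up)
    student = [3.0, 1.5, 0.5]  # e.g. a distilled Mamba student's logits (made up)
    print(f"loss = {distillation_kl(teacher, student):.4f}")
    print(f"identical logits -> {distillation_kl(teacher, teacher):.4f}")
```

Minimizing this pushes the student toward the teacher's whole probability distribution rather than only its top-1 token, which is part of the intuition behind a student "retaining" the teacher's capabilities.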

POV: the last thing you see before you get fired.

#ChatDirector is a research prototype that brings 3D avatars and automatic layout transitions to your 2D laptop screen, transforming online meetings to be more immersive and dynamic. Check it out →

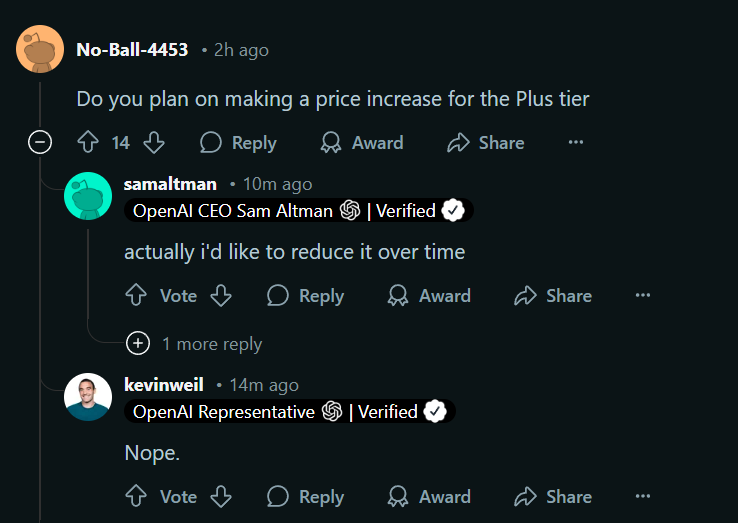

the selling point of the product is now "watch us burn more GPU runtime" because normies can't tell the difference between a $200, a $2,000, and a $20,000 tier. but unfortunately more runtime ≠ better answers, and once normies realize that, the "bubble" will pop.

AI Agenda: OpenAI Plots Charging $20,000 a Month For PhD-Level Agents. OpenAI is planning three types of agents for which it could charge $2,000 to $20,000 a month. Read more from @steph_palazzolo and @coryweinberg👇.
