Brian L Trippe

@brianltrippe

Assistant Professor at @Stanford Statistics and @StanfordData | Prev postdoc @UWproteindesign and @Columbia. PhD from @MIT.

Stanford, CA

Joined December 2016

🚨New paper! Generative models are often “miscalibrated”. We calibrate diffusion models, LLMs, and more to meet desired distributional properties. E.g. we finetune protein models to better match the diversity of natural proteins. https://t.co/2c06vD0x2D

https://t.co/9Tbhf6ml8K

Super excited to share this preprint with @nate_diamant and my advisor @brianltrippe on how we can fine-tune diffusion models, language models, and more to match known distributional properties. Check it out!👇

This is a first paper from my group, joint with @smithhenryd and @nate_diamant. Paper: https://t.co/2c06vD0x2D Code: https://t.co/9Tbhf6ml8K If you’re interested in trying it out on a new problem together, or have ideas for how to improve the method, please reach out!

github.com: Code for "Calibrating Generative Models" by Henry Smith, Nathaniel Diamant, and Brian Trippe (smithhenryd/cgm)

For language models: CGM reduces profession-specific gender bias 5× more than prior methods. Bias reduction generalizes somewhat to held-out professions – though model extrapolation remains a challenge.

For image models: CGM fixes severe class imbalance in conditional generation (e.g., more foxes, fewer lions 🦊🦁). Samples stay realistic.
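
To make "fixing class imbalance" concrete, here is a toy sketch on a three-class categorical distribution. It is not the CGM algorithm (which fine-tunes neural generative models); it only computes, in closed form, the distribution closest in KL to a base distribution whose chosen class has a target frequency. The class names, the probabilities, and the helper names `calibrate_class_frequency` and `kl` are all hypothetical.

```python
import math


def calibrate_class_frequency(base_probs, class_index, target):
    """Return the distribution p minimizing KL(p || base) with p[class_index] == target.

    Toy closed-form case only; assumes 0 < base_probs[class_index] < 1 and 0 < target < 1.
    """
    p_k = base_probs[class_index]
    # Multiplier of the exponential tilt: p(x) proportional to base(x) * exp(lam * 1[x == k]).
    lam = math.log(target / (1 - target)) - math.log(p_k / (1 - p_k))
    tilted = [
        p * math.exp(lam) if i == class_index else p
        for i, p in enumerate(base_probs)
    ]
    total = sum(tilted)
    return [p / total for p in tilted]


def kl(p, q):
    """KL(p || q) for two categorical distributions given as lists."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical base model: too many "lions", too few "foxes".
base = [0.7, 0.2, 0.1]  # lion, fox, other
calibrated = calibrate_class_frequency(base, class_index=1, target=0.4)

print([round(p, 3) for p in calibrated])
print(round(kl(calibrated, base), 4))
```

The tilt raises the fox frequency to the target while leaving the ratio between the other classes unchanged, which is what "closest to the base model" means in this toy setting.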

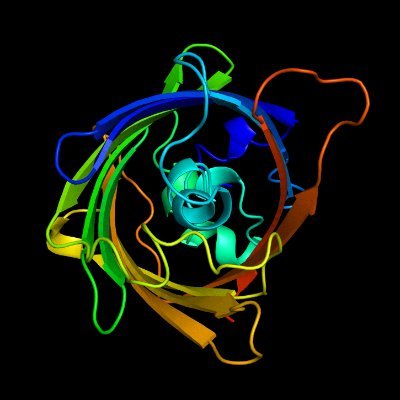

Applied to protein design: Previously, inference-time heuristics traded off between diversity and quality. CGM increases structural diversity to match proteins from nature, without sacrificing designability!

Two algorithms:
– CGM-relax: adds a miscalibration penalty
– CGM-reward: turns calibration into reward fine-tuning

They work on diffusion models (continuous & discrete!), LLMs (autoregressive & masked!), normalizing flows, and more. Only sampling and model likelihoods are needed.

We frame calibration as a constrained optimization problem: Finetune towards the model closest in KL-divergence to the base model that satisfies a set of calibration constraints.
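
One way to write this down, as a sketch rather than the paper's exact statement: the symbols $p_\theta$ (fine-tuned model), $p_{\text{base}}$ (base model), $h$ (a statistic of a sample), and $h^\star$ (its target value) are notation assumed here, and the direction of the KL divergence is also an assumption.

$$
\theta^\star \in \arg\min_{\theta} \; \mathrm{KL}\left(p_\theta \,\|\, p_{\text{base}}\right)
\quad \text{subject to} \quad
\mathbb{E}_{x \sim p_\theta}\left[h(x)\right] = h^\star
$$

Under this direction of the KL, and if the model class were unrestricted, the minimizer would be an exponential tilt of the base model, $p(x) \propto p_{\text{base}}(x)\,\exp\left(\lambda^\top h(x)\right)$, with $\lambda$ chosen so that the constraint holds.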

Why does this matter?
– Protein design models miss structural diversity
– LLMs mirror social biases present in training data
– Image models can suffer mode collapse

Calibration errors limit fairness and scientific utility, and aren’t solved by finetuning on sample-level rewards.

Super excited about this progress and next steps! Come chat with us at our poster tomorrow morning if you’re at #ICML2025

Predicting how mutations affect protein binding is key for drug design—but deep learning tools lag behind physics. StaB-ddG closes the gap: combining stability models + smart pretraining to match FoldX accuracy at >1000× the speed. Paper: https://t.co/UunWaa1CVs Code:

Actually happening at **11 AM ET**!

Next Tues (4/22) at 4PM ET, we will have @Zhuoqi_Zheng present "MotifBench: A standardized protein design benchmark for motif-scaffolding problems" Paper: https://t.co/UrxNY1ZwlK Sign up on our website for zoom links!

Interested in functional protein design or motif-scaffolding? We propose a standardized protein design benchmark, MotifBench, aimed at tackling key challenges in the field. Preprint: https://t.co/3SsdPk8p0c Code: https://t.co/VoF3zdd7BN (1/N)

Have a look at our shiny new benchmark for motif-scaffolding in computational protein design! New (and harder) tasks, plus a reproducible evaluation pipeline.

🔥 Benchmark Alert! MotifBench sets a new standard for evaluating protein design methods for motif scaffolding. Why does this matter? Reproducibility & consistent evaluation have been lacking—until now. Paper: https://t.co/i2Lk3YZ24N | Repo: https://t.co/Xoun67eE9P A thread ⬇️

We made a new, reproducible, fair (and much harder) motif scaffolding benchmark! With Zhuoqi Zheng, Bo Zhang, @DidiKieran @json_yim @_JosephWatson Hai-Feng Chen, @brianltrippe

And thanks as well to ChatGPT for turning my first banal attempt at a tweet-thread into an emoji-packed click-bait for real work! 😂

This has been a big team effort – with contributions across 5 timezones 🌍. This is thanks to my fantastic coauthors on our whitepaper:

This is a V0 pilot, and we need your input for V1!
🧩 Know of an important motif? Add it to the benchmark!
🏗️ Help improve the pipeline & metrics (sequence-based? side-chain-level?)
Let’s shape the future of motif scaffolding together!

Surprise: A modern baseline (RFdiffusion) fails on motifs scaffolded into de novo enzymes 15 years ago 🤯 This suggests modern deep learning methods aren’t always better than past methods, and there is much room for progress!

MotifBench fixes this by providing:
🧪 A standardized evaluation pipeline
🏆 30 challenging motifs as test cases
📊 Easy-to-use eval scripts and a leaderboard for method comparison
Now, results can be easily and consistently measured.

Recent progress in motif scaffolding has been exciting! But…
❌ Current evaluations are inconsistent, and results are incomparable
❌ Widely used test cases are too easy
❌ Reproducibility is difficult
That’s where MotifBench comes in.
