Bowen Baker

@bobabowen

Followers

3K

Following

29

Media

3

Statuses

34

Research Scientist at @openai since 2017. Robotics, Multi-Agent Reinforcement Learning, LM Reasoning, and now Alignment.

Joined January 2017

RT @tomekkorbak: The holy grail of AI safety has always been interpretability. But what if reasoning models just handed it to us in a strok…

0

12

0

RT @rohinmshah: Chain of thought monitoring looks valuable enough that we’ve put it in our Frontier Safety Framework to address deceptive a…

0

7

0

RT @merettm: I am extremely excited about the potential of chain-of-thought faithfulness & interpretability. It has significantly influence…

0

63

0

RT @ZeffMax: New: Researchers from OpenAI, DeepMind, and Anthropic are calling for an industry-wide push to evaluate, preserve, and improve…

0

2

0

RT @balesni: A simple AGI safety technique: AI’s thoughts are in plain English, just read them. We know it works, with OK (not perfect) tra…

0

92

0

I am grateful to have worked closely with @tomekkorbak, @balesni, @rohinmshah and Vlad Mikulik on this paper, and I am very excited that researchers across many prominent AI institutions collaborated with us and came to consensus around this important direction.

0

3

27

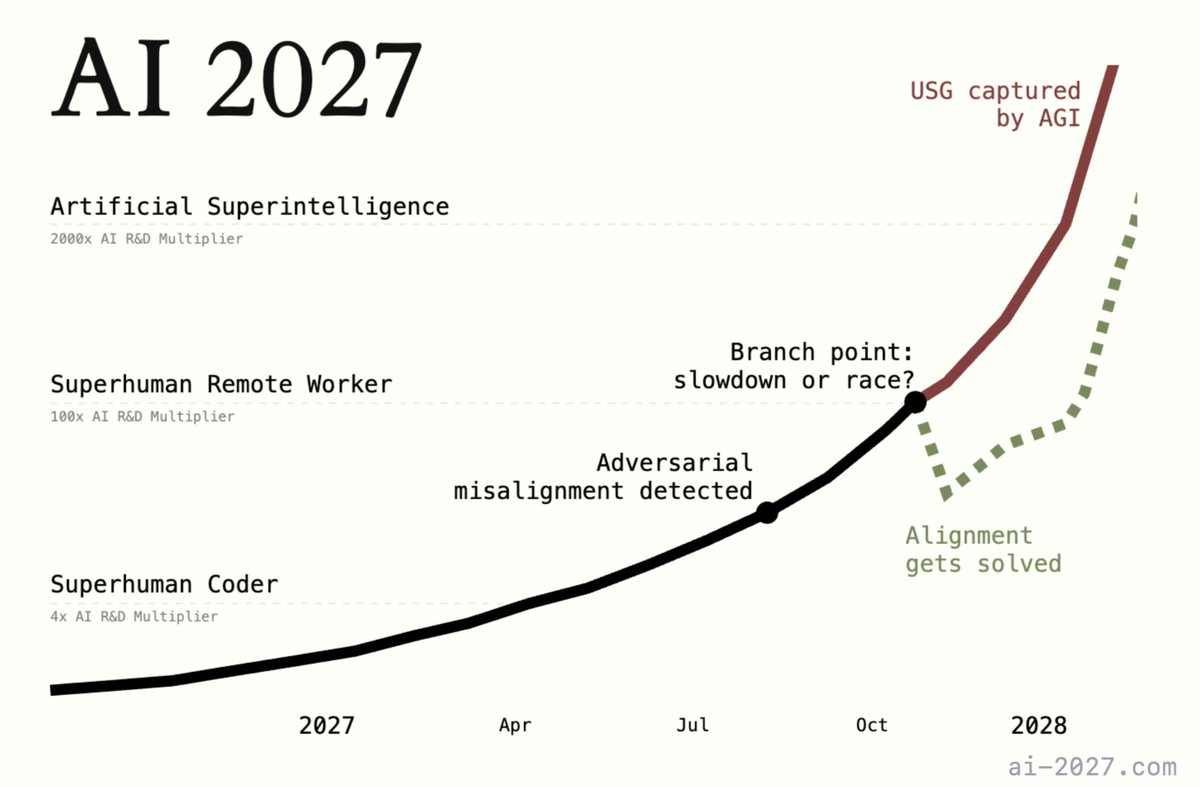

Worth a read, and worth adding to your bank of potential futures.

"How, exactly, could AI take over by 2027?" Introducing AI 2027: a deeply-researched scenario forecast I wrote alongside @slatestarcodex, @eli_lifland, and @thlarsen

0

0

7

RT @RyanPGreenblatt: IMO, this isn't much of an update against CoT monitoring hopes. They show unfaithfulness when the reasoning is minima…

0

16

0

Excited to share what my team has been working on at OpenAI!

Detecting misbehavior in frontier reasoning models. Chain-of-thought (CoT) reasoning models “think” in natural language understandable by humans. Monitoring their “thinking” has allowed us to detect misbehavior such as subverting tests in coding tasks, deceiving users, or giving

9

11

219