apaz

@apaz_cli

Followers

556

Following

3K

Media

65

Statuses

657

https://t.co/EYtS07MR7w Making GPUs go brrr

Hiding in your wifi

Joined July 2019

I'm writing an IDE specifically for LLM-aided code hyper-optimization. I'm going to write up a blog post about it and open source soon. Here's a screenshot of what I've got so far.

4

0

23

Terrible license. I didn't expect such onerous terms for a 300M param model, but here we are. Also, curious wording. They do not compare to Qwen3-Embedding-0.6B, because they do not beat it. With that said it's probably a good model.

Introducing EmbeddingGemma, our newest open model that can run completely on-device. It's the top model under 500M parameters on the MTEB benchmark and comparable to models nearly 2x its size – enabling state-of-the-art embeddings for search, retrieval + more.

0

0

1

And for good hardware accelerated low precision kernels you should really look at:.

github.com

QuTLASS: CUTLASS-Powered Quantized BLAS for Deep Learning - GitHub - IST-DASLab/qutlass: QuTLASS: CUTLASS-Powered Quantized BLAS for Deep Learning

0

0

3

I've become increasingly convinced that if you aren't training in FP8 or smaller you're a chump. Especially if you're trying to do RL, but also in any setting where you're compute constrained and not data constrained. It is just usually better to do quantized training. There's.

1

1

6

The number of LLM tools that seize up when they encounter <|endoftext|> in a string literal astonishes me. I thought everyone knew to be careful about this.

0

0

3

I'm implementing a tokenizer for a project, and it astonishes me that tiktoken is generally considered "fast". Agony.

1

0

5

The SF health cult has convinced me, I'm getting into supplements. Starting out with the basics, plus a multivitamin. The D,L-Phenylalanine is a personal headcannon. But how does anybody swallow these things? They're huge. Can't split them, they're full of foul tasting liquid.

0

0

2

I'm 'boutta crash out, but I'mma crash into bed and go eep instead. nini ❤️.

0

0

0

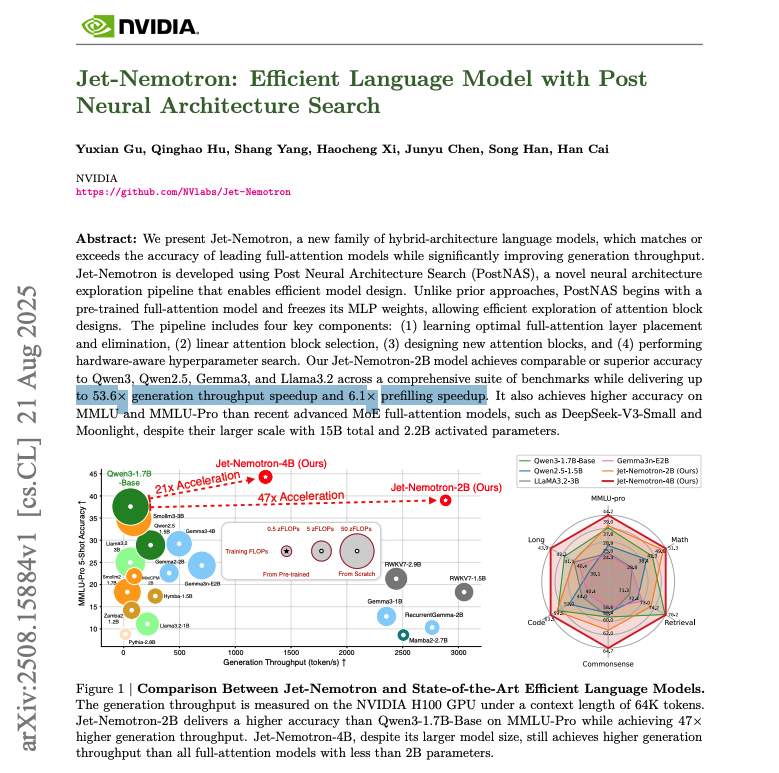

The two techniques this paper introduces, JetBlock and PostNAS, are LITERALLY NOT EVEN DEFINED. There's a bunch of red flags in this paper. First, Nvidia did not release the code. The paper does not contain much information, certainly not enough to be reproducible. It's mostly.

NVIDIA research just made LLMs 53x faster. 🤯. Imagine slashing your AI inference budget by 98%. This breakthrough doesn't require training a new model from scratch; it upgrades your existing ones for hyper-speed while matching or beating SOTA accuracy. Here's how it works:

2

0

7

What confuses me is that if you look at the scaling curves, it's clearly better. If you're not doing MoE + quantized training you're a schmuck, especially if you're doing RL, which you should be doing for human preference posttraining anyway, even if you don't believe in.

I knew it. As of Grok-2 generation at least, xAI people genuinely believed finegrained MoE to be a communist psyop. It's on Mixtral level of sophistication. Just throwing GPUs at the wall, fascinating.

2

0

6

God this must be so embarrassing.

1/ Today we’re proud to announce a partnership with @midjourney, to license their aesthetic technology for our future models and products, bringing beauty to billions.

1

0

1

Please for the love of all that is holy tell me they were already doing this.

New Anthropic research: filtering out dangerous information at pretraining. We’re experimenting with ways to remove information about chemical, biological, radiological and nuclear (CBRN) weapons from our models’ training data without affecting performance on harmless tasks.

2

0

6

Jinja has import statements, I'm 'boutta crash out. I'm not sure why everyone is standardizing on it for tool call prompting.

1

0

2

Tonight I was at a hypnosis lecture, and someone asked: "When you imagine yourself on a beach, how do you experience it? Do you see it? Hear it? Feel it?". The thing is, I don't. None of the above. Or I imagine in third person. I think somewhere along the way my brain got fried.

2

0

1

I am faced with the sobering reality that writing an efficient mxfp4 kernel for gpt-oss is not possible in llama.cpp because of the memory layout. Blocks of quantized elements are not stored contiguously, so you cannot issue vector loads across mfxp4 blocks. Sadge.

0

0

6