Anirbit

@anirbit_maths

Followers

51

Following

202

Media

11

Statuses

159

Lecturer in ML, The University of Manchester Action Editor @ TMLR Associate Editor @ ACM-TOPML

Joined January 2025

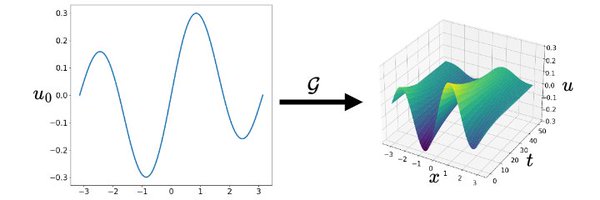

This was the slide where I outlined the 2 key questions which I think are foundational to progress with neural operators & #AI4Science. ACM IKDD #CODS2025 gave a platform for such discussions between new academics and subject stalwarts in the audience 💥

Gave my "new faculty highlight" talk at the ACM IKDD #CODS 2025 - where I outlined a vision for neural operator research - and reviewed our 2 #TMLR papers from 2024, in the theme.

0

1

1

A recent paper improved one of my PhD 1st year results by 0.63. Surprising that our upper bound held for 9 yrs 🤣 These are things only maths people get excited about 😁 Still open: Are 2 layers sufficient for a net to compute the maximum of n numbers? 💥
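For readers outside the area, here is a minimal sketch of the classical construction behind questions like this one. It relies only on the identity max(a, b) = relu(a - b) + relu(b) - relu(-b), so a single hidden ReLU layer computes the maximum of two numbers, and a pairwise tournament handles n numbers in ceil(log2 n) rounds. The function names are hypothetical, and this is not the construction from the post or from the recent paper it mentions; it only illustrates the kind of depth upper bound under discussion.

```python
import math

def relu(x):
    # Rectified linear unit.
    return max(x, 0.0)

def max2(a, b):
    # One hidden layer with three ReLU units: relu(a-b), relu(b), relu(-b).
    # relu(b) - relu(-b) equals b, so the sum equals max(a, b).
    return relu(a - b) + relu(b) - relu(-b)

def relu_max(values):
    """Pairwise tournament; returns (maximum, number of rounds used)."""
    layer = list(values)
    rounds = 0
    while len(layer) > 1:
        nxt = [max2(layer[i], layer[i + 1]) for i in range(0, len(layer) - 1, 2)]
        if len(layer) % 2 == 1:
            # The unpaired value is carried to the next round unchanged.
            nxt.append(layer[-1])
        layer = nxt
        rounds += 1
    return layer[0], rounds

value, rounds = relu_max([3, -1, 7, 2, 5])
print(value, rounds, math.ceil(math.log2(5)))
```

Each round corresponds to one hidden layer, which is why depth grows like log2(n) in this construction; the open question is whether a fixed small depth could ever suffice.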

0

0

1

Exactly! 💥 I have been thinking the same. Given the strange situation with ML reviewing, it's the AE/ACs who become pivotal to the field, having a significant hand in guiding what is valid and good research. This should have happened earlier, rather than as a crisis response.

There’s been mostly negative takes on the heavy burden placed on ICLR ACs this year. Here’s my hot take: It’s good. Our top researchers, ACs, are finally playing a real role. Every AC is now acting like an action editor at a journal. Reviewers offer opinions, ACs decide.

0

0

0

This study is quite in line with what we published at #TMLR this year, https://t.co/I2NfXm8nfQ, that there exists a careful step-length schedule for SGD which has provable neural training properties and competes with Adam. So, yes "Who is Adam?" 😁

🚨New Blog Alert: Is AdamW an overkill for RLVR? We found that vanilla SGD is 1. As performant as AdamW, 2. 36x more parameter efficient naturally. (much more than a rank 1 lora) 🤯 Looks like a "free lunch". Maybe It’s time to rethink the optimizers for RLVR 🧵

0

2

1

It's very difficult to motivate on most forums that we need *much* better theories of generalization, specifically for LLMs. This requires deep investment in mathematics. Great to see stalwarts like @tydsh and @ilyasut mention this puzzle explicitly.

Very inspiring😀. I made similar points in my SilliconValley101 interview a few weeks ago ( https://t.co/C6ikmuSLIF). The points @ilyasut made that really resonate with me: 1. We still don't know how the model generalizes well. This is a fundamental missing piece and we need to do

0

0

0

On this day, Einstein presented "The field equations of Gravitation" to the Prussian Academy of Sciences. After ten long years of turmoil he finally had the correct covariant form of the field equations of general relativity.

6

37

288

Fun fact : one of India's leading journalists sometimes publishes papers in number theory 😎

A new paper of mine, I like the form of the result: We show the product over n >0 of (Φn(z))^1/n, (n-th cyclotomic polynomial), is constant for |z| < 1. This translates a result of Ramanujan, equivalent to the prime number theorem, to infinite products. https://t.co/QncBG95NZ1
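A formal, non-rigorous way to see why such a product could be constant is sketched below. It assumes the standard Möbius-inversion formula $\Phi_n(z) = \prod_{d \mid n} (1 - z^d)^{\mu(n/d)}$ for $n \ge 2$, ignores the sign of $\Phi_1(z) = z - 1$, and rearranges a double sum without justifying convergence. The vanishing of $\sum_m \mu(m)/m$ is a classical statement equivalent to the prime number theorem; whether the linked paper argues this way is an assumption.

```latex
\sum_{n \ge 1} \frac{1}{n} \log \Phi_n(z)
  = \sum_{n \ge 1} \frac{1}{n} \sum_{d \mid n} \mu\!\left(\tfrac{n}{d}\right) \log\left(1 - z^{d}\right)
  = \sum_{d \ge 1} \frac{\log\left(1 - z^{d}\right)}{d} \sum_{m \ge 1} \frac{\mu(m)}{m}
  = 0,
  \qquad |z| < 1 .
```

Here $n = dm$, so the factor $1/n$ splits as $1/(dm)$, and the inner sum over $m$ vanishes.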

0

1

1

Thanks to @TmlrOrg for giving me the job of being an AE here. #TMLR is a mission to ensure the highest standards of reviewing are upheld for ML - as say happens when we review for the top journals in statistics and (applied) mathematics.

Mood 🙏: Feeling thankful for thoughtful/critical reviews from @TmlrOrg yet again, and ngl, secretly hoping that the mega-AI/ML conference crowd don't discover this oasis.. 🤐

0

0

1

Hello industries in UK! 🙂 Dr. Tim Tang, Dr. Miguel Beneitez and I have significant combined experience in using ML to solve fluid-dynamics problems. We have ideas on how to address ocean wave modeling via neural nets. We invite you to get in touch to work with us.

0

2

1

Our university has an official photographer. They agreed to waste some time with me 🤣

0

0

1

What is an analytically specifiable regression task with k-dimensional vector fields on the 2-sphere? 🤔 In principle we can stack any k periodic functions of (theta,phi) and create an example, but that's not "natural" - not a solution of a PDE on S^2.

1

0

1

Possibly one of the single most important contributions to ML is @soumithchintala building #PyTorch. In my PhD in neural nets I never wrote any code. In the pre-PyTorch era the entry barrier to coding nets was just too huge. Now I teach PyTorch and use it daily! 💥

Leaving Meta and PyTorch I'm stepping down from PyTorch and leaving Meta on November 17th. tl;dr: Didn't want to be doing PyTorch forever, seemed like the perfect time to transition right after I got back from a long leave and the project built itself around me. Eleven years

0

0

0

Here's the first LLM PhD project I am joining! 😊 I am helping Prof. Uli Sattler and Dr. Dominik Winterer. We have ideas we believe in about what mathematics research can be accelerated by #LLMs + #Lean - https://t.co/AijUwlfCBK -

0

0

0

I had thought I would keep my X feed fully academic. But this sporting victory by Indian women is just too big. 2nd Nov 2025 goes down in history 🔥 #CWC25

1983 inspired an entire generation to dream big and chase those dreams. 🏏 Today, our Women’s Cricket Team has done something truly special. They have inspired countless young girls across the country to pick up a bat and ball, take the field and believe that they too can lift

0

0

0

I am ready to bet, that OpenAI's historic successes can be traced back to Ilya Sutskever taking differential topology classes as an undergrad. 😁 Seriously, it's not inconceivable that one of the greatest minds in ML history also found G-bundles interesting!

Crazy bit of UofT trivia I just found: Ilya Sutskever and the mathematician Jacob Tsimerman (who solved the André-Oort conjecture) were both in the same grad differential topology class in 2005 as undergrads (labeled 37 and 25 respectively)

0

0

1

With all the discussions about #Muon and "#silversteps" etc, here's my periodic reminder that we invented delta-GClip - the *only* step-size schedule for GD that provably trains deep-nets. This is a logical benchmark for all deep-net training claims 💥

So, the next time you do deep-learning, you might consider having as a baseline the only known adaptive gradient algorithm that provably trains deep-nets, our delta-GClip. It should be a good comparison baseline for all future deep-learning algorithms, heuristics or provable :)
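As a rough illustration of what such a baseline might look like in code, here is a minimal sketch. It assumes the delta-GClip step size has the form eta * min(1, max(delta, gamma / ||grad||)), which is a recollection of the regularized gradient clipping rule and is not stated in these posts; the function names, the toy quadratic loss and all hyperparameter values are hypothetical. Please check the paper for the actual definition and guarantees.

```python
import math

def delta_gclip_step_size(grad_norm, eta, gamma, delta):
    # ASSUMED form of the rule: clip the step at gamma / ||grad||,
    # but never let the clipping factor fall below delta.
    if grad_norm == 0.0:
        return eta
    return eta * min(1.0, max(delta, gamma / grad_norm))

def minimise(grad_fn, w, eta=0.1, gamma=1.0, delta=0.01, steps=500):
    # Plain gradient descent where only the step size is adapted.
    for _ in range(steps):
        g = grad_fn(w)
        norm = math.sqrt(sum(gi * gi for gi in g))
        h = delta_gclip_step_size(norm, eta, gamma, delta)
        w = [wi - h * gi for wi, gi in zip(w, g)]
    return w

# Toy problem (hypothetical): f(w) = 0.5 * ||w - target||^2.
target = [1.0, 2.0]

def grad(w):
    return [wi - ti for wi, ti in zip(w, target)]

w_final = minimise(grad, [5.0, -3.0])
print(w_final)
```

With large gradients the update has bounded length, and the delta floor keeps the step size from shrinking to zero; on this toy quadratic the iterates move at constant speed until the gradient norm drops below gamma, then contract geometrically.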

0

0

1

In particular, in this work we identified how in an infinitely wide limit, the autoencoding landscape starts to encode the true "data dictionary" - a fully non-realizable data setup. We were kind-of close to discovering the NTK idea - but we missed it 🫥

With all the discussion about "Sparse AutoEncoders" as a way of doing #MechanisticInterpretability of LLMs, I am resharing a part of my PhD where we proved, years ago, how sparsity automatically emerges in autoencoding. @NeelNanda5

https://t.co/eN7buAJRgg

arxiv.org

In "Dictionary Learning" one tries to recover incoherent matrices $A^* \in \mathbb{R}^{n \times h}$ (typically overcomplete and whose columns are assumed to be normalized) and sparse vectors $x^*...

0

0

0