andpalmier

@andpalmier

Followers

1K

Following

3K

Media

598

Statuses

2K

Threat analyst, eternal newbie / @ASRomaEN 💛❤️ / @andpalmier.com on bsky

internet

Joined May 2019

🆕 I've released a new blog post about #KawaiiGPT, a "malicious #LLM" that popped up recently. I discuss its #jailbreak engine, how it accesses expensive LLMs for free, and some risks it exposes its users to. https://t.co/immjwYc4QF

andpalmier.com

A cool analysis and a shameless plug of repopsy

1

2

1

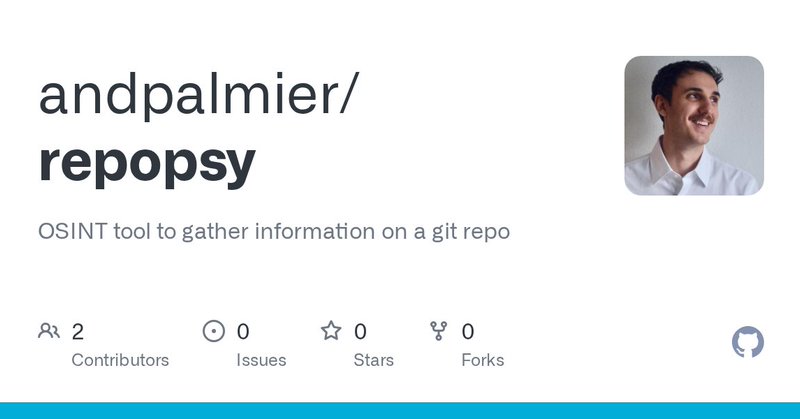

The blog also contains a shameless plug for a small project I've been working on in the last few days 👀 https://t.co/PyHbYC2HbT

github.com

OSINT tool to gather information on a git repo. Contribute to andpalmier/repopsy development by creating an account on GitHub.

0

1

1

🍺 apkingo is available on Homebrew! You can now install it with:
```
brew tap andpalmier/tap
brew install apkingo
```
Check out the repo, release notes, and docs: https://t.co/flgHmRHEmc

#Go #Homebrew #APK #APKAnalysis

0

0

3

Hey all, I've published a new blog post titled "Interview preparation for a #cti role"! You can find it here:

andpalmier.com

Ace your CTI job interview

1

0

2

This random document fell off the back of a bus. Weird. This random document which randomly fell off the back of a bus (randomly) says MITRE is no longer supporting the CVE program as of April 16th, 2025. Which is crazy, because this random document is dated April 15th, 2025.

57

183

1K

Cloudflare turns AI against itself with endless maze of irrelevant facts

arstechnica.com

New approach punishes AI companies that ignore “no crawl” directives.

3

22

75

Security researcher @gentoo_python discovered a Prompt Injection on VirusTotal. Could this be used as a form of social engineering to trick users into thinking a file is safe when it's not? File hash: 1d30bfee48043a643a5694f8d5f3d8f813f1058424df03e55aed29bf4b4c71ce

11

56

611

I’ve just pushed an update to my Search Engines AD Scanner (seads)! Feel free to try it out here: https://t.co/Ks9nyNs7ye Feedback is always appreciated! :)

github.com

Search Engines ADs scanner - spotting malvertising in search engines has never been easier! - andpalmier/seads

0

0

1

Today the US Cybersecurity and Infrastructure Security Agency (CISA) reported a backdoor on two patient monitors. As cybersecurity people, we find this deeply troubling. As malware people, we find this cool and badass. https://t.co/6Dnh8WlXgA

bleepingcomputer.com

The US Cybersecurity and Infrastructure Security Agency (CISA) is warning that Contec CMS8000 devices, a widely used healthcare patient monitoring device, include a backdoor that quietly sends...

24

164

980

⚠️ Developers, please be careful when installing Homebrew. Google is serving sponsored links to a Homebrew site clone that has a cURL command to malware. The URL for this site is one letter different than the official site.

253

2K

11K

🚨ALERT: Potential ZERO-DAY, Attackers Use Corrupted Files to Evade Detection 🧵 (1/3) ⚠️ The ongoing attack evades #antivirus software, prevents uploads to sandboxes, and bypasses Outlook's spam filters, allowing the malicious emails to reach your inbox The #ANYRUN team

4

150

462

END OF THE THREAD! Check out the original blog post here: https://t.co/UNdjVb0IfG If that made you curious about AI Hacking, be sure to check out the CTF challenges by @dreadnode at

andpalmier.com

An n00b overview of the main Large Language Models jailbreaking strategies

0

0

0

🤖 LLMs vs LLMs: It shouldn't really come as a big surprise that some methods for attacking LLMs use LLMs themselves. Here are two examples: - PAIR: an approach using an attacker LLM - IRIS: inducing an LLM to self-jailbreak ⬇️

1

0

0

📝 Prompt rewriting: adding a layer of linguistic complexity! This class of attacks uses encryption, translation, ascii art and even word puzzles to bypass the LLMs' safety checks. ⬇️

1

0

0

💉 Prompt injection: embed malicious instructions in the prompt. According to OWASP, prompt injection is the most critical security risk for LLM applications. They break this class of attacks down into 2 categories: direct and indirect. Here is a summary of indirect attacks: ⬇️

1

0

0

😈 Role-playing: attackers ask the LLM to act as a specific persona or as part of a scenario. A common example is the (in?)famous DAN (Do Anything Now). These attacks are probably the most common in the real world, as they often don't require a lot of sophistication. ⬇️

1

0

0

We interact with (and therefore attack) LLMs mainly using language, so let's start from there. I used this dataset https://t.co/jwL887zAtu of jailbreak #prompts to create this wordcloud. I believe it gives a sense of "what works" in these attacks! ⬇️

1

0

0

Before we dive in: I’m *not* an AI expert! I did my best to understand the details and summarize the techniques, but I’m human. If I’ve gotten anything wrong, just let me know! :) ⬇️

1

0

0