Aaron Havens

@aaronjhavens

Followers: 294

Following: 4K

Media: 9

Statuses: 52

PhD student at @ECEILLINOIS. Control theory, ML and Autonomy. Previously @AIatMeta, @PreferredNet and @TuSimpleAI

Champaign, IL

Joined April 2011

New paper out with FAIR (+FAIR-Chemistry): Adjoint Sampling: Highly Scalable Diffusion Samplers via Adjoint Matching. We present a scalable method for sampling from unnormalized densities beyond classical force fields. 📄:

1

18

110

RT @guanhorng_liu: Adjoint-based diffusion samplers have simple & scalable objectives w/o impt weight complication. Like many, though, they….

0

39

0

RT @auhcheng: Excited to share Quetzal, a simple but scalable model for building 3D molecules atom-by-atom. 🐉 Named after Quetzalcoatl, th….

0

31

0

RT @RickyTQChen: Reward-driven algorithms for training dynamical generative models significantly lag behind their data-driven counterparts….

0

6

0

RT @RickyTQChen: We are presenting 3 orals and 1 spotlight at #ICLR2025 on two primary topics: On generalizing the data-driven flow match….

0

28

0

This work was done during a PhD internship at FAIR NYC, thanks to my amazing supervisors @brandondamos, @RickyTQChen and Brian Karrer. Special thanks to our core contributors: @bkmi13, @bingyan4science, @xiangfu_ml, @guanhorng_liu (and of course @cdomingoenrich).

0

1

7

RT @RickyTQChen: Want to learn continuous & discrete Flow Matching? We've just released: 📙 A guide covering Flow Matching basics & advance….

0

160

0

Hi friends. I will be at the NYC FAIR office for the next 6 months as a research scientist intern. I’ll be working on all things control theory x generative modeling under the amazing @brandondamos. Please feel free to reach out if you’re around NYC!

0

0

28

RT @angelaschoellig: Our review paper on **Safe Learning in Robotics** is out, including open-source code. Wondering how model-driven and d….

0

25

0

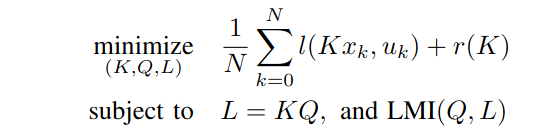

(1/6) Happy to share one of the first papers of my PhD which was done last fall: "On Imitation Learning of Linear Control Policies: Enforcing Stability and Robustness Constraints via LMI Conditions" :

arxiv.org

When applying imitation learning techniques to fit a policy from expert demonstrations, one can take advantage of prior stability/robustness assumptions on the expert's policy and incorporate such...

2

1

6