Saksham Suri

@_sakshams_

Followers

771

Following

2K

Media

13

Statuses

130

Research Scientist @AiatMeta. Previously PhD @UMDCS, @MetaAI, @AmazonScience, @USCViterbi, @IIITDelhi, @IBMResearch. #computervision #deeplearning

California, USA

Joined January 2015

Drop by our oral presentation and poster session to chat and learn about our video tokenizer with learned autoregressive prior. #ICLR2025.

I will be presenting LARP at ICLR today. 🎤 Oral: 11:18 AM – 11:30 AM (UTC+8), Oral Session 3C.🖼️ Poster: 3:00 PM – 5:30 PM (UTC+8), Hall 3 + Hall 2B, Poster #162. You’re very welcome to drop by for discussion and feedback!.

0

1

4

RT @AIatMeta: Today is the start of a new era of natively multimodal AI innovation. Today, we’re introducing the first Llama 4 models: Lla….

0

2K

0

📢 Excited to announce LARP has been accepted to #ICLR2025 ! 🇸🇬 .Code and models are publicly available. Project page:

🚀 Introducing LARP: Tokenizing Videos with a Learned Autoregressive Generative Prior! 🌟. 📄 Paper: 📜 Project page: 🔗 Code: Collaborators: @_sakshams_ , Yixuan Ren, @HaoChen_UMD , @abhi2610 . #GenAI

1

2

34

Checkout Efficient Track Anything from our team. 2x faster than SAM2 on A100 .> 10 FPS on iPhone 15 Pro Max. Paper: demo:

🚀Excited to share our Efficient Track Anything. It is small but mighty, >2x faster than SAM2 on A100 and runs > 10 FPS on iPhone 15 Pro Max. How’d we do it? EfficientSAM + Efficient Memory Attention!. Paper: Project (demo): with:

0

0

10

Checkout LARP, our work on creating a video tokenizer which is trained with an autoregressive generative prior. Code and models are open sourced!.

🚀 Introducing LARP: Tokenizing Videos with a Learned Autoregressive Generative Prior! 🌟. 📄 Paper: 📜 Project page: 🔗 Code: Collaborators: @_sakshams_ , Yixuan Ren, @HaoChen_UMD , @abhi2610 . #GenAI

0

1

9

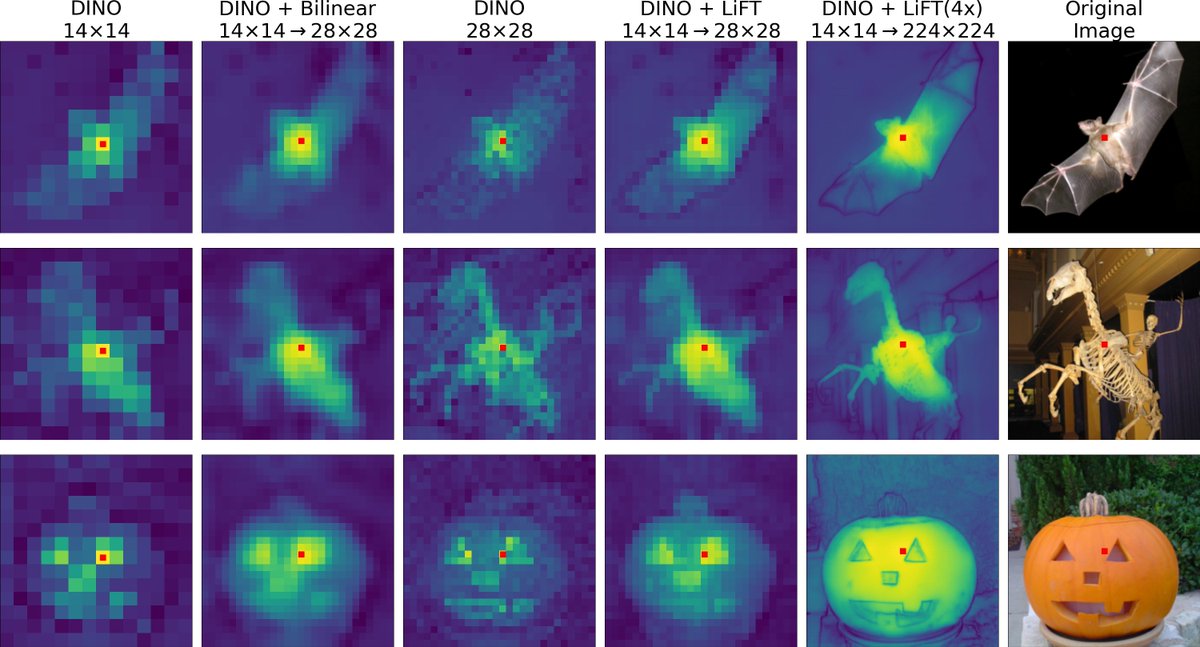

We are happy to release our LiFT code and pretrained models! 📢. Code: Project Page: Here are some super spooky super resolved feature visualizations to make the season scarier 🎃. Coauthors: @MatthewWalmer @kamalgupta09 @abhi2610

We introduce LiFT, an easy to train, lightweight, and efficient feature upsampler to get dense ViT features without the need to retrain the ViT. Visit our poster @eccvconf #eccv2024 in Milan on Oct 1st (Tuesday), 16:30 (local), Poster: 79. Project Page:

2

46

243

RT @YoungXiong1: 🚨VideoLLM from Meta!🚨.LongVU: Spatiotemporal Adaptive Compression for Long Video-Language Understanding. 📝Paper: https://t….

0

73

0

Excited to announce that I have joined @AIatMeta as a Research Scientist where I will be working on model optimization. Also I will be at ECCV to present my work and am excited to meet and learn from everyone. Reach out if you are attending and would like to chat. Ciao 🇮🇹.

17

6

211

That's a wrap! Happy to share that I have defended my thesis. Thankful for the insightful questions and feedback from my committee members @abhi2610,@zhoutianyi, @davwiljac, Prof. Espy-Wilson, and Prof. Andrew Zisserman.

10

0

82

RT @AnthropicAI: Today, we're announcing Claude 3, our next generation of AI models. The three state-of-the-art models—Claude 3 Opus, Cla….

0

2K

0

RT @StabilityAI: Announcing Stable Diffusion 3, our most capable text-to-image model, utilizing a diffusion transformer architecture for gr….

0

1K

0