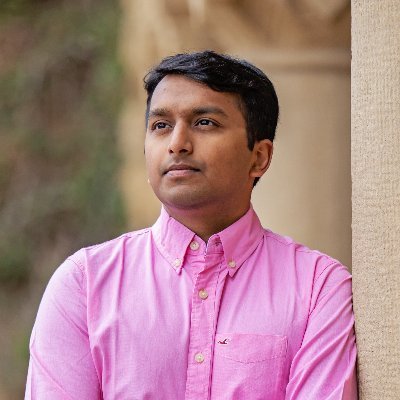

Lama Ahmad لمى احمد

@_lamaahmad

Followers: 5K

Following: 7K

Media: 175

Statuses: 2K

@OpenAI. Views my own.

San Francisco, CA

Joined December 2014

Third party testing has long been part of our safety work. Our new blog shows how we collaborate on capability evaluations, methodology reviews, and expert probing that brings in domain expertise — all strengthening the broader safety ecosystem.

openai.com

Our approach to third party assessments for frontier AI.

8

25

203

Even before @mmitchell_ai recently raised this discussion, I've had conversation after conversation with students & new grads struggling with this exact dilemma. I want to help! Here's a live thread of AI-related opportunities for those looking to do good & make (enough) money:

9

24

136

We’re adding two new models to the gpt-oss family today! With gpt-oss-safeguard, you can apply your own safety policies at inference time and see how the model reasons through them step-by-step. These models are fine-tuned from gpt-oss and available under Apache-2.0.

Now in research preview: gpt-oss-safeguard Two open-weight reasoning models built for safety classification. https://t.co/4rZLGhBO1w

5

15

151

🧵Today we’re sharing more details about improvements of the default GPT-5 model in responding to sensitive conversations around potential mental health emergencies and emotional reliance. These changes reflect the careful work of many teams within OpenAI and close consultation

Earlier this month, we updated GPT-5 with the help of 170+ mental health experts to improve how ChatGPT responds in sensitive moments—reducing the cases where it falls short by 65-80%. https://t.co/hfPdme3Q0w

260

77

610

AI companies need adaptable, fit-for-purpose approaches to working with third parties on capability and risk assessments. Our collaboration with METR is a strong example of that.

Check out the brief report, now published, on our blog for more details about our review of OpenAI's gpt-oss risk assessment methodology:

0

1

9

Excited to share details on two of our longest running and most effective safeguard collaborations, one with Anthropic and one with OpenAI. We've identified—and they've patched—a large number of vulnerabilities and together strengthened their safeguards. 🧵 1/6

8

62

300

We’ve been working with the US Center for AI Standards & Innovation and the UK AI Security Institute to raise the bar on AI security. From joint red-teaming to end-to-end testing, these voluntary collaborations are already delivering real-world security improvements for widely

openai.com

An update on our collaboration with US and UK research and standards bodies for the secure deployment of AI.

88

102

1K

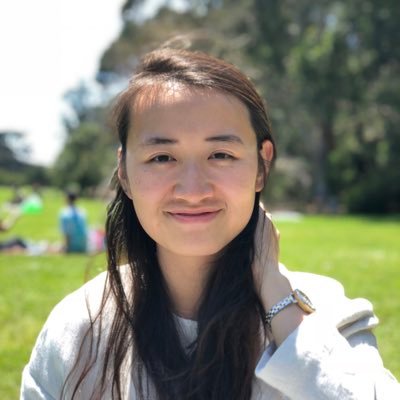

I am looking for students and collaborators for several new projects at the intersection of AI, the economy, and society. If you are interested, please fill out this form! https://t.co/HB0ERyHaPw

2

18

60

It’s rare for competitors to collaborate. Yet that’s exactly what OpenAI and @AnthropicAI just did—by testing each other’s models with our respective internal safety and alignment evaluations. Today, we’re publishing the results. Frontier AI companies will inevitably compete on

openai.com

OpenAI and Anthropic share findings from a first-of-its-kind joint safety evaluation, testing each other’s models for misalignment, instruction following, hallucinations, jailbreaking, and more—hig...

112

386

2K

No single person or institution should define ideal AI behavior for everyone. Today, we’re sharing early results from collective alignment, a research effort where we asked the public about how models should behave by default. Blog here: https://t.co/WT9REAznD7

openai.com

We surveyed over 1,000 people worldwide on how our models should behave and compared their views to our Model Spec. We found they largely agree with the Spec, and we adopted changes from the disagr...

79

106

579

We just launched GPT-5! There has been an unbelievable amount of safety work that went into this model, from factuality, to deception, to brand new safety training techniques. More details and plots in the 🧵

88

93

850

gotta say I'm excited about this: GPT-5 chain of thought access for external assessors (@apolloaievals too!) is an evaluation win (on the heels of the process audit that @METR_Evals engaged with us on for gpt-oss!) that I'm proud of us @OpenAI for and thankful for the collabs!

The good news: due to increased access (plus improved evals science) we were able to do a more meaningful evaluation than with past models, and we think we have substantial evidence that this model does not pose a catastrophic risk via autonomy / loss of control threat models.

0

5

35

@fmf_org @EstherTetruas @SatyaScribbles Ok, back to my honeymoon! Just so proud of this work and thank you especially to @CedricWhitney for leading the way while I’m out🦒

2

0

14

And a nice complement, work from @fmf_org in collaboration with @EstherTetruas, @SatyaScribbles, and many others! https://t.co/rYs7erzqkk

🧵 NEW TECHNICAL REPORT (1 of 3) Our latest technical report outlines practices for implementing, where appropriate, rigorous, secure, and fit-for-purpose third-party assessments. Read more here:

1

1

8

New model, new form of external assessment!

We adversarially fine-tuned gpt-oss-120b and evaluated the model. We found that even with robust fine-tuning, the model was unable to achieve High capability under our Preparedness Framework. Our methodology was reviewed by external experts, marking a step toward new safety

2

2

57

On a more serious note - I am proud to be part of a team that faces the hardest problems head on, with care and rigor.

We’ve activated our strongest safeguards for ChatGPT Agent. It’s the first model we’ve classified as High capability in biology & chemistry under our Preparedness Framework. Here’s why that matters–and what we’re doing to keep it safe. 🧵

0

0

8

Just a little too late for planning my wedding! Taking a break for a month! 💕

ChatGPT can now do work for you using its own computer. Introducing ChatGPT agent—a unified agentic system combining Operator’s action-taking remote browser, deep research’s web synthesis, and ChatGPT’s conversational strengths.

4

1

71

We’ve activated our strongest safeguards for ChatGPT Agent. It’s the first model we’ve classified as High capability in biology & chemistry under our Preparedness Framework. Here’s why that matters–and what we’re doing to keep it safe. 🧵

We’ve decided to treat this launch as High Capability in the Biological and Chemical domain under our Preparedness Framework, and activated the associated safeguards. This is a precautionary approach, and we detail our safeguards in the system card. We outlined our approach on

86

131

1K