Vijay

@__tensorcore__

Followers

2K

Following

8K

Media

59

Statuses

1K

MLIR, CUTLASS, Tensor Core arch @NVIDIA. Mechanic @hpcgarage. Exercise of any 1st amendment rights is for none other than myself.

Joined July 2015

🚨🔥 CUTLASS 4.0 is released 🔥🚨
pip install nvidia-cutlass-dsl
4.0 marks a major shift for CUTLASS: towards native GPU programming in Python
https://t.co/pBLMpQAXHW

16

86

424

@vega_myhre nvfp4 is huge, but low level control over NVSwitch domain, cuteDSL and torch integration, and GB300s when i need to scale up. its hard for me to find a place where TPUs win at this moment. Jax is amazing but xla is making it hard to work with and mosaic is still young

3

4

63

Today we are releasing our first public beta of Nsight Python! The goal is to simplify the life of a Python developer by providing a Pythonic way to analyze your kernel code! Check it out, provide feedback! Nsight Python — nsight-python

10

49

343

BREAKING: The CUDA moat has just expanded again! PyTorch Compile/Inductor can now target NVIDIA Python CuTeDSL in addition to Triton. This enables 2x faster FlexAttention compared to Triton implementations. We explain below 👇 As we explained in our April 2025 AMD 2.0 piece,

20

78

541

Quick life update. Moved to California to work at NVIDIA. Oh I have so much to learn

160

67

3K

Excited to share what friends and I have been working on at @Standard_Kernel We've raised from General Catalyst (@generalcatalyst), Felicis (@felicis), and a group of exceptional angels. We have some great H100 BF16 kernels in pure CUDA+PTX, featuring: - Matmul 102%-105% perf

52

92

1K

Today Thinking Machines Lab is launching our research blog, Connectionism. Our first blog post is “Defeating Nondeterminism in LLM Inference” We believe that science is better when shared. Connectionism will cover topics as varied as our research is: from kernel numerics to

235

1K

8K

“TogetherAI’s chief scientist @tri_dao announced Flash Attention v4 … uses CUTLASS CuTe Python DSL” As always, thanks for being the tip of the spear and pushing us along too 💚

TogetherAI's Chief Scientist @tri_dao announced Flash Attention v4 at the Hot Chips conference, which is up to 22% faster than the attention kernel implementation from NVIDIA's cuDNN library. Tri Dao was able to achieve this with 2 key algorithmic changes. Firstly, it uses a new online

2

4

131

Using CUTLASS CuTe-DSL, TogetherAI's Chief Scientist @tri_dao announced that he has written kernels that are 50% faster than NVIDIA's latest cuBLAS 13.0 library for small K reduction dim shapes on Blackwell during today's Hot Chips conference. His kernels beat cuBLAS by using 2

7

23

287

Cute-DSL is basically perfect (for me). thank you nvidia and cutlass team. i no longer need to wait for long compile times because i underspecified a template param. i hope everyone involved gets an extra chicken nugget in their happy meal

8

6

145

On Sep 6 in NYC, this won't be your typical hackathon where you do your own thing in a corner and then present at the end of the day. You'll deploy real models to the market, trades will happen, chaos should be expected. The fastest model is great but time to market matters more.

5

15

99

arXiv gpu kernel researcher be like:
• liquid nitrogen cooling their benchmark GPU
• overclock their H200 to 1000W "Custom Thermal Solution CTS"
• nvidia-smi boost-slider --vboost 1
• nvidia-smi -i 0 --lock-gpu-clocks=1830,1830
• use specially binned GPUs where the number

5

7

121

Part 2: https://t.co/tVfBjAkXwR Covers the design of CUTLASS 3.x itself and how it builds a 2 layer GPU microkernel abstraction using CuTe as the foundation.

developer.nvidia.com

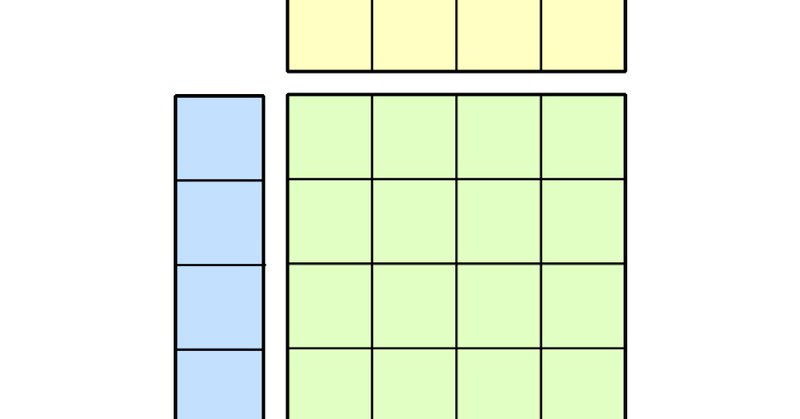

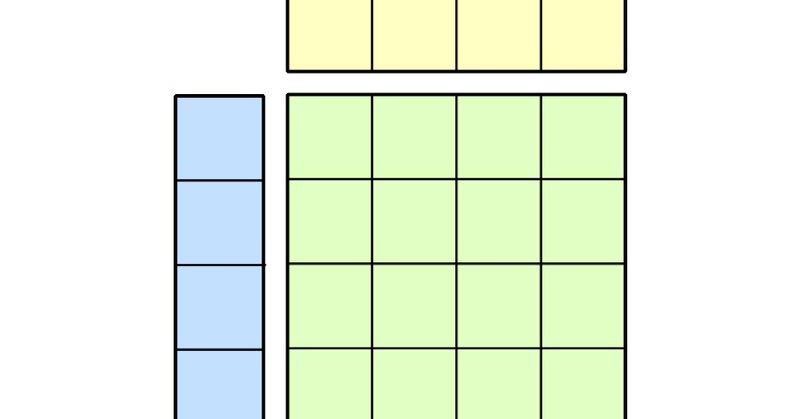

GEMM optimization on GPUs is a modular problem. Performant implementations need to specify hyperparameters such as tile shapes, math and copy instructions, and warp-specialization schemes.

0

4

18

CUTLASS 4.1 is now available, which adds support for ARM systems (GB200) and block scaled MMAs

🚨🔥 CUTLASS 4.0 is released 🔥🚨
pip install nvidia-cutlass-dsl
4.0 marks a major shift for CUTLASS: towards native GPU programming in Python
https://t.co/pBLMpQAXHW

4

12

122

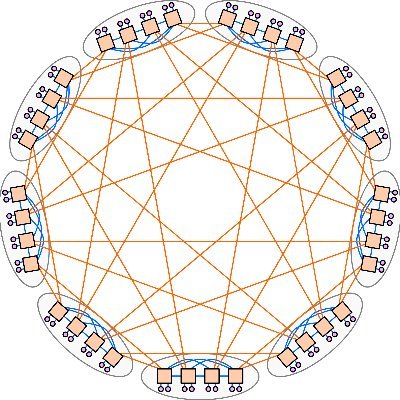

Hierarchical layout is super elegant. Feels like the right abstraction for high performance GPU kernels. FlashAttention 2 actually started bc we wanted to rewrite FA1 in CuTe

https://t.co/UjezOE9WEJ marks the start of a short series of blogposts about CUTLASS 3.x and CuTe that we've been meaning to write for years. There are a few more parts to come still, hope you enjoy!

1

12

154

CuTe is such an elegant library that we stopped working on our own system and wholeheartedly adopted CUTLASS for vLLM in the beginning of 2024. I can happily report that was a very wise investment! Vijay and co should be so proud of the many strong OSS projects built on top 🥳

https://t.co/UjezOE9WEJ marks the start of a short series of blogposts about CUTLASS 3.x and CuTe that we've been meaning to write for years. There are a few more parts to come still, hope you enjoy!

0

4

87

https://t.co/UjezOE9WEJ marks the start of a short series of blogposts about CUTLASS 3.x and CuTe that we've been meaning to write for years. There are a few more parts to come still, hope you enjoy!

developer.nvidia.com

In the era of generative AI, utilizing GPUs to their maximum potential is essential to training better models and serving users at scale. Often, these models have layers that cannot be expressed as…

5

53

301

This is what the internet was made for 🥹

0

0

12

Cosmos-Predict2 meets NATTEN. We just released variants of Cosmos-Predict2 where we replace most self attentions with neighborhood attention, bringing up to 2.6X end-to-end speedup, with minimal effect on quality! https://t.co/S8qHyfcCTS (1/5)

1

8

40

Getting mem-bound kernels to speed-of-light isn't a dark art, it's just about getting a couple of details right. We wrote a tutorial on how to do this, with code you can directly use. Thanks to the new CuTe-DSL, we can hit speed-of-light without a single line of CUDA C++.

🦆🚀QuACK🦆🚀: new SOL mem-bound kernel library without a single line of CUDA C++ all straight in Python thanks to CuTe-DSL. On H100 with 3TB/s, it performs 33%-50% faster than highly optimized libraries like PyTorch's torch.compile and Liger. 🤯 With @tedzadouri and @tri_dao

7

57

523