Zhiqiu Lin

@ZhiqiuLin

Followers

523

Following

162

Media

22

Statuses

66

PhD Student at Carnegie Mellon University | Computer Vision and Language | Generative AI

Pittsburgh

Joined July 2017

📷 Can AI understand camera motion like a cinematographer?. Meet CameraBench: a large-scale, expert-annotated dataset for understanding camera motion geometry (e.g., trajectories) and semantics (e.g., scene contexts) in any video – films, games, drone shots, vlogs, etc. Links

10

35

188

RT @tarashakhurana: Excited to share recent work with @kaihuac5 and @RamananDeva where we learn to do novel view synthesis for dynamic scen….

0

28

0

RT @roeiherzig: 🚀 Excited to share that our latest work on Sparse Attention Vectors (SAVs) has been accepted to @ICCVConference — see you a….

0

5

0

RT @Haoyu_Xiong_: Your bimanual manipulators might need a Robot Neck 🤖🦒. Introducing Vision in Action: Learning Active Perception from Huma….

0

86

0

RT @qsh_zh: 🚀 Introducing Cosmos-Predict2!. Our most powerful open video foundation model for Physical AI. Cosmos-Predict2 significantly im….

0

62

0

RT @JacobYeung: 1/6 🚀 Excited to share that BrainNRDS has been accepted as an oral at #CVPR2025!. We decode motion from fMRI activity and u….

0

13

0

RT @jasonyzhang2: Delighted to share what our team has been working on at Google!. After working for so long on sparse-view 3D, it's exciti….

0

33

0

RT @anishmadan23: 🚨 The 2nd iteration of our @CVPR Foundational Few-Shot Object Detection Challenge is LIVE!. Can your model think like an….

0

16

0

RT @i_ikhatri: Just over a month left to submit to this year's Argoverse 2 challenges! Returning from previous years, are our motion foreca….

0

9

0

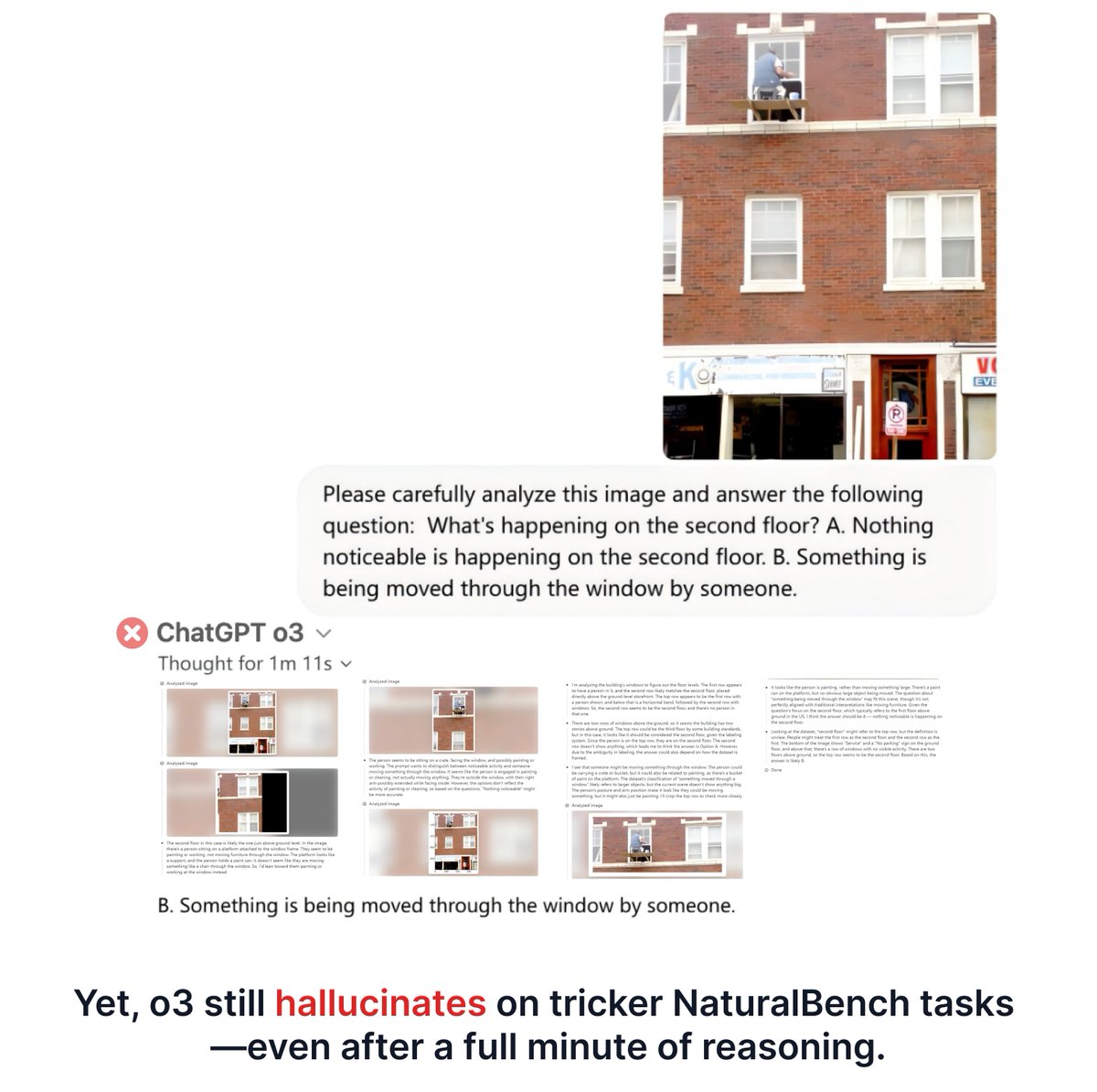

Leaderboard update: GPT‑4o (40%) < Gemini‑2.5 (44%) < GPT‑o3 (55%). Hope to see future models push the limits on #NaturalBench!

6

5

70

Fresh GPT‑o3 results on our vision‑centric #NaturalBench (NeurIPS’24) benchmark! 🎯 Its new visual chain‑of‑thought—by “zooming in” on details—cracks questions that still stump GPT‑4o. Yet vision reasoning isn’t solved: o3 can still hallucinate even after a full minute of

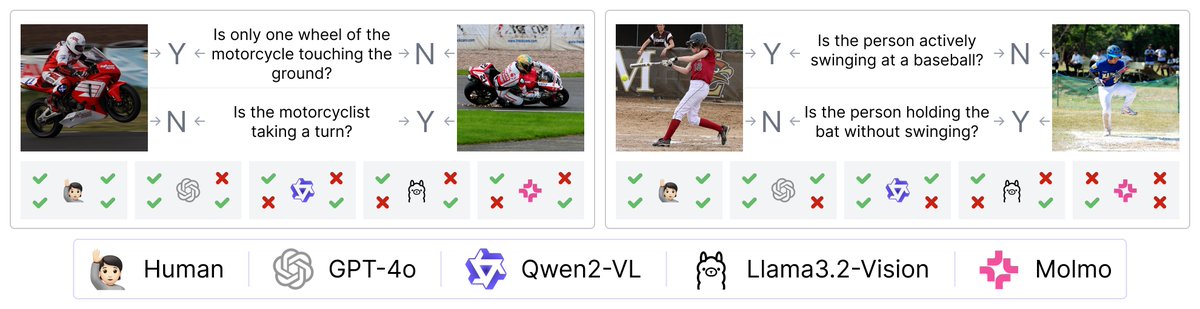

🚀 Make Vision Matter in Visual-Question-Answering (VQA)!. Introducing NaturalBench, a vision-centric VQA benchmark (NeurIPS'24) that challenges vision-language models with pairs of simple questions about natural imagery. 🌍📸. Here’s what we found after testing 53 models

3

23

108

RT @chancharikm: 🎯 Introducing Sparse Attention Vectors (SAVs): A breakthrough method for extracting powerful multimodal features from Larg….

0

39

0