Lizhang Chen

@Tim38463182

Followers: 73

Following: 73

Media: 0

Statuses: 53

Student researcher @GoogleResearch. Ph.D. student at UT Austin.

Pasadena, CA

Joined November 2019

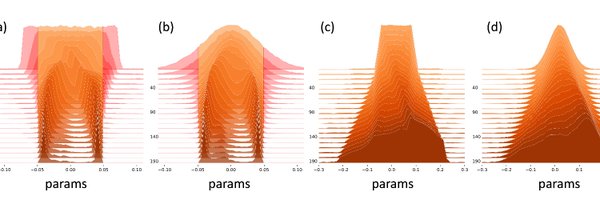

RT @aaron_defazio: Why do gradients increase near the end of training? Read the paper to find out! We also propose a simple fix to AdamW t…

0

74

0

RT @ljb121002: Excited to introduce Prior-Informed Preference Alignment (PIPA)🎶! 🚀 Works anywhere DPO/KTO does, with a 3-10% performance…

0

15

0

RT @cranialxix: If you are interested in learning/using flow/diffusion models, please check this thread from the original author of rectifi…

0

3

0

RT @lqiang67: 🚀 New Rectified Flow materials (WIP)! 📖 Tutorials: 💻 Code: 📜 Notes: https://…

github.com

code based for rectified flow. Contribute to lqiang67/rectified-flow development by creating an account on GitHub.

0

42

0

RT @DrJimFan: We are living in a timeline where a non-US company is keeping the original mission of OpenAI alive - truly open, frontier res…

0

2K

0

RT @wellingmax: Truly excellent piece on entropy. Source: Quanta Magazine

quantamagazine.org

Exactly 200 years ago, a French engineer introduced an idea that would quantify the universe’s inexorable slide into decay. But entropy, as it’s currently understood, is less a fact about the world...

0

73

0

RT @_clashluke: Cautioning gives substantial speedups (see quoted tweet) with a one-line change but also increases the implicit step size…

0

5

0

RT @XixiHu12: 🚀 Excited to share AdaFlow at #NeurIPS2024! A fast, adaptive method for training robots to act with one-step efficiency—no d…

0

6

0

"this boost appears more consistent than some of the new optimizers -- it's a relatively small addition that can be made to most existing optimizers".

One of the last minute papers I added support for that delayed this release was 'Cautious Optimizers'. As I promised, I pushed some sets of experiments at … Consider me impressed, this boost appears more consistent than some of the new optimizers -- it's a…

1

0

1

RT @giffmana: Nice, independent verification of the "cautious" one-line change to optimizers by Ross, on separate problems. Seems to consis…

0

16

0

RT @wightmanr: I was going to publish a new timm release yesterday with significant Optimizer updates: Adopt, Big Vision Adafactor, MARS, a…

0

12

0

RT @KyleLiang5: TLDR: 1⃣ line modification, satisfaction (theoretically and empirically) guaranteed 😀😀😀. Core idea: 🚨 Do not update if you ar…

0

37

0

RT @konstmish: OpenReview's LaTeX parser seems to be quite bad and it makes it very painful to be a reviewer sometimes. For example: "Assum…

0

2

0