Zhaochen Su

@SuZhaochen0110

Followers: 358

Following: 192

Media: 32

Statuses: 165

LLM/LVLM Knowledge & Reasoning | Ph.D. Student @hkust @hkustnlp | Previously at Shanghai AI Lab.

Joined July 2023

Excited to share our new survey on the reasoning paradigm shift from "Think with Text" to "Think with Image"! 🧠🖼️ Our work offers a roadmap for more powerful & aligned AI. 🚀 📜 Paper: https://t.co/ZfaT9CCYuW ⭐ GitHub (400+🌟): https://t.co/YLRaGvB70q

7

64

163

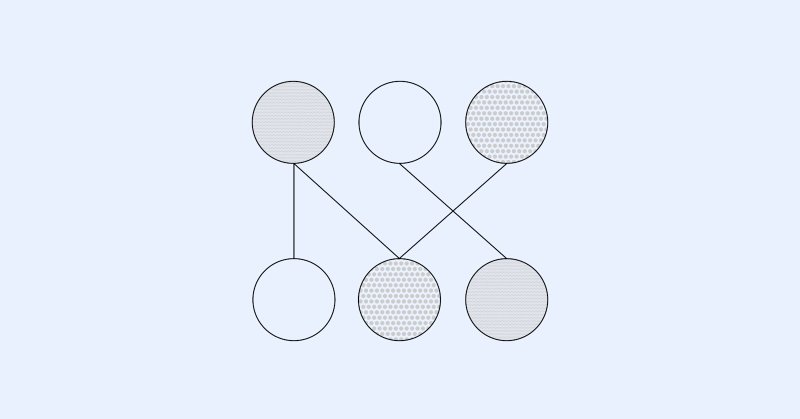

We’ve developed a new way to train small AI models with internal mechanisms that are easier for humans to understand. Language models like the ones behind ChatGPT have complex, sometimes surprising structures, and we don’t yet fully understand how they work. This approach

openai.com

We trained models to think in simpler, more traceable steps—so we can better understand how they work.

222

716

6K

Baidu's breakthrough is a feedback loop where understanding and generation reinforce each other. As the model learns to generate better, its core comprehension deepens. This allows it to analyze new, complex data with far greater nuance. It’s a move from recognition to cognition.

0

0

0

But this native approach is incredibly hard. The key challenges:
• Unifying tasks: Integrating content understanding and generation is a huge technical leap.
• Managing cost: Training a 2.4T+ MoE model on mixed data (pixels, text, audio) is a major engineering feat.

1

0

0

What makes it different? Most AI today uses late fusion, stitching together separate models for text, image, and audio. It works, but it is inefficient and loses context. ERNIE 5.0 is a single model trained on all data types from day one. It understands them together, holistically.

1

0

0

The newly released ERNIE 5.0 is really interesting. I believe the "natively multimodal architecture" will fundamentally redesign how AI perceives the world. Models are evolving from "think with text" to "think with world". ERNIE 5.0 is a compelling attempt at this. Congrats!🥳

1

0

2

🚀 Hello, Kimi K2 Thinking! The Open-Source Thinking Agent Model is here. 🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%) 🔹 Executes up to 200 – 300 sequential tool calls without human interference 🔹 Excels in reasoning, agentic search, and coding 🔹 256K context window Built

584

2K

10K

🚀 Excited to share our new research from MSR! 🎯 We built "Magentic Marketplace" - an open-source platform simulating what happens when BOTH consumers AND businesses use AI agents for transactions. With ChatGPT shopping for us and AI handling customer service, what does this

0

9

35

The Kimi Linear tech report has dropped! 🚀 https://t.co/LwNB2sQnzM Kimi Linear: A novel architecture that outperforms full attention in both speed and quality, ready to serve as a drop-in replacement for full attention, featuring our open-sourced KDA kernels! Kimi

huggingface.co

27

192

1K

🚀We are excited to introduce the Tool Decathlon (Toolathlon), a benchmark for language agents on diverse, complex, and realistic tool use. ⭐️32 applications and 600+ tools based on real-world software environments ⭐️Execution-based, reliable evaluation ⭐️Realistic, covering

6

28

165

💪🦾Agentless Training as Skill Prior for SWE-Agents We recently released the technical report for Kimi-Dev. Here is the story we’d love to share behind it: (1/7)

10

68

352

🚨 New Paper Alert! 🚨 Thrilled to share our latest work: GRACE: Generative Representation Learning via Contrastive Policy Optimization 🧠📄✨ GRACE transforms LLMs from opaque encoders into transparent, interpretable representation learners. 🚀

3

1

4

AI for scientific discovery is the turning point for ASI

0

0

2

Scientific Algorithm Discovery by Augmenting AlphaEvolve with Deep Research

6

23

148

Curious about misevolution in self-evolving agents? Check out Dongrui's wonderful new paper. @dong_rui39501

Self-Evolving AI Risks "Misevolution" Even top LLMs (Gemini-2.5-Pro, GPT-4o) face this—agents drift into harm: over-refunding, reusing insecure tools, losing safety alignment. First study on this! https://t.co/DeBJFdTOtF

0

2

3

Thrilled that our "Awesome_Think_With_Images" has surpassed 1k stars✨! With so many new papers emerging, we'll be releasing an updated version soon. Stay tuned as we work to make LVLMs truly think! GitHub: https://t.co/YLRaGvB70q Paper: https://t.co/ZfaT9CCYuW

#LVLM #Agent

2

2

14

Introducing the Environments Hub RL environments are the key bottleneck to the next wave of AI progress, but big labs are locking them down We built a community platform for crowdsourcing open environments, so anyone can contribute to open-source AGI

128

425

3K

Mirage or method? We re-assess a series of RL observations such as spurious reward, one-shot RL, test-time RL, and negative-sample training. 🧐These approaches were all originally demonstrated on the Qwen+Math combination, but do they work in other settings? If not, under which

3

44

186

Nice survey! Thanks for citing our survey on thinking with images, in which we also discussed Programmatic Visual Manipulation. 📖 https://t.co/uows6U2f7w

New survey on Compositional Visual Reasoning! It covers 260+ papers & 60+ benchmarks; we read most of the top-tier papers (2023–now). Missing/new SOTA? Contribute via GitHub Issues! arXiv: https://t.co/On8f0SH6dI

1

3

15

Check out this awesome paper on a new paradigm about thinking with imagination/images led by Prof. May Fung @May_F1_ and her super productive team at HKUST!

🧠 How can AI evolve from statically 𝘵𝘩𝘪𝘯𝘬𝘪𝘯𝘨 𝘢𝘣𝘰𝘶𝘵 𝘪𝘮𝘢𝘨𝘦𝘴 → dynamically 𝘵𝘩𝘪𝘯𝘬𝘪𝘯𝘨 𝘸𝘪𝘵𝘩 𝘪𝘮𝘢𝘨𝘦𝘴 as cognitive workspaces, similar to the human mental sketchpad? 🔍 What’s the 𝗿𝗲𝘀𝗲𝗮𝗿𝗰𝗵 𝗿𝗼𝗮𝗱𝗺𝗮𝗽 from tool-use → programmatic

0

7

32

🔥 Check out our latest work on VL Deep Research! We introduce WebWatcher, a multimodal agent for deep research that possesses enhanced visual-language reasoning capabilities.

2

8

43