Stanford MSL

@StanfordMSL

Followers

446

Following

3

Media

19

Statuses

45

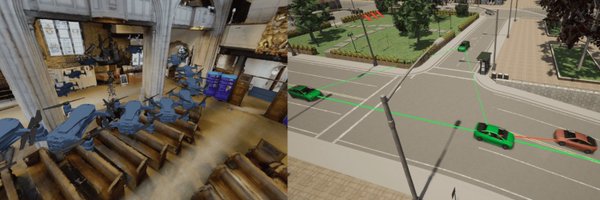

Stanford Multi-robot Systems Laboratory. Endowing groups of robots with the intelligence to collaborate safely and effectively with humans and each other.

Joined December 2020

[5/5] We show in hardware experiments that LatentToM, running on two decentralized arms, solves tasks as well as a fully centralized bimanual policy does. Paper: Project:

arxiv.org

We present Latent Theory of Mind (LatentToM), a decentralized diffusion policy architecture for collaborative robot manipulation. Our policy allows multiple manipulators with their own perception...

Excited to announce Splat-MOVER for multi-stage, open-vocabulary manipulation, with:

- Semantic and affordance scene understanding
- Scene-editing
- Robotic grasp generation!

Find out more at and join us Friday, 11/08/24, at the #CoRL2024 poster session.

RT @simonlc_: We're excited to present a differentiable physics engine for NeRF-represented objects! We augment object-centric NeRFs with d…
