SovitRath5

@SovitRath5

Followers: 142, Following: 1K, Media: 304, Statuses: 569

Lead SWE (GenAI and LLMs) @ Indegene | Blog: https://t.co/Rq2WcIT5QC | GitHub: https://t.co/9PmVei4IoP

Joined January 2017

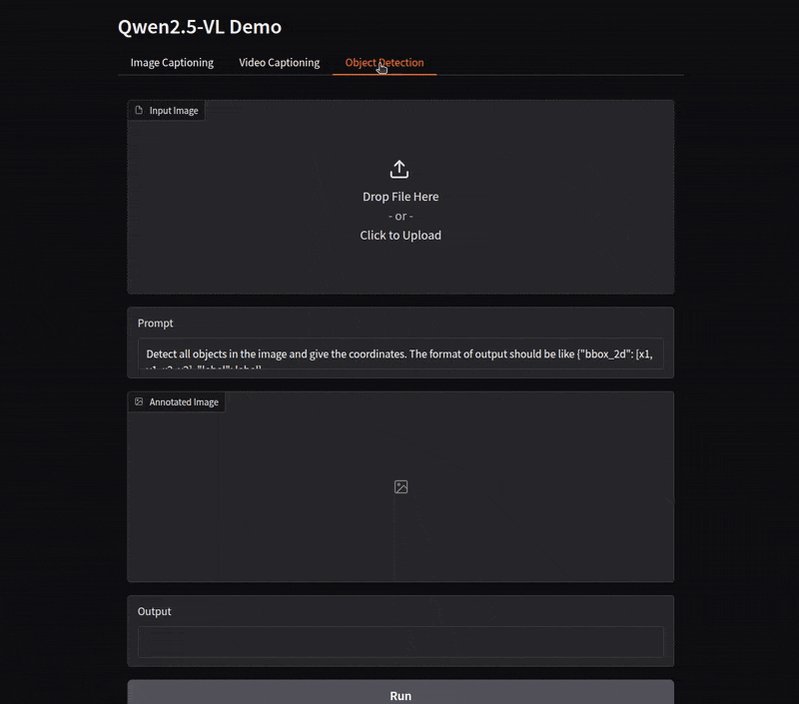

This week's article on DebuggerCafe covers the Qwen2.5-VL model: "Qwen2.5-VL: Architecture, Benchmarks and Inference". #DeepLearning

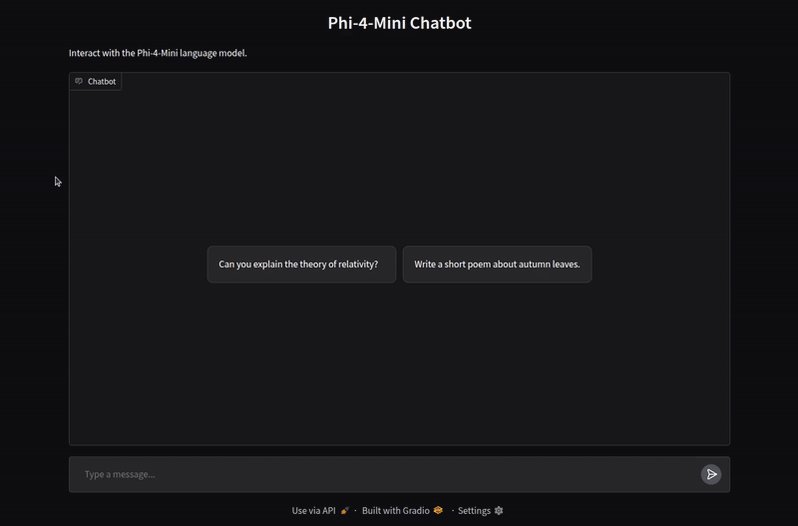

Phi-4 Mini and Phi-4 Multimodal. We cover the following components of Phi-4:

* Phi-4 Mini language model architecture
* Phi-4 multimodal model architecture
* Benchmarks
* Running inference using the Phi-4 Mini language model

#DeepLearning

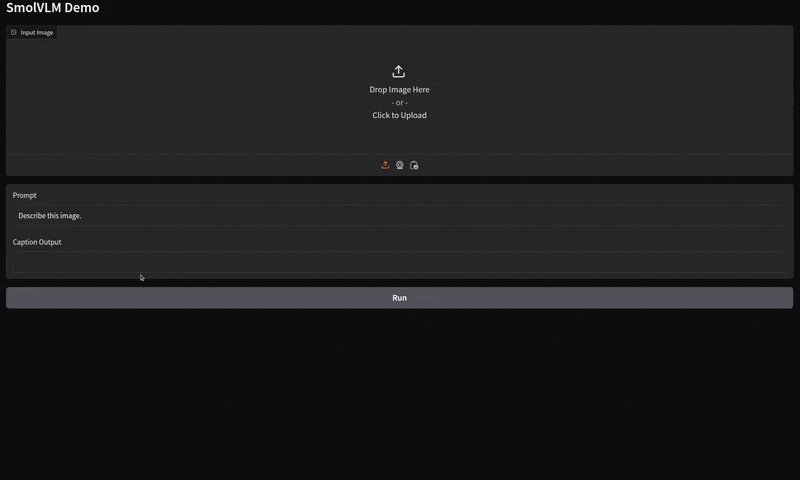

Working on a new project: fine-tuning SmolVLM for receipt OCR. These are the initial results after training SmolVLM-256M. The trained adapters are pushed directly to GitHub; check the notebooks folder. #DeepLearning #HuggingFace #SmolVLM @huggingface
