Somnath Basu Roy Chowdhury

@SomnathBrc

Followers: 98 · Following: 192 · Media: 27 · Statuses: 52

Research Scientist at Google Research

Joined January 2024

How can we perfectly erase concepts from LLMs? Our method, Perfect Erasure Functions (PEF), erases concepts from LLM representations w/o parameter estimation, achieving a Pareto-optimal erasure-utility tradeoff w/ guarantees. #AISTATS2025 🧵

@snigdhac25 (9/n) I’m attending ICLR in person and presenting our poster on April 25 in Poster Session 3, 10 AM-12:30 PM. Please feel free to stop by if you’re interested. I’m also happy to chat about unlearning or AI safety in general. cc: @uncnlp @unccs.

(8/n) Finally, I would like to thank all my amazing co-authors: Krzysztof, Arijit, Avinava, and @snigdhac25. Code: Paper link:

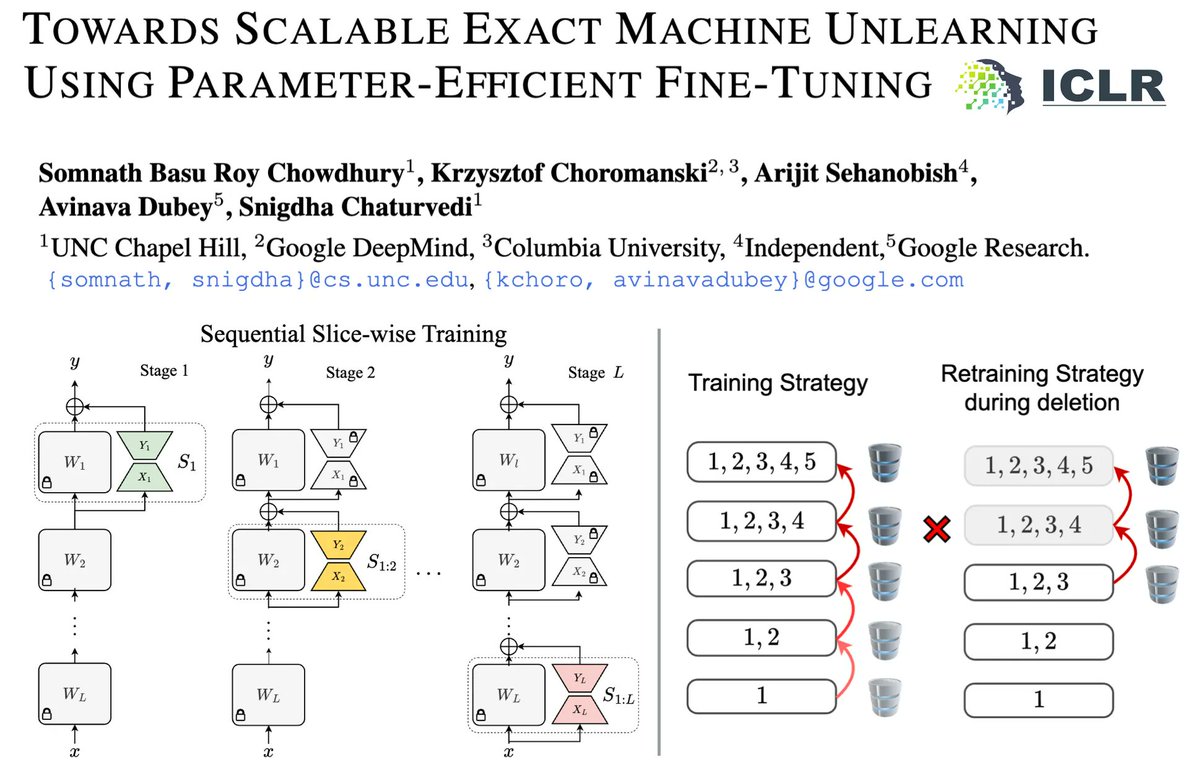

How can we perfectly unlearn data from LLMs while providing guarantees? We present S³T, a scalable unlearning framework that guarantees data deletion from LLMs by leveraging parameter-efficient fine-tuning. #ICLR2025 🧵

RT @abeirami: Finally, if you are also going to #AISTATS2025, @SomnathBrc will be presenting perfect concept erasure. Somnath will be at I…

(9/n) Finally, I would like to thank all my amazing co-authors: Avinava, @abeirami, Rahul, @nicholasmonath, Amr, @snigdhac25. cc: @uncnlp @unccs.

(7/n) We would like to highlight great previous work, such as LEACE, which perfectly erases concepts to protect against linear adversaries. Our work improves on this method and presents a technique that can protect against any adversary.

Ever wanted to mindwipe an LLM? Our method, LEAst-squares Concept Erasure (LEACE), provably erases all linearly-encoded information about a concept from neural net activations. It does so surgically, inflicting minimal damage to other concepts. 🧵
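
To make "erasing linearly-encoded information" concrete, here is a minimal sketch. It is not the LEACE algorithm itself and not the PEF method from this thread. It assumes that a linear probe has nothing to exploit once the cross-covariance between the representations and the concept labels is zero, and it reaches that condition with a plain orthogonal projection. LEACE additionally chooses the map that distorts the representations least, which this sketch does not attempt. The function names `fit_linear_eraser` and `erase` are hypothetical.

```python
import numpy as np


def fit_linear_eraser(X, Z):
    """Fit a projection that zeroes the cross-covariance between X and Z.

    X: (n, d) array of representations.
    Z: (n,) or (n, k) array of concept labels (e.g. one-hot or 0/1).
    Returns (mu, P): the mean of X and a symmetric (d, d) projection matrix.
    """
    X = np.asarray(X, dtype=float)
    Z = np.asarray(Z, dtype=float).reshape(len(X), -1)

    mu = X.mean(axis=0)
    Xc = X - mu
    Zc = Z - Z.mean(axis=0)

    # Cross-covariance between features and concept, shape (d, k).
    sigma_xz = Xc.T @ Zc / len(X)

    # Orthonormal basis for the directions that co-vary with the concept.
    U, s, _ = np.linalg.svd(sigma_xz, full_matrices=False)
    U = U[:, s > 1e-10]

    # Project onto the orthogonal complement of those directions.
    P = np.eye(X.shape[1]) - U @ U.T
    return mu, P


def erase(X, mu, P):
    """Apply the fitted eraser: centre, project, then restore the mean."""
    return (np.asarray(X, dtype=float) - mu) @ P + mu
```

After `mu, P = fit_linear_eraser(X, Z)`, the array `erase(X, mu, P)` has (numerically) zero cross-covariance with `Z` on the fitting data. Nonlinear adversaries can still recover the concept, which is the gap the thread says PEF targets.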

(2/n) We study the fundamental limits of concept erasure. Borrowing from the work of @FlavioCalmon et al. in the information theory literature, we characterize the erasure capacity and the maximum utility that can be retained during concept erasure.
