The Sagacious Society of Smol Model Enjoyers

@SmolModels

Followers: 2,154

Following: 3

Media: 6

Statuses: 40

smol is simple, speedy, safe, and scheap.

San Francisco, CA

Joined February 2023


Pinned Tweet

we are back thanks to @FanaHOVA! first project 📈

1

7

26

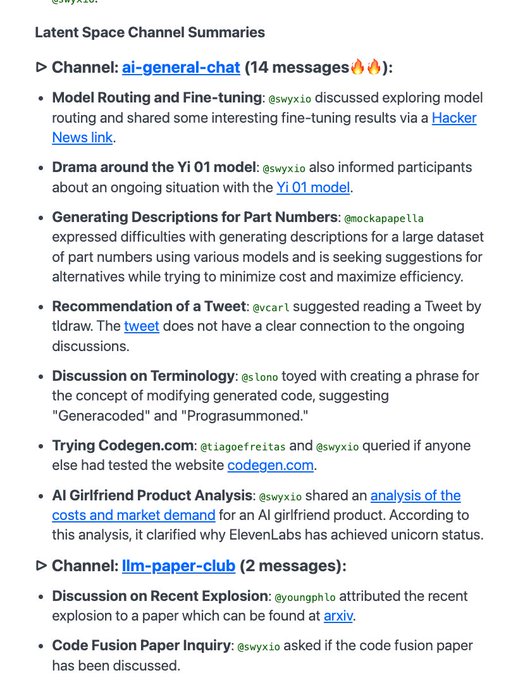

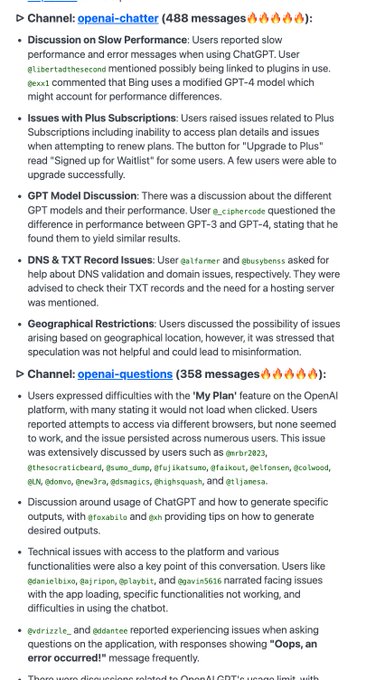

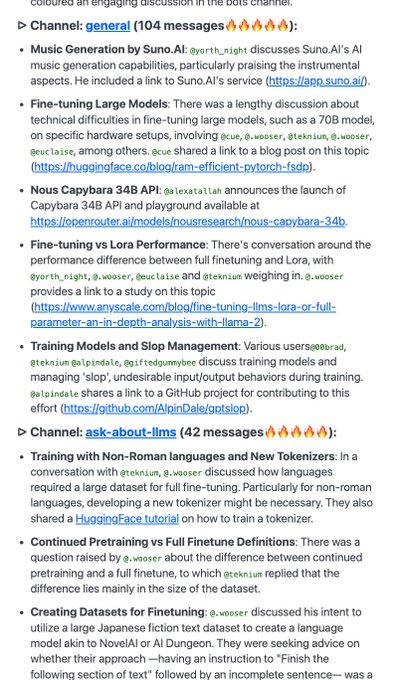

AI Discord overwhelm?

We gotchu.

Coming to smol talk 🔜

(what are the top AI discords we should add? we have @openai @langchainai @nousresearch @Teknium1 @alignment_lab @latentspacepod)

5

4

27

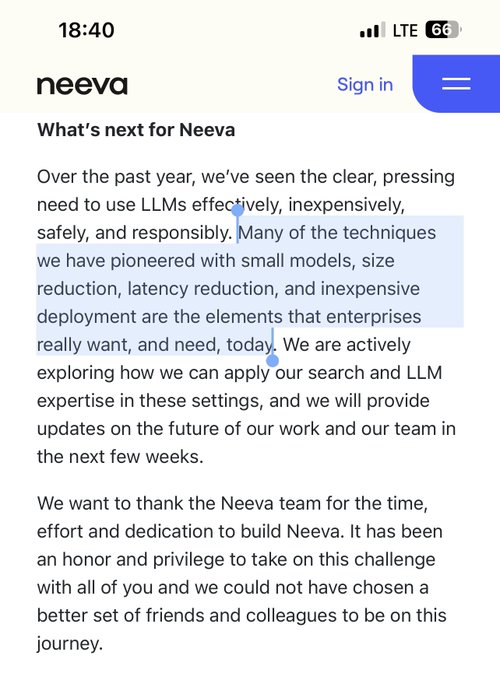

Neeva is shutting down Neeva dot com, and pivoting to smol models for the enterprise 👀

0

0

4

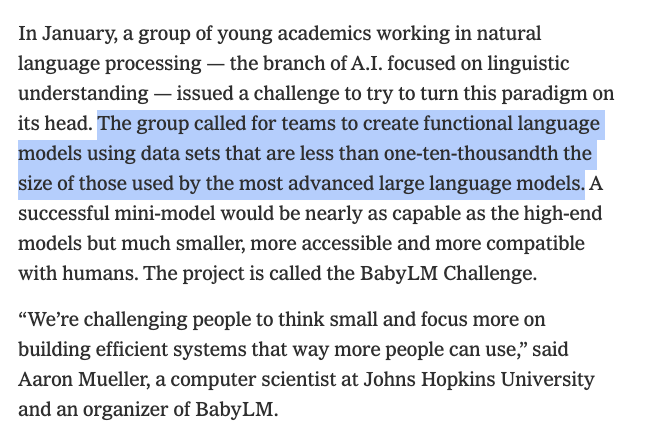

"big things ~~can~~ should always start small" 👏

0

1

3

@n0riskn0r3ward @swyx thanks for the feedback! currently working on a rewrite that will hopefully address some of these issues 🐣

0

0

0