search founder

@n0riskn0r3ward

Solo entrepreneur passionate about AI and search tech. Building a niche search product and sharing what I learn along the way.

Joined June 2022

In many ways, fine-tuning/training good models just feels like ensemble maxxing. Along these lines, a DSPy prompt optimizer idea inspired by @dianetc_'s recent paper: instead of finding the best prompt, find the three best prompts whose outputs, when averaged or ensembled in.
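A rough sketch of that idea, in plain Python rather than DSPy's optimizer API. It assumes you have already cached each candidate prompt's predictions on a labeled dev set; the prompt ids, outputs, labels, and helper names below are all hypothetical. It searches every trio of prompts and keeps the one whose majority vote scores best, which is not necessarily the three individually strongest prompts.

```python
from collections import Counter
from itertools import combinations


def majority_vote(answers):
    """Return the most common answer; ties go to the earliest-listed prompt."""
    counts = Counter(answers)
    best = max(counts.values())
    return next(a for a in answers if counts[a] == best)


def ensemble_accuracy(prompt_ids, outputs, labels):
    """Accuracy of the majority vote over the chosen prompts' outputs."""
    correct = 0
    for i, label in enumerate(labels):
        votes = [outputs[p][i] for p in prompt_ids]
        correct += majority_vote(votes) == label
    return correct / len(labels)


def best_trio(outputs, labels, k=3):
    """Exhaustively score every k-subset of prompts; keep the best ensemble."""
    return max(
        combinations(sorted(outputs), k),
        key=lambda ids: ensemble_accuracy(ids, outputs, labels),
    )


# Hypothetical dev-set labels and cached per-prompt predictions.
labels = ["y", "n", "y", "n", "y", "n"]
outputs = {
    "A": ["y", "n", "y", "n", "n", "y"],  # wrong on the last two examples
    "B": ["y", "n", "y", "n", "n", "y"],  # same mistakes as A
    "C": ["y", "n", "n", "y", "y", "n"],  # wrong on the middle two
    "D": ["n", "y", "y", "n", "y", "n"],  # wrong on the first two
}

trio = best_trio(outputs, labels)
print(trio, ensemble_accuracy(trio, outputs, labels))
```

Every prompt here scores 4/6 on its own, but A, C, and D make different mistakes, so their vote is right on all six examples, while A, B, and C together still score 4/6. Exhaustive search is only practical for a small candidate pool; a greedy or sampled search would be needed beyond that.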

The bootstrapped entrepreneur in me is hella annoyed with the part of me that's following my incentives and switching to the Codex CLI bc it's basically free with my existing monthly sub and somehow 10x better than GPT-5 in @cursor_ai for small single-file edits.

In other discoveries today: Fireworks' APIs will give you 5 logprobs. No more logprobs for you. @DeepInfra will only give you the logprob of the token generated. And @togethercompute's docs say they'll give you logprobs, but instead they just give you an error.

I think @FireworksAI_HQ might win the award for dumbest pricing setup. I want to pay you money - maybe just figure out how to let me do that with the least friction possible.

Outperforms Qwen 3's reranker for me, which was previously the best reranker in my testing. Also outperforms GPT-5-mini on most metrics. Doesn't quite best Gemini 2.5 Flash Lite, though this is $0.05 per M tokens. It's a Qwen 2.5 train.

📣 Announcing rerank-2.5 and 2.5-lite: our latest generation of rerankers!
• First reranker with instruction-following capabilities.
• rerank-2.5 and 2.5-lite are 7.94% and 7.16% more accurate than Cohere Reranker v3.5.
• Additional 8.13% and 7.55% performance gain with

When you compare Google’s success in AI to Azure and AWS’s inability to run inference on an open source model released by a company they own 49% of, it’s all the more remarkable.

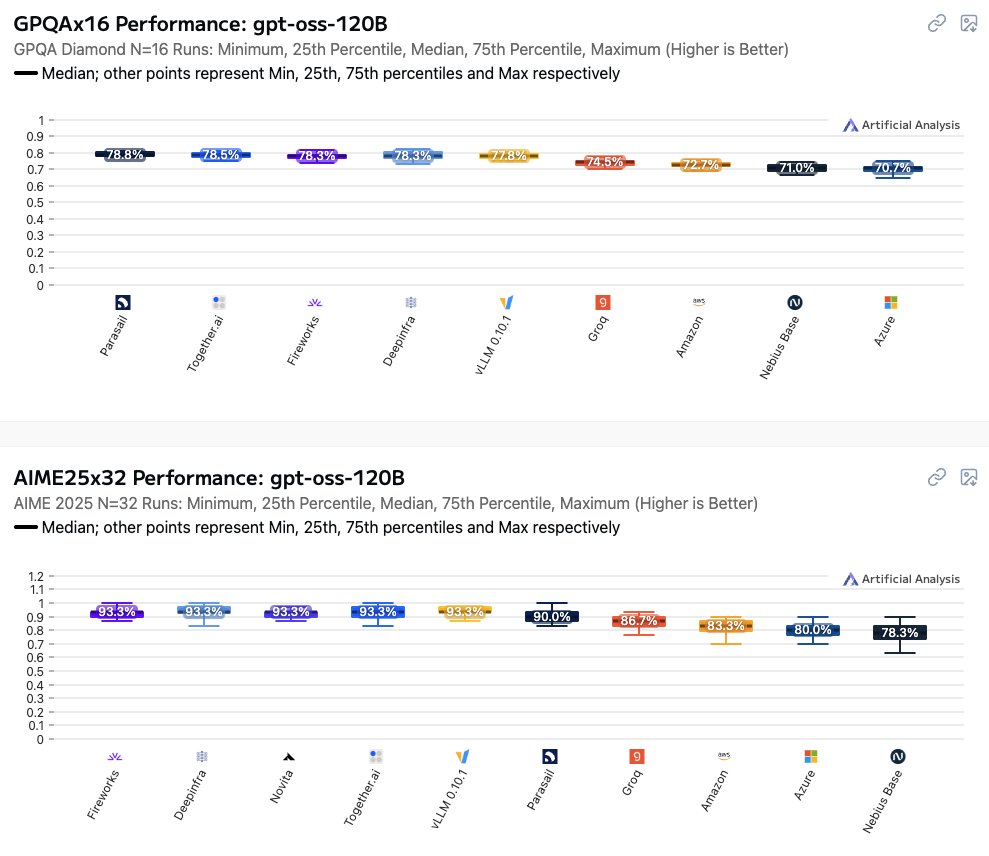

We've launched benchmarks of the accuracy of providers offering APIs for gpt-oss-120b. We compare providers by running GPQA Diamond 16 times, AIME25 32 times, and IFBench 8 times. We report the median score across these runs alongside minimum, 25th percentile, 75th percentile and
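For anyone wanting to summarize their own repeated eval runs the same way, here is a minimal sketch using Python's standard library. The scores are made up for illustration, and `summarize_runs` is a hypothetical helper, not the benchmark's actual tooling; it covers only the statistics named in the post above.

```python
import statistics


def summarize_runs(scores):
    """Summarize repeated benchmark runs: min, 25th pct, median, 75th pct."""
    # method="inclusive" treats the runs as the whole population,
    # so the quartiles never fall outside the observed range.
    p25, median, p75 = statistics.quantiles(scores, n=4, method="inclusive")
    return {"min": min(scores), "p25": p25, "median": median, "p75": p75}


# Hypothetical accuracy scores (percent) from five runs of one provider.
runs = [70, 72, 68, 74, 66]
summary = summarize_runs(runs)
print(summary)
```

Reporting the spread alongside the median matters here because a provider with a decent median but a low minimum is still unreliable for any single request.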
