Sergio Paniego

@SergioPaniego

Followers

3K

Following

12K

Media

537

Statuses

5K

Machine Learning Engineer @huggingface 🤗 AI PhD. Technology enables us to be more human. 🏳️‍🌈

Madrid, España

Joined July 2011

Google released a toooon of resources to explore FunctionGemma 👨‍🍳 For example, this Colab is tailored for fine-tuning the model to handle tool-selection ambiguity using TRL. Since it’s built for fine-tuning, it’s a great way to get started

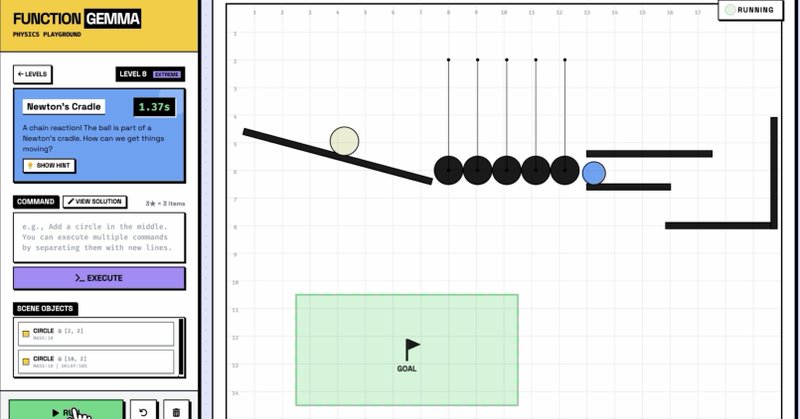

this game by @xenovacom is just amazing! 🤩 Play with FunctionGemma running locally in the browser, using natural language to solve the levels https://t.co/g2PlkggEQb

huggingface.co

Google DeepMind releases FunctionGemma, a 270M model specialized in 🔧 tool calling, built for fine-tuning. TRL has day-0 support. To celebrate, we’re sharing new resources:
- Colab guide to fine-tune it for 🌐 browser control with BrowserGym OpenEnv
- Standalone training script

NEW: Google releases FunctionGemma, a lightweight (270M), open foundation model built for creating specialized function calling models! 🤯 To test it out, I built a small game: use natural language to solve fun physics simulation puzzles, running 100% locally in your browser! 🕹️
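
For readers new to function calling, here is a minimal sketch of the application side: the model emits a structured call and the app looks up and runs the matching function. Everything here is an assumption for illustration. The JSON shape, the `TOOLS` registry, and the `spawn_ball` / `set_gravity` helpers are hypothetical, and FunctionGemma's real output format is defined by its own chat template, which is not shown here.

```python
import json
from typing import Any, Callable

# Hypothetical tool registry; names and signatures are illustrative only.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a plain Python function as a callable tool."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def set_gravity(strength: float) -> str:
    return f"gravity set to {strength}"


@tool
def spawn_ball(x: int, y: int) -> str:
    return f"ball spawned at ({x}, {y})"


def dispatch(model_output: str) -> Any:
    """Parse an assumed JSON tool call and run the matching function."""
    call = json.loads(model_output)
    name = call["name"]
    args = call.get("arguments", {})
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**args)


if __name__ == "__main__":
    # Stand-in for text a fine-tuned model might generate (assumed format).
    fake_output = '{"name": "spawn_ball", "arguments": {"x": 3, "y": 7}}'
    print(dispatch(fake_output))
```

Keeping the registry explicit means the model can only trigger functions the app chose to expose, which is the usual reason to dispatch by name rather than evaluate generated code.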

Fine-tune Google's FunctionGemma for mobile, with agents, on Colab, locally, or on Hugging Face. Google DeepMind has just released FunctionGemma and anyone can fine-tune it with TRL. This is the model:
- uses the Gemma 3 270M architecture + adapted chat template
- specifically for

- Colab notebook: https://t.co/Lr9CfCTloc
- Training script: https://t.co/KDs7dbP3Io (the command to run it is inside the script)
- More notebooks in TRL:

huggingface.co

Tokenization is almost always overlooked and then becomes THE reason why your LLM pipeline errors out. In this blog we talk about:
- tokenization
- 🤗 tokenizers and 🤗 transformers
- different tokenization algorithms
- train a tokenizer on your custom dataset
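
To make "train a tokenizer on your custom dataset" concrete, here is a toy byte-pair encoding (BPE) trainer in plain Python. It is a sketch of the general algorithm only, not the 🤗 tokenizers implementation and not taken from the blog. The function names (`train_bpe`, `merge_pair`, `tokenize`), the whitespace pre-splitting, and the tie-breaking rule are assumptions made for the example.

```python
from collections import Counter


def merge_pair(symbols: tuple[str, ...], pair: tuple[str, str]) -> tuple[str, ...]:
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    out = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def train_bpe(corpus: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Learn up to `num_merges` merge rules from whitespace-split text."""
    # Start with every word as a sequence of single characters.
    words = Counter(tuple(w) for line in corpus for w in line.split())
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in words.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        # Most frequent pair wins; ties are broken by the pair itself
        # so the result is deterministic (an assumption of this sketch).
        best = max(pairs, key=lambda p: (pairs[p], p))
        merges.append(best)
        merged_words = Counter()
        for symbols, freq in words.items():
            merged_words[merge_pair(symbols, best)] += freq
        words = merged_words
    return merges


def tokenize(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Apply the learned merges, in training order, to a single word."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


if __name__ == "__main__":
    rules = train_bpe(["low low low lower lowest"], num_merges=2)
    print(rules)
    print(tokenize("lowest", rules))
```

Production tokenizers add normalization, byte-level fallback, and special tokens on top of this loop, so treat the sketch as a reading aid rather than something to train real models with.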

Steering LLM behavior in real time using the 🤗 Transformers library. I just published a video on the @huggingface channel to explain what steering is, why it is analogous to electrical brain stimulation 🧠⚡️, and how it can easily be achieved with just a few lines of code. ⬇⬇⬇

Seems like @GoogleColab is adding H100 support! No luck allocating one yet 🥲, probably a supply problem. Colab is easily one of the most impactful tools in AI EVER, and I don’t think we can fully measure how many lives it has already changed. Santa Claus came early this year 🎅

💡 ICYMI you can now deploy @AIatMeta Segment Anything Model 3 (SAM 3) on @Microsoft Foundry with @huggingface! SAM 3 claims the gold 🥇 with state-of-the-art segmentation, combining image and video understanding with unified, promptable intelligence. - SoTA performance across

Microsoft TRELLIS.2 is here 🔥
• Single image → textured 3D mesh
• 4B params, flow-matching transformer
• Up to 1536³ resolution
• Open weights, MIT licensed
⬇️ Demo available on Hugging Face

Fine-tune Nemotron 3 Nano in TRL with coding agents like Claude Code, on Colab, locally, or on the Hub. To fine-tune, pick one of these tools:
- Combine HF skills with a coding agent like Claude Code.
- Use this Colab notebook.
- Train it on HF Jobs using the Hugging Face Hub
- If

love the level of detail and transparency in this blog about the new nvidia open release of Nemotron 3 Nano (h/t @llm_wizard et al.) learned a lot, especially loved the section on NeMo Gym and rl for agentic models https://t.co/uwobYaxxje

Open models year in review. What a year! We're back with an updated open model builder tier list, our top models of the year, and our predictions for 2026. First, the winning models:
1. DeepSeek R1 (@deepseek_ai): Transformed the AI world
2. Qwen 3 Family (@AlibabaGroup): The new

we challenged Claude Code and Codex to fine-tune an open source LLM. Claude Code won by surpassing the base model score on its fourth run in 138k tokens. We gave both agents the same task:
- Fine-tune Qwen3-0.6B on OpenR1/codeforces-cot
- Beat the base model on HumanEval
- 5

🎄 last talk of the year about open AI and HF today at @urjc for undergrad students. always a pleasure to be back at my alma mater 🎅 slides: https://t.co/Pv9pTzyEzl
