Rogerio Feris

@RogerioFeris

Followers: 1K · Following: 394 · Media: 1 · Statuses: 48

Principal scientist and manager at the MIT-IBM Watson AI Lab

Joined February 2020

RT @__YuWang__: 🎉 Our paper “M+: Extending MemoryLLM with Scalable Long-Term Memory” is accepted to ICML 2025! 🔹 Co-trained retriever + lat….

arxiv.org

Equipping large language models (LLMs) with latent-space memory has attracted increasing attention as they can extend the context window of existing language models. However, retaining information...

RT @chancharikm: 🎯 Introducing Sparse Attention Vectors (SAVs): A breakthrough method for extracting powerful multimodal features from Larg….

RT @Yikang_Shen: Granite 3.0 is our latest update for the IBM foundation models. The 8B and 2B models outperform strong competitors with si….

RT @NasimBorazjani: 🚨 OpenAI's new o1 model scores only 38.2% in correctness on our new benchmark of combinatorial problems, SearchBench (h….

RT @WeiLinCV: Welcome to join our workshop to figure out what is next in Multimodal foundation models! Tuesday 08:30 Pacific Time, Summit 4….

RT @leokarlin: Thanks for the highlight @_akhaliq! We offer a simple and nearly-data-free way to move (large quantities) of custom PEFT mod….

We have a cool challenge on understanding document images in our 2nd #CVPR2024 workshop on “What is Next in Multimodal Foundation Models?”. This is a great opportunity to showcase your work in front of a large audience (pic below from our 1st workshop).

RT @ylecun: IBM & Meta are launching the AI Alliance to advance *open* & reliable AI. The list of over 50 founding members from industry, g….

ai.meta.com

AI Alliance Launches as an International Community of Leading Technology Developers, Researchers, and Adopters Collaborating Together to Advance Open, Safe, Responsible AI

Our team is hiring 2024 summer interns. We are doing research towards augmenting large language models with memory, multiple modalities (vision, speech, sound, …), and specializing LLMs for enterprise domains. @MITIBMLab.

RT @JunmoKang: 🚨Can we self-align LLMs with an expert domain like biomedicine with limited supervision? Introducing Self-Specialization, u….

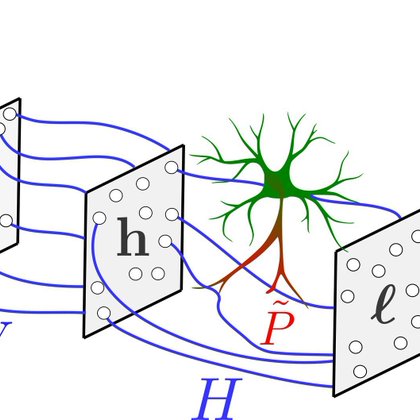

RT @DimaKrotov: What could be the computational function of astrocytes in the brain? We hypothesize that they may be the biological cells t….

pnas.org

Glial cells account for between 50% and 90% of all human brain cells, and serve a variety of important developmental, structural, and metabolic fun...

RT @ZexueHe: (1/3)🤔Wondering what's transferred between the pre-training and fine-tuning? Our ACL finding looks into this question with syn….

RT @DimaKrotov: Recent advances in Hopfield networks of associative memory may be the guiding theoretical principle for designing novel lar….

nature.com

Nature Reviews Physics - Dmitry Krotov discusses recent theoretical advances in Hopfield networks and their broader impact in the context of energy-based neural architectures.

RT @dariogila: We can all agree we’re at a unique and evolutionary moment in AI, with enterprises increasingly turning to this technology’s….

RT @MITIBMLab: New technique from the @MITIBMLab and its collaborators learns to "grow" a larger machine-learning model from a smaller, pre….

We are looking for a summer intern (MSc/PhD) to work on large language models for sports & entertainment, with the goal of improving the experience of millions of fans as part of major tournaments (US Open/Wimbledon) @IBMSports @MITIBMLab Apply at:

RT @johnjnay: Multi-Task Prompt Tuning Enables Transfer Learning.
-Learn single prompt from multiple task-specific prompts.
-Learn multiplic….

RT @jamessealesmith: Happy to share that we had two papers accepted to #CVPR2023! Both are on continual adaptation of pre-trained models (V….

RT @SivanDoveh: Our paper "Teaching Structured VL Concepts to VL Models" just got accepted to #CVPR2023 🤩🤩🤩 https:….