Refael Tikochinski

@R_Tikochinski

Followers: 37 · Following: 5 · Media: 10 · Statuses: 17

NLP and Neuroscience PhD student

Joined September 2011

Very excited to share our new paper published in Nature Communications @NatureComms (link below). This work is part of my PhD research under the supervision of @roireichart (Technion), @HassonUri (@HassonLab), and @ArielYGoldstein, in collaboration with @YoavMeiri.

Our paper has been accepted for publication in Cerebral Cortex. Here is the link: . The latest version is also freely available via our bioRxiv link below. @roireichart @ArielYGoldstein @HassonLab @YeshurunYaara.

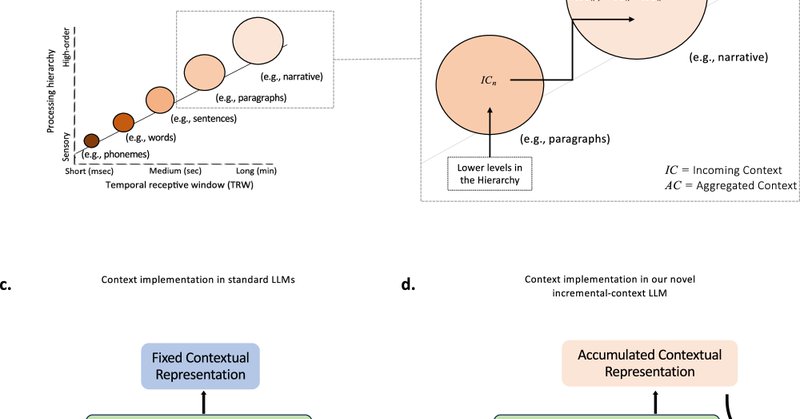

Very excited to share our new preprint, part of my PhD research under the supervision of @roireichart (Technion), @HassonUri and @ArielYGoldstein (@HassonLab), and in collaboration with @YeshurunYaara.

biorxiv.org

Computational Deep Language Models (DLMs) have been shown to be effective in predicting neural responses during natural language processing. This study introduces a novel computational framework,...