Hasson Lab

@HassonLab

Followers: 4K · Following: 172 · Media: 71 · Statuses: 239

The latest news from Uri Hasson's cognitive neuroscience research group at Princeton University

Princeton, NJ

Joined April 2016

How do different languages converge on a shared neural substrate for conceptual meaning? We’re excited to share our latest preprint that specifically addresses this question, led by @zaidzada_

I'm recruiting PhD students to join my new lab in Fall 2026! The Shared Minds Lab at @USC will combine deep learning and ecological human neuroscience to better understand how we communicate our thoughts from one brain to another.

Music is an incredibly powerful retrieval cue. What is the neural basis of music-evoked memory reactivation? And how does this reactivation relate to later memory? In our new study, we used Eternal Sunshine of the Spotless Mind to find out.

biorxiv.org

Music is a potent cue for recalling personal experiences, yet the neural basis of music-evoked memory remains elusive. We address this question by using the full-length film Eternal Sunshine of the...

Finally, we developed a set of interactive tutorials for preprocessing and running encoding models to get you started. Happy to hear any feedback or field any questions about the dataset! https://t.co/pKYrVRRC36

We validated both the data and stimulus features using encoding models, replicating previous findings showing an advantage for LLM embeddings.

We also provide word-level transcripts and stimulus features ranging from low-level acoustic features to large language model embeddings.

We recorded ECoG data in nine subjects while they listened to a 30-minute story. We provide a minimally preprocessed derivative of the raw data, ready to be used.

We’re excited to share our unique ECoG dataset for natural language comprehension. The paper is now in Scientific Data (https://t.co/4sNAx1XqGn) and the data are on OpenNeuro (https://t.co/941hX6GPDl).

@zaidzada_ @samnastase

We're really excited to share this work and happy to hear any comments or feedback! Preprint: https://t.co/vmSeHXOJUF Code:

These findings suggest that, despite the diversity of languages, shared meaning emerges from our interactions with one another and our shared world.

Our results suggest that neural representations of meaning underlying different languages are shared across speakers of various languages, and that LMs trained on different languages converge on this shared meaning.

We then tested the extent to which each of these 58 languages can predict the brain activity of our participants. We found that the more similar a language is to the listener’s native language, the better the prediction:

What about multilingual models? We translated the story from English to 57 other languages spanning 14 families, and extracted embeddings for each from multilingual BERT. We visualized the dissimilarity matrix using MDS and found clusters corresponding to language family types.
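A minimal sketch of the visualization step, assuming classical (eigendecomposition-based) MDS; the thread does not say which MDS variant was used. The "language" vectors below are synthetic stand-ins rather than multilingual BERT embeddings, and the names `classical_mds`, `pairwise_distances`, and `families` are hypothetical.

```python
import numpy as np

def classical_mds(D, n_components=2):
    """Embed points in n_components dimensions from a distance matrix D."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n      # centering matrix
    B = -0.5 * J @ (D ** 2) @ J              # double-centered Gram matrix
    eigvals, eigvecs = np.linalg.eigh(B)     # eigenvalues in ascending order
    top = np.argsort(eigvals)[::-1][:n_components]
    scale = np.sqrt(np.clip(eigvals[top], 0.0, None))
    return eigvecs[:, top] * scale

def pairwise_distances(E):
    """Euclidean distance between every pair of rows in E."""
    return np.linalg.norm(E[:, None, :] - E[None, :, :], axis=-1)

# Synthetic stand-in (assumption): 3 "families" of 4 "languages" each,
# with one 10-dimensional vector per language.
rng = np.random.default_rng(0)
families = np.repeat(np.arange(3), 4)
centers = 5.0 * rng.standard_normal((3, 10))
lang_vectors = centers[families] + 0.5 * rng.standard_normal((12, 10))

D = pairwise_distances(lang_vectors)         # language-by-language dissimilarity
coords = classical_mds(D, n_components=2)    # 2-D coordinates for plotting

# Compare within-family and between-family distances in the 2-D layout.
same_family = families[:, None] == families[None, :]
off_diagonal = ~np.eye(len(families), dtype=bool)
D_2d = pairwise_distances(coords)
within = D_2d[same_family & off_diagonal].mean()
between = D_2d[~same_family].mean()
```

With real data, `D` would be computed from the per-language embeddings of the translated story, and `coords` would be plotted with one point per language, colored by family.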

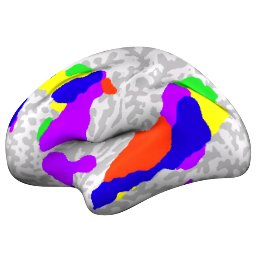

We found that models trained to predict neural activity for one language generalize to different subjects listening to the same content in a different language, across high-level language and default-mode regions.

We then used the encoding models trained on one language to predict the neural activity in listeners of other languages.
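The cross-language test can be sketched as follows: fit a ridge-regression encoding model on one group, then score its predictions against a second group's brain activity. This is an illustration on synthetic data, not the study's pipeline. It assumes the two languages' embeddings already live in a shared space and are aligned in time to the fMRI samples; `fit_ridge`, `columnwise_corr`, and all array names are hypothetical.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: one weight vector per voxel (column of Y)."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def columnwise_corr(A, B):
    """Pearson correlation between matching columns of A and B."""
    A = A - A.mean(axis=0)
    B = B - B.mean(axis=0)
    return (A * B).sum(axis=0) / (
        np.linalg.norm(A, axis=0) * np.linalg.norm(B, axis=0)
    )

rng = np.random.default_rng(0)
n_trs, n_features, n_voxels = 300, 20, 50

# Synthetic assumption: both groups share one embedding-to-brain mapping.
W_shared = rng.standard_normal((n_features, n_voxels))

# Group A: embeddings of the story in language A, plus simulated responses.
X_a = rng.standard_normal((n_trs, n_features))
Y_a = X_a @ W_shared + rng.standard_normal((n_trs, n_voxels))

# Group B: same story in language B, modeled as slightly perturbed embeddings.
X_b = X_a + 0.1 * rng.standard_normal((n_trs, n_features))
Y_b = X_b @ W_shared + rng.standard_normal((n_trs, n_voxels))

# Train on language A, test on listeners of language B.
W_hat = fit_ridge(X_a, Y_a, alpha=10.0)
scores = columnwise_corr(X_b @ W_hat, Y_b)   # one correlation per voxel
```

Here `scores` is high by construction, because the simulated groups share the same mapping. With real data, the size and anatomical location of these correlations are the empirical question.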

We then asked whether a similar shared space exists in the brains of native speakers of the three different languages. We used voxelwise encoding models that align the LM embeddings with brain activity from one group of subjects listening to the story in their native language.

We extracted embeddings from three unilingual BERT models (trained on entirely different languages) and found that (with a rotation) they converge onto similar embeddings, especially in the middle layers:
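One common way to find such a rotation is orthogonal Procrustes, sketched below. The thread does not name the alignment method, so treating it as Procrustes is an assumption. The data are synthetic, the rows of the two matrices are assumed to be paired (for example, the same story segment in two languages), and `procrustes_rotation` is a hypothetical helper.

```python
import numpy as np

def procrustes_rotation(X, Y):
    """Orthogonal matrix R minimizing the Frobenius norm of X @ R - Y."""
    U, _, Vt = np.linalg.svd(X.T @ Y)
    return U @ Vt

rng = np.random.default_rng(0)
n_items, n_dims = 200, 16

# Synthetic assumption: Y is a rotated, slightly noisy copy of X,
# standing in for embeddings of the same content from two models.
X = rng.standard_normal((n_items, n_dims))
Q, _ = np.linalg.qr(rng.standard_normal((n_dims, n_dims)))  # random orthogonal map
Y = X @ Q + 0.01 * rng.standard_normal((n_items, n_dims))

R = procrustes_rotation(X, Y)

# Mismatch between the two spaces before and after applying the rotation.
error_before = np.linalg.norm(X - Y)
error_after = np.linalg.norm(X @ R - Y)
```

Because `R` is constrained to be orthogonal, it can only rotate or reflect the space. A small `error_after` therefore indicates that the two spaces share their geometry, not that a flexible mapping has overfit.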

We used naturalistic fMRI and language models (LMs) to identify neural representations of the shared conceptual meaning of the same story as heard by native speakers of three languages: English, Chinese, and French.

We hypothesized that the brains of native speakers of different languages would converge on the same supra-linguistic conceptual structures when listening to the same story in their respective languages:

Previous research has found that language models trained on different languages learn embedding spaces with similar geometry. This suggests that the internal geometry of different languages may converge on similar conceptual structures:

There are over 7,000 human languages in the world and they’re remarkably diverse in their forms and patterns. Nonetheless, we often use different languages to convey similar ideas, and we can learn to translate from one language to another.
