Richard Antonello

@NeuroRJ

Followers: 359 · Following: 441 · Media: 10 · Statuses: 224

Postdoc in the Mesgarani Lab at Columbia University. Studying how the brain processes language by using LLMs. (Formerly @HuthLab at UT Austin)

Joined May 2020

RT @katie_kang_: LLMs excel at fitting finetuning data, but are they learning to reason or just parroting🦜? We found a way to probe a mode…

RT @sparse_emcheng: We'll be presenting this at #ACL2025! Come find me and @tomjiralerspong in Vienna :)

RT @yufan_zhuang: 🤯Your LLM just threw away 99.9 % of what it knows. Standard decoding samples one token at a time and discards the rest o…

RT @karansdalal: Today, we're releasing a new paper – One-Minute Video Generation with Test-Time Training. We add TTT layers to a pre-trai…

RT @mariannearr: 🚨Announcing our #ICLR2025 Oral! 🔥Diffusion LMs are on the rise for parallel text generation! But unlike autoregressive LM…

RT @DanielCohenOr1: Vectorization into a neat SVG!🎨✨ Instead of generating a messy SVG (left), we produce a structured, compact representa…

RT @GretaTuckute: Our @CogCompNeuro GAC paper is out! We focus on two main questions: 1⃣ How should we use neuroscientific data in model…

RT @TomerUllman: "The Illusion Illusion": vision language models recognize images of illusions. but they also say non-illusions are illu…

RT @GeelingC: Catch me and @czlwang presenting our poster today (12/14) 3:30-5:30pm at the #NeurIPS2024 NeuroAI Workshop! 🧠

RT @patrickmineault: New post! What do brain scores teach us about brains? Does accounting for variance in the brain mean that an ANN is br…

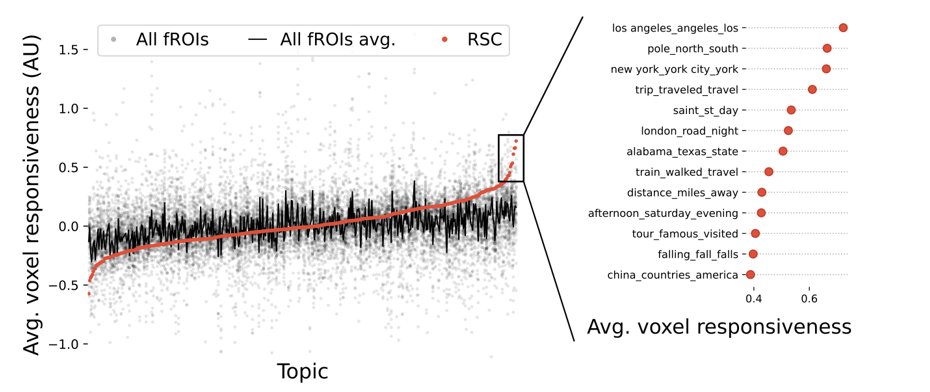

Come by our poster (#3801), which explores how we can use the question-answering abilities of LLMs to build more #interpretable models of language processing in the 🧠. It starts in one hour at #NeurIPS!

LLM embeddings are opaque, hurting them in contexts where we really want to understand what’s in them (like neuroscience). Our new work asks whether we can craft *interpretable embeddings* just by asking yes/no questions to black-box LLMs. 🧵

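The thread's core idea is an embedding in which each dimension is the answer to one yes/no question posed to an LLM about the input. The sketch below illustrates that idea under stated assumptions and is not the method from the paper. `qa_embedding`, `toy_answer_fn` and the three example questions are all hypothetical, and the keyword rules only stand in for calls to a black-box LLM.

```python
from typing import Callable, List, Sequence

# Hypothetical stand-in for a black-box LLM: takes (text, question) and
# returns True for "yes" and False for "no". A real version would prompt an
# LLM with the text and the question and parse its yes/no reply.
AnswerFn = Callable[[str, str], bool]


def qa_embedding(text: str, questions: Sequence[str], answer_fn: AnswerFn) -> List[int]:
    """Return one binary feature per question (1 = "yes", 0 = "no")."""
    return [1 if answer_fn(text, q) else 0 for q in questions]


def toy_answer_fn(text: str, question: str) -> bool:
    """Hypothetical keyword rules standing in for an LLM, for illustration only."""
    lowered = text.lower()
    rules = {
        "Does the input mention a number?": any(ch.isdigit() for ch in text),
        "Does the input involve food?": any(w in lowered for w in ("pizza", "bread", "soup")),
        "Is the input a question?": text.rstrip().endswith("?"),
    }
    # Unknown questions default to "no".
    return rules.get(question, False)


# Hypothetical question set; each question names one embedding dimension.
QUESTIONS = [
    "Does the input mention a number?",
    "Does the input involve food?",
    "Is the input a question?",
]

if __name__ == "__main__":
    for sentence in ["I ate 3 slices of pizza.", "Where did you go?"]:
        vec = qa_embedding(sentence, QUESTIONS, toy_answer_fn)
        # Each dimension is readable: pair it with the question that produced it.
        print(sentence, dict(zip(QUESTIONS, vec)))
```

Because every feature is labeled by the question that produced it, any downstream model fit on these vectors can be read off question by question. Opaque LLM embedding dimensions do not allow that kind of reading.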