Michael J. Proulx

@MichaelProulx

Followers: 2K | Following: 21K | Media: 516 | Statuses: 15K

Threads @mikeproulx2 + Research Scientist @RealityLabs + Professor @BathPsychology (Tweets are my own) Formerly @QMUL & @HHU_de + alum of @JohnsHopkins & @ASU

Redmond, WA

Joined January 2012

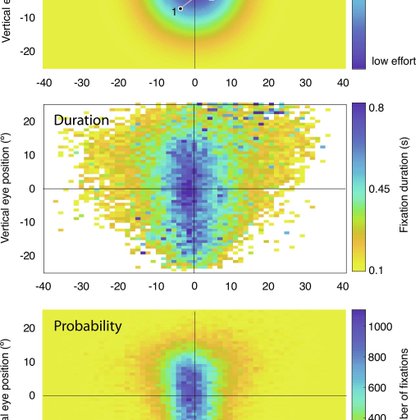

I'm thrilled to share our new paper, just out in @PNASNews! Motor “laziness” constrains fixation selection in real-world tasks. Amazing work by former @RealityLabs intern @csburlingham with @olegkomo, @tsmurdison & Naveen Sendhilnathan https://t.co/hP0yCZldED 1/n

pnas.org

Humans coordinate their eye, head, and body movements to gather information from a dynamic environment while maximizing reward and minimizing biome...

#ImagesOfResearch2025 are back at #BathSpaTrainStation celebrating #RealImpact. Our poster from @UniofBath and @BathPsychology captures part of a journey exploring how a #VR event can be made #accessible for youth with #VisionImpairment. @newcollworc @MichaelProulx #KarinPetrini

Such an exciting set of open source models and data!

🚀New from Meta FAIR: today we’re introducing Seamless Interaction, a research project dedicated to modeling interpersonal dynamics. The project features a family of audiovisual behavioral models, developed in collaboration with Meta’s Codec Avatars lab + Core AI lab, that

Using the dataset, the team developed a lightweight and flexible model for reading recognition with high precision and recall, using RGB video, eye gaze, and head pose data. A detailed performance analysis and the model's capabilities are available in our technical report.

Reading Recognition in the Wild features video, eye gaze, and head pose sensor outputs, created to help solve the task of reading recognition from wearable devices. Notably, this is the first egocentric dataset to feature high-frequency eye-tracking data collected at 60 Hz.

Reading Recognition in the Wild -- open source! What's great about @RealityLabs? Interdisciplinary collaboration, industry-academic partnerships, breakthrough findings, and advancing the field by sharing open source data. It's all here with @meta_aria - check it out!

Reading Recognition in the Wild is a large-scale multimodal dataset comprising 100 hours of reading and non-reading videos from 100+ participants, captured with Project Aria in diverse and realistic scenarios. Download the dataset and model at https://t.co/FJEuPtJ7w8.

Exciting to see the new Aria Gen 2 features shared!

meta.com

Today, we’re excited to share more about the technology inside Aria Gen 2. This includes an in-depth overview of the form factor, audio capabilities, battery life, upgraded cameras and sensors,...

Starting session 3 of the #GenEAI workshop at @ETRA_conference with a paper presentation by Oleg Komogortsev titled Device-Specific Style Transfer of Eye-Tracking Signals #ETRA2025 🇯🇵

Stein Dolan giving a workshop keynote at the #GenEAI workshop at @ETRA_conference, titled "Attention" is All You Need #ETRA2025 🇯🇵

Great to have @RealityLabs sponsor an excellent meeting like @ETRA_conference!

Such a cool conference shirt!

This is the Sensorama, the first multisensory personal immersive theatre, from 1962. There is only one left in the world: a priceless piece of VR history. An exclusive tour to see it is one of many items in the Virtual World Society auction. See https://t.co/DZqZjzI9Yb

Why some are saying AI is just normal technology: https://t.co/xPi8UtBQ5h

#GenerativeAI #AI #artificialintelligence #forbes #techbrium #chatgpt #openAI

forbes.com

A heated discussion in the AI community is whether AI ought to be treated as a normal technology. Some say yes, some say heck no. Here's the inside scoop.

🔬 8 specialized workshops at #ETRA2025: • Eye Movements in Programming • Generative AI meets Eye Tracking • Pervasive Eye Tracking • and more! Dive deep into your area of interest with global experts in Tokyo, May 26-29. See full list: https://t.co/bSSmAm5aY9

#Research

etra.acm.org

The 2025 ACM Symposium on Eye Tracking Research & Applications (ETRA) will be held in Tokyo, Japan from May 26 to May 29, 2025.

Visual experience affects neural correlates of audio-haptic integration: A case study of non-sighted individuals https://t.co/BM2nKqOFQV by @meike_scheller @MichaelProulx @AnnegretNoor et al.

We're thrilled to announce that our paper "RetroSketch: A Retrospective Method for Measuring Emotions and Presence in VR" received an Honorable Mention Award at CHI 2025! 🎊 📖 Feel free to check out the lead author’s website (@d_potts2) to learn more:

Workshop at University of Exeter (UK), September 8, 2025: Immersive VR to understand human cognition, perception & action https://t.co/FiPh8sdm7k via @DrGBuckingham with @MichaelProulx; #AR/#VR

eventbrite.co.uk

Join us for a free, day-long workshop exploring the cutting-edge intersection of immersive virtual reality (VR) and experimental psychology.

🎉 We're delighted to introduce EmoSense, an innovative tool designed to enhance Extended Reality (XR) experiences through advanced emotion recognition technology. #Research #Innovation 📖 If you want to learn more, please check out our website below: https://t.co/wHEkYjnzrT

🔍 Explore Tokyo while attending #ETRA2025! From the futuristic attractions of Odaiba to historic Asakusa, Tokyo offers endless experiences. Conference venue Miraikan is located in vibrant Odaiba with easy access to city highlights! #TokyoTravel Photo by Moiz K. Malik on Unsplash

Imagine walking through a city, asking your AI glasses, “What’s this building?”, and instantly getting accurate, real-time information. 📍 No hallucinations. No misinformation. Just facts. 🚀 That’s what the Meta CRAG-MM Challenge aims to build. 🔗

aicrowd.com

Improve RAG with Real-World Benchmarks | KDD Cup 2025
