Manos Zaranis

@ManosZaranis

Followers: 103

Following: 194

Media: 12

Statuses: 49

PhD student @istecnico | 2020 Alumni ECE NTUA

Joined March 2018

📂 Code & Data Release. Read the full paper here 👉 🚀 Our code is openly available: 📁 Full Dataset on @huggingface 🤗: MF² is open and ready, now it’s your turn! Can your model truly understand a full.

0

0

5

This project is the result of an amazing collaboration between researchers at @istecnico, @illc_amsterdam, @IRI_robotics, @UNC, @UCPH_Research, Pioneer Center for AI, @HeriotWattUni, Free University of Bozen-Bolzano, @Unbabel, @Ellis_Amsterdam, @Lisbon_ELLIS,

1

2

10

🚀 Big news! Tower+ is here: our strongest open-weight multilingual model yet!

🚀 Tower+, our latest model in the Tower family, sets a new standard for open-weight multilingual models! We show how to go beyond sentence-level translation, striking a balance between translation quality and general multilingual capabilities. 1/5

0

0

5

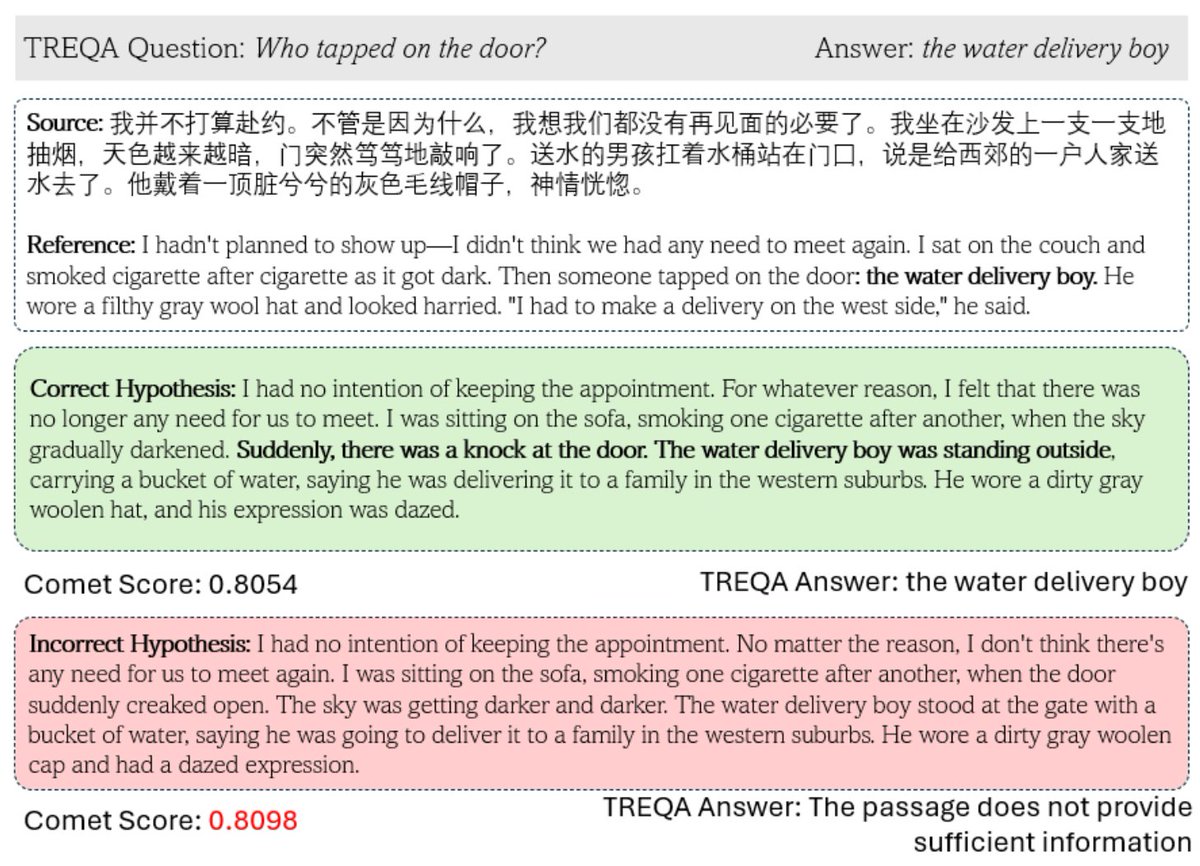

Check out TREQA! TL;DR: We evaluate translation quality of complex content through QA using LLMs.

MT metrics excel at evaluating sentence translations, but struggle with complex texts. We introduce *TREQA*, a framework to assess how translations preserve key info by using LLMs to generate & answer questions about them. (co-lead @swetaagrawal20). 1/15

0

0

1

RT @zmprcp: New paper out 🚀 Zero-shot Benchmarking: A Framework for Flexible and Scalable Automatic Evaluation of Language Models: https://…

0

7

0

RT @NafiseSadat: The position is advertised for 12 months, but it has the possibility of a further 2-year extension.

0

3

0

RT @Saul_Santos1997: 🚀 New paper alert! 🚀 Ever tried asking an AI about a 2-hour movie? Yeah… not great. Check: ∞-Video: A Training-Free…

0

5

0

RT @nunonmg: The second, even better and bigger model is now out: EuroLLM-9B 🇪🇺. Ranks as the best open EU-made LLM of its size, proving co…

0

5

0

RT @zmprcp: We built the best EU-made LLM of its size! It supports all EU languages (and more), and beats Meta's Llama-3.1 on multilingual…

0

2

0