LangWatch

@LangWatchAI

Followers: 617 · Following: 182 · Media: 20 · Statuses: 135

Open Source platform for LLM observability, evaluation and agent https://t.co/CrsnEmal2g ➡️ DSPy Optimizations ➡️ Scenario Agent Simulations

Joined April 2024

LangWatch Launch Week Day 5: Better Agents. Today we are officially releasing Better Agents, a CLI tool and a set of standards for building agents the right way. No, it doesn’t replace your agent framework (Agno, Mastra, LangGraph, etc.); it builds on top of them to push your

Launch Week Day 4: Scenario MCP. Most teams want to test their agents, but writing good tests takes time, so testing gets skipped until something breaks. Today we’re fixing that. Scenario MCP now auto-writes agent tests inside your editor. In @cursor_ai (and other MCP editors)

1

0

0

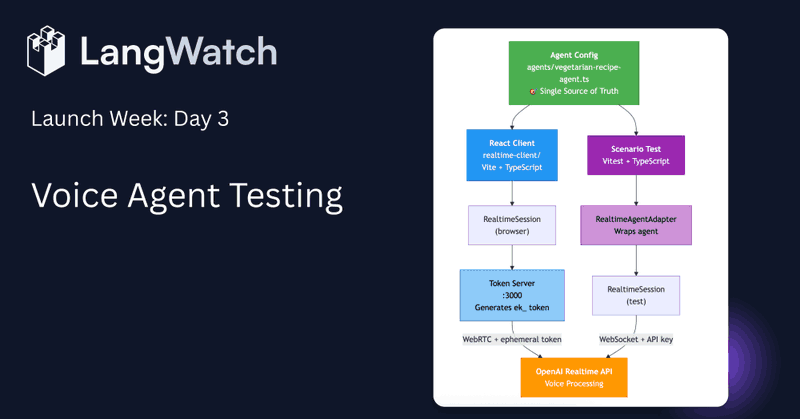

LangWatch Launch Week Day 3: Testing Voice Agents with LangWatch Scenario in Real Time. Today’s launch won’t be a surprise for those who watched our last webinar, but here it is: Scenario can now test your real-time voice agent by having another real-time voice agent simulate the user

Launch Week Day 3 🎤 Voice models made huge leaps this year. But one thing was still missing: a way to test real voice agents automatically, not manually. Today we shipped it. Scenario now supports end-to-end testing for OpenAI Realtime voice agents, real audio, real timing, real

LangWatch Launch Week Day 2: RBAC Custom Roles & Permissions. It takes a village to raise an AI agent to production. You need the AI devs building the agents, the domain experts annotating and validating its quality, the product manager defining the scenarios the agent should

🚀 Day 1 of Launch Week: The new Prompt Playground is live. Write, test, compare, and evaluate prompts using real production context, not synthetic guesses. But here’s the part we're most excited about: - Continue editing directly from an agent simulation - Iterate fast →

Did you know that 95% of enterprise AI agent projects fail to reach production? The reasons: lack of reliability, evaluation discipline, and trust. LangWatch has a CLI toolkit to tackle this problem, Better Agents CLI, and you can now use it in Kilo Code.

2

3

33

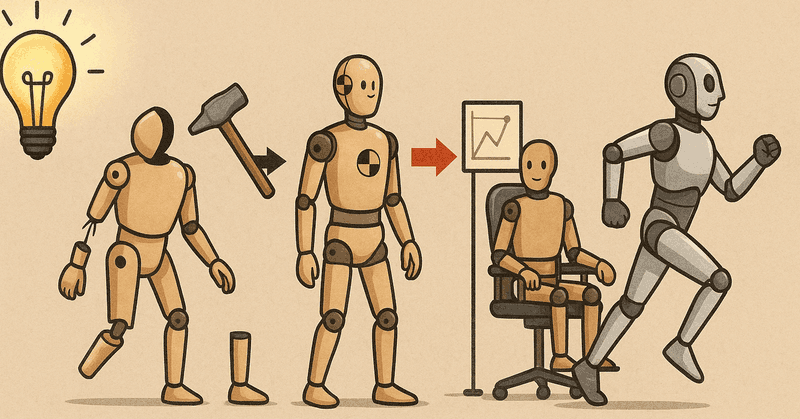

In agent demos, everything’s smooth. In prod? You get messy inputs, long chains, weird edge cases — that’s when things snap. We treat agents like code → write scenario tests first, simulate full workflows, then iterate until green. Think TDD, but for LLMs. More on how we do it

langwatch.ai

Discover Scenario: a domain-driven framework for AI agent testing & LLM evaluations with real-world simulations.

“Do I really need evals?” The real question: how do you know your AI agents will behave in prod? Prototypes don’t need them. Scaling products do. That’s why we built Agent Simulations: unit tests for AI. The only way to know if you can ship reliably. OSS:

github.com

langwatch/scenario: Agentic testing for agentic codebases.

We’re hosting a Meetup in our office in Amsterdam on Sept 18 all about agentic AI. 👀 👀 👀 Talks from: • @_rchaves_ (CTO, LangWatch) → Beyond Unit Tests: why agent simulations are redefining AI agent testing. • Deepak Grewal (Kong) → Agentic AI -> powering the next wave

In Amsterdam and want to spend an evening networking and learning all about agentic AI? Come to our @Meetup with @LangWatchAI on September 18th! RSVP to save your spot > https://t.co/GlXJfuDi3l

The gap between model release hype and production reality is always bigger than it looks. OpenAI’s new GPT-5 headlines focus on the measurable: fewer hallucinations, better reasoning, faster responses. All great gains. But the real story? How it works in your workflows, with

langwatch.ai

OpenAI has released its newest flagship model, GPT-5. Start evaluating its performance within LangWatch, available now.