Kyle🤖🚀🦭

@KyleMorgenstein

Followers: 16K · Following: 196K · Media: 2K · Statuses: 35K

Full of childlike wonder. Teaching robots manners. UT Austin PhD candidate. 🆕 RL Intern @ Apptronik. Past: Boston Dynamics AI Institute, NASA JPL, MIT ‘20.

he/him

Joined September 2018

RT @EugeneVinitsky: If you have the luxury to choose to do good I think you sort of have noblesse oblige to do so. If you can't, who else c…

0

1

0

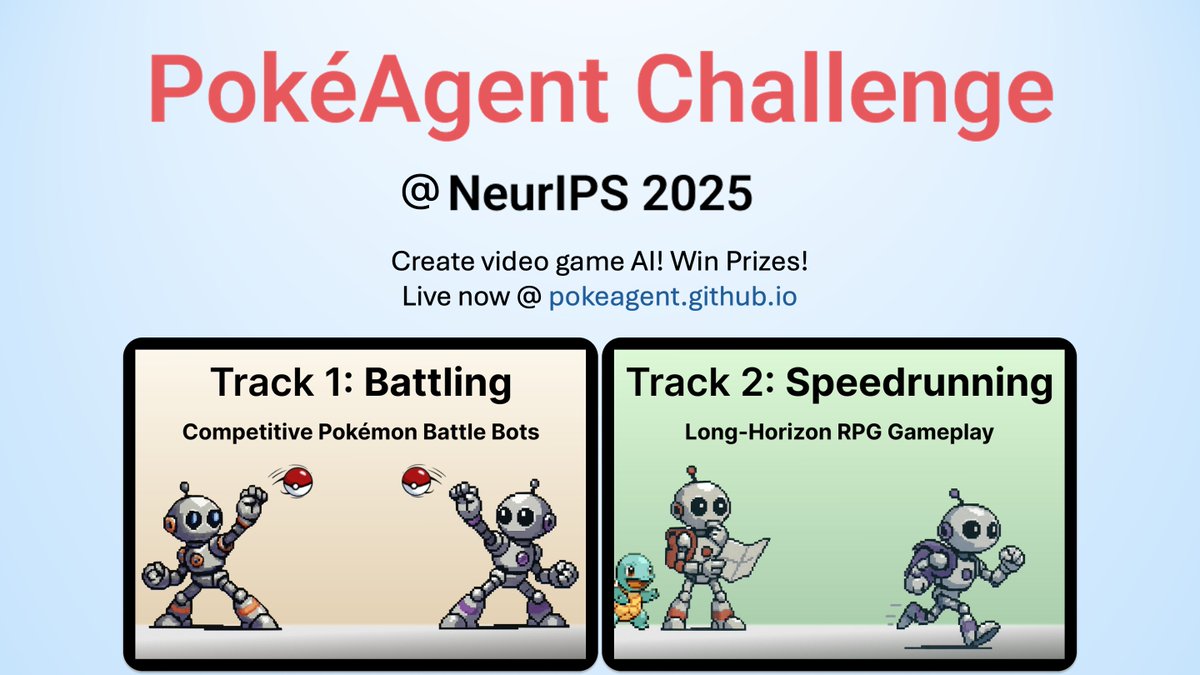

maybe I can finally make my Quagsire-Gastrodon mono-water dreams come true… really excited to see what approaches end up being successful!

🚀 Launch day! The NeurIPS 2025 PokéAgent Challenge is live. Two tracks:
① Showdown Battling – imperfect-info, turn-based strategy
② Pokémon Emerald Speedrunning – long-horizon RPG planning
5M labeled replays • starter kit • baselines. Bring your LLM, RL, or hybrid

I know some software purists disagree, but IsaacLab’s manager-based system is an example of this. If your goal is to get RL working on hardware very fast, IsaacLab exposes all the right knobs without sacrificing the ability to hack it and add extensive customization for your use case.

a nice trend over the last year is that folks in AI have finally produced a few libraries with the right abstractions. finally our code can be both hackable and fast, not just one or the other. this never used to happen. vLLM, sglang, verl. this is the dawn of Good Software in AI.

RT @observie: Pure gold from @KyleMorgenstein. Setting soft PID gains would allow an RL policy to achieve compliance, if needed, and when…

Super cool work! I have some similar methods coming out this fall using tactile estimation for various control tasks :)

We interact with dogs through touch -- a simple pat can communicate trust or instruction. Shouldn't interacting with robot dogs be as intuitive? Most commercial robots lack tactile skins. We present UniTac: a method to sense touch using only existing joint sensors! [1/5]

RT @chris_j_paxton: @gietema Thanks! You can follow @TheHumanoidHub for robotics news. @ChongZitaZhang @KyleMorgenstein post lots of good…

RT @EugeneVinitsky: Personally I would have trouble getting up in the morning if my job was "make sure the bot can be antisemitic" but that…