GPU MODE

@GPU_MODE

Followers: 6K · Following: 203 · Media: 21 · Statuses: 164

Your favorite GPU community

Joined September 2024

Really grateful to @GPU_MODE for the opportunity to talk about my recent Tiny TPU project: 🧵 https://t.co/9hqftJY82z.

btw today at 3pm PST (in ~4 hours) we're having Vicki Wang from NVIDIA giving a @GPU_MODE talk on CuTe DSL, its features, and how to make the most of it. If you're currently competing in the NVFP4 Blackwell competition this will be very helpful, but it's open to anyone!

Training LLMs with NVFP4 is hard because FP4 has so few values that I can fit them all in this post: ±{0, 0.5, 1, 1.5, 2, 3, 4, 6}. But what if I told you that reducing this range even further could actually unlock better training + quantization performance? Introducing Four
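
The eight magnitudes listed in the post above can be reproduced by decoding all 16 four-bit patterns. This is a minimal sketch, assuming the usual E2M1 layout for FP4 elements (1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias 1); the function name `decode_e2m1` is made up for illustration, and the sketch covers only the element encoding, not any scaling applied on top of it.

```python
def decode_e2m1(bits: int) -> float:
    """Decode a 4-bit pattern, assuming E2M1: 1 sign, 2 exponent, 1 mantissa bit, bias 1."""
    sign = -1.0 if (bits >> 3) & 1 else 1.0
    exp = (bits >> 1) & 0b11
    man = bits & 1
    if exp == 0:
        # Subnormal: no implicit leading 1, so the only values are 0 and 0.5.
        magnitude = man * 0.5
    else:
        # Normal: implicit leading 1, mantissa bit adds 0.5, exponent bias is 1.
        magnitude = (1.0 + man * 0.5) * 2.0 ** (exp - 1)
    return sign * magnitude


# Collect the distinct magnitudes across all 16 bit patterns.
magnitudes = sorted({abs(decode_e2m1(b)) for b in range(16)})
print(magnitudes)
```

Each magnitude appears twice, once per sign bit, which is why 16 bit patterns yield only 8 distinct magnitudes.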

Sign up! https://t.co/J6umUfPeia we're at over 1,300 registrations and still going strong!

luma.com

Overview: Join the Developer Kernel Hackathon, a four-part performance challenge hosted by NVIDIA in collaboration with GPU MODE. This event invites developers…

▓▓▓░░░░░░░░░ 25% We just concluded the GEMV problem for the Blackwell NVFP4 competition. And we've started on a new GEMM problem. You can still sign up and be eligible for prizes per problem and the grand prize. glhf!

CuteDSL 4.3.1 is here 🚀 Major host overhead optimization (10-40µs down to 2µs in hot loops), streamlined PyTorch interop (pass torch.Tensors directly, no more conversions needed), and export and use in more languages and envs. All powered by the Apache tvm-ffi ABI

Today we are releasing our first public beta of Nsight Python! The goal is to simplify the life of a Python developer by providing a Pythonic way to analyze your kernel code! Check it out and provide feedback! Nsight Python — nsight-python

My Triton version for the NVFP4 gemv kernel competition @GPU_MODE 🧵 https://t.co/4u3hAFIlpS

AI has been built on one vendor’s stack for too long. AMD’s GPUs now offer state-of-the-art peak compute and memory bandwidth — but the lack of mature software / the “CUDA moat” keeps that power locked away. Time to break it and ride into our multi-silicon future. 🌊 It's been a

1,000 registrations so far!

Ready, Set, Go! 🏎️ Create something amazing at our Blackwell NVFP4 Kernel Hackathon with @GPU_MODE. 🎊 🏆 Compete in a 4-part performance challenge to optimize low-level kernels on NVIDIA Blackwell hardware. 🥇 3 winners per challenge will receive top-tier NVIDIA hardware.

updates to https://t.co/asKuaIFf5P. working on the runtime and eager kernels now. picograd is taking longer than other "hobby" autograds i've seen. but our plan is to be the *definitive* resource on building your own pytorch. we agree with @karpathy that course building is a

The most intuitive explanation of floats I've ever come across, courtesy of @fabynou

https://t.co/XNiZNZTNlf

Surprising properties of low-precision floating point numbers are in the news again! These numerical formats are ubiquitous in large NNs but new to most programmers. So I worked with @klyap_ last week to put together this little visualizer: https://t.co/ntHlazyDmK.

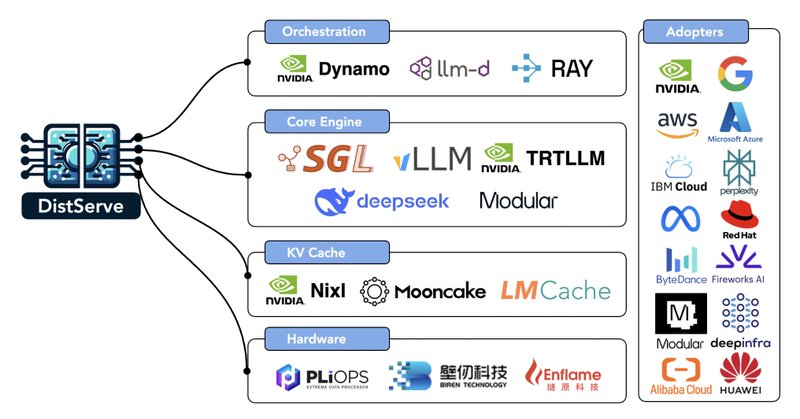

🔥 New Blog: “Disaggregated Inference: 18 Months Later” 18 months in LLM inference feels like a new Moore’s Law cycle – but this time not just 2x per year: 💸 Serving cost ↓10–100x 🚀 Throughput ↑10x ⚡ Latency ↓5x A big reason? Disaggregated Inference. From DistServe, our

hao-ai-lab.github.io

Eighteen months ago, our lab introduced DistServe with a simple bet: split LLM inference into prefill and decode, and scale them independently on separate compute pools. Today, almost every product...

If you are a student and you want a career, follow @GPU_MODE, attend events, and do the competitions. You WILL be employable.

It's that time of the year again and we're coming with another @GPU_MODE competition! This time in collaboration with @nvidia, focused on NVFP4 and B200 GPUs (thanks to @sestercegroup ). We'll release 4 problems over the following 3 months: 1. NVFP4 Batched GEMV
