Ling-Hao (Evan) CHEN

@Evan_THU

Followers

350

Following

736

Media

56

Statuses

556

Ph.D. student @Tsinghua_Uni, Intern @IDEACVR, Vision, Graphics, and Motion Synthesis! ex: @XDUofChina

Hong Kong, P.R. China

Joined February 2022

At @SIGGRAPHAsia 2025, we introduce Motion2Motion, a lightweight motion retargeting (transfer) system. 🔥Beyond deep learning, Motion2Motion is a motion-matching-based system that runs on CPU-only devices in REAL time. See more at https://t.co/8ONOhywta5

#SIGGRAPH

1

3

11

🌟Motion2Motion supports diverse applications: SMPL-to-any character retargeting, cross-species motion transfer, key-frame transfer... A Motion2Motion Blender add-on has also been developed for industrial use.

0

0

0

🚨SIGGRAPH Asia 2025 Paper Alert 🚨 ➡️Paper Title: Motion2Motion: Cross-topology Motion Transfer with Sparse Correspondence 🌟Few pointers from the paper 🎯This work studies the challenge of transferring animations between characters whose skeletal topologies differ substantially.

0

4

18

Happy birthday to the finest father, whose grace in triumph, indefatigable strength in adversity, and quiet courage to rise above the fray continue to guide me every day!

732

100

2K

@docmilanfar Computer vision was driven by machine learning long before 2012. What happened with AlexNet was a shift to deep neural networks as the dominant form of ML. And, with this, came the focus on training data at scale. But this didn't just transform CV. It transformed everything.

4

3

71

#ICML2025

https://t.co/aM98fshdBh We surveyed 100+ courses across 19 departments at Tsinghua University. With expert and model filtering, we curated a graduate-level, Olympiad-difficulty, multi-disciplinary benchmark R-Bench. Even GPT-4o struggles (33.4% on multimodal)!

1

5

19

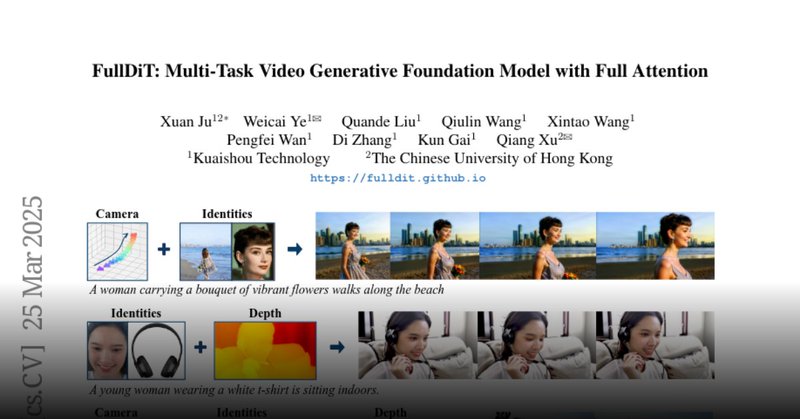

🥳Excited to share our work #FullDiT: Multi-Task Video Generative Foundation Model with Full Attention https://t.co/aExynj80Hn, a unified foundation model for video generation that seamlessly integrates multiple conditions via unified full-attention mechanisms.

0

8

60

A concise and comprehensive survey of 2D, 3D, and 4D generation.

0

16

89

Lost in various AI generations? Check out this survey, the first attempt to systematically unify the study of 2D, 2D+Time (video), 3D, and 3D+Time (4D) generation within a single framework. Arxiv: https://t.co/MEkMO4bUbm All relevant links: https://t.co/vqj104c5Ax Simulating the

0

2

8

thanks for sharing our work❤️

🚨CVPR 2025 Paper Alert 🚨 ➡️Paper Title: HumanMM: Global Human Motion Recovery from Multi-shot Videos 🌟Few pointers from the paper 🎯In this paper, the authors present a novel framework designed to reconstruct long-sequence 3D human motion in world coordinates from

1

0

5

Project: https://t.co/hV20cNQufz Paper: https://t.co/qN0efxaIOB Demo: https://t.co/IkPGUMY11x ❤️Work mainly done by @YuhongZhan31576 and @GuanlinWu0930 during their internship at @IDEACVR. Happy to have contributed to this project together with all coauthors. #pingpong #Olympics #cvpr

0

0

3

🚩 Lifting from 2D to video, to 3D, to 4D. This is the latest survey on world simulators.

🚀 How can we bridge the gap between 2D, video, 3D, and 4D generation for real-world simulation? Our new survey presents the first unified framework for multimodal generative models! ✨ GitHub: https://t.co/KT0DZkmsrg arXiv: https://t.co/Zvx3V5Op4S

#GenerativeAI #survey

0

0

3

incredible

0

0

1

Interested in: ✅Humanoids mastering scalable motor skills for everyday interactions ✅Whole-body loco-manipulation w/ diverse tasks and objects ✅Physically plausible HOI animation Meet InterMimic #CVPR2025 ArXiv: https://t.co/GvsfJ0y8b2 Project: https://t.co/6onZLPp7bx 🧵[1/9]

13

46

266

Input a video to generate 3D dance animations in real time and connect to UE5 for anime-style animation output. The free version 1.6.2 is now available!

5

32

207