Elana Simon

@ElanaPearl

Followers: 3K · Following: 998 · Media: 15 · Statuses: 68

exploring the inner workings of bio ML models @StanfordBiosci

Joined October 2013

Beautiful technical debugging detective longread that starts with a suspicious loss curve and ends all the way in the Objective-C++ depths of PyTorch MPS backend of addcmul_ that silently fails on non-contiguous output tensors. I wonder how long before an LLM can do all of this.

New blog post: The bug that taught me more about PyTorch than years of using it started with a simple training loss plateau... ended up digging through optimizer states, memory layouts, kernel dispatch, and finally understanding how PyTorch works!

https://t.co/aOWYsXdYRs it's a debugging detective story where you follow the reasoning behind each step and solve it as we go. it also explains ML & PyTorch concepts as they become necessary to understand what's breaking, why, and how to fix it 🔎

elanapearl.github.io

a loss plateau that looked like my mistake turned out to be a PyTorch bug. tracking it down meant peeling back every layer of abstraction, from optimizer internals to GPU kernels.
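
For readers unfamiliar with the term, here is a toy sketch in plain Python of what "non-contiguous output tensor" means and how an in-place update like addcmul_ could silently do nothing. It does not use PyTorch and is not the MPS backend code. The names View, addcmul_correct, and addcmul_lost_write are hypothetical, and the "result lands in a temporary copy that is never written back" failure mode is an assumption made for illustration.

```python
class View:
    """A 2-D view over a flat buffer, described by a shape and strides."""

    def __init__(self, buf, shape, strides):
        self.buf = buf
        self.shape = shape
        self.strides = strides

    def offset(self, i, j):
        # Position of element (i, j) in the underlying flat buffer.
        return i * self.strides[0] + j * self.strides[1]

    def is_contiguous(self):
        # Row-major contiguous: stepping one column moves one slot,
        # stepping one row moves a full row of slots.
        return self.strides == (self.shape[1], 1)

    def transpose(self):
        # Same buffer with swapped shape and strides; no data is moved.
        return View(self.buf, self.shape[::-1], self.strides[::-1])

    def tolist(self):
        rows, cols = self.shape
        return [[self.buf[self.offset(i, j)] for j in range(cols)]
                for i in range(rows)]


def addcmul_correct(out, t1, t2, value):
    """out += value * t1 * t2, writing through out's strides."""
    rows, cols = out.shape
    for i in range(rows):
        for j in range(cols):
            out.buf[out.offset(i, j)] += value * t1[i][j] * t2[i][j]


def addcmul_lost_write(out, t1, t2, value):
    """Assumed failure mode: work on a contiguous copy, skip the copy-back."""
    rows, cols = out.shape
    tmp = out.tolist()  # fresh contiguous copy of out's values
    for i in range(rows):
        for j in range(cols):
            tmp[i][j] += value * t1[i][j] * t2[i][j]
    if out.is_contiguous():
        for i in range(rows):
            for j in range(cols):
                out.buf[out.offset(i, j)] = tmp[i][j]
    # Non-contiguous case: tmp is discarded, out is untouched, no error raised.


base = View([0.0] * 6, (2, 3), (3, 1))  # contiguous 2x3
out = base.transpose()                  # 3x2 with strides (1, 3)
ones = [[1.0, 1.0] for _ in range(3)]
twos = [[2.0, 2.0] for _ in range(3)]

print(base.is_contiguous(), out.is_contiguous())
addcmul_lost_write(out, ones, twos, 0.5)
print(out.tolist())  # still all zeros: the update was silently dropped
addcmul_correct(out, ones, twos, 0.5)
print(out.tolist())  # every element now holds 0.5 * 1.0 * 2.0
```

In this toy model the transposed view shares its buffer with the original, so only a write that goes through the view's strides actually changes the stored values.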

New paper! We reverse engineered the mechanisms underlying Claude Haiku’s ability to perform a simple “perceptual” task. We discover beautiful feature families and manifolds, clean geometric transformations, and distributed attention algorithms!

Published! 🎉 Paper now has more feature analysis and higher quality figures - thanks to great reviewer feedback! Code also got a major upgrade - v1.0.0 is way more modular so you can easily swap in different protein embeddings or SAE architectures:

github.com

Discovering Interpretable Features in Protein Language Models via Sparse Autoencoders - ElanaPearl/InterPLM

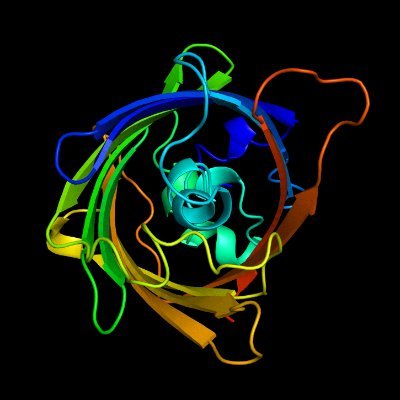

How do protein language models (PLM) think about proteins?🧬 We answer this w/ #InterPLM, just published in @naturemethods! Using sparse autoencoders + LLM agent, we identify 1000s of interpretable concepts learned by PLMs, pointing to new biology 🧵

(4/8) @jack_merullo @RajuSrihita @_MichaelPearce @ElanaPearl examined spikes in the curvature of the loss WRT each input embedding to try and understand memorized sequences:

Could we tell if gpt-oss was memorizing its training data? I.e., points where it’s reasoning vs reciting? We took a quick look at the curvature of the loss landscape of the 20B model to understand memorization and what’s happening internally during reasoning

New research update! We replicated @AnthropicAI's circuit tracing methods to test if they can recover a known, simple transformer mechanism.

if you really understand a neural network you should be able to explain and edit anything in the model by directly manipulating the activation tensor. we made a demo of this with diffusion models

We created a canvas that plugs into an image model’s brain. You can use it to generate images in real-time by painting with the latent concepts the model has learned. Try out Paint with Ember for yourself 👇

New research project: Lluminate - an evolutionary algorithm that helps LLMs break free from generating predictable, similar outputs. Combining evolutionary principles with creative thinking strategies can illuminate the space of possibilities. https://t.co/lNdFtvjlcm

A First Step Towards Interpretable Protein Structure Prediction With SAEFold, we enable mechanistic interpretability on ESMFold, a protein structure prediction model, for the first time. Watch @NithinParsan demo a case study here w/ links for paper & open-source code 👇

Another awesome example of SAEs uncovering interpretable concepts in bio ML models - from DNA frameshift mutations to CRISPR arrays, prophages, protein secondary structures, and genomic organization features!!

(5/) Our analysis revealed that Evo 2 has learned to recognize key biological elements, including: - Coding sequences (the parts of DNA that contain instructions for building proteins) - α-helices and β-sheets (common shapes that proteins fold into) - tRNAs (molecules that help

Super cool analysis of ESM SAE features!! • Showed that these features can create interpretable linear predictors of protein properties (e.g., thermostability, localization) • Quantified how feature types vary across layers, helping to explain layer-specific probe quality

Can we learn protein biology from a language model? In new work led by @liambai21 and me, we explore how sparse autoencoders can help us understand biology—going from mechanistic interpretability to mechanistic biology.

We propose a novel causal inference method to measure biases in clinical decisions in large medical datasets, and our results highlight real-world examples of known implicit biases. Presented originally at PSB 2025, and full version can be found here:

You can now download the sparse autoencoders from InterPLM via HuggingFace 🤗 This includes 6 additional SAEs trained on ESM-2-650M which find >1.7x more concepts than previously found in ESM-2-8M (more details in the updated preprint) https://t.co/4ooK2d6CSU

Next Tuesday, 1/21 @ 4 pm EST, @ElanaPearl will present "InterPLM: Discovering Interpretable Features in Protein Language Models via Sparse Autoencoders" Paper: https://t.co/jgZC2Yiit9 Sign up on our website to receive Zoom links!

biorxiv.org

Protein language models (PLMs) have demonstrated remarkable success in protein modeling and design, yet their internal mechanisms for predicting structure and function remain poorly understood. Here...

📢 Excited that #unitox is selected as a #NeurIPS2024 spotlight!💡 We created an #LLM agent to analyze >100K pages of FDA docs from all approved drugs ➡️ new database annotating 8 toxicity types for 2400 drugs. Validated by clinicians. https://t.co/4aEZsGKUT5 Data

Visualizing transformer model attention in the UCSC genome browser (🧵). I've been exploring how DNA sequence might influence genome organization in the nucleus using transformer models. Started by pretraining a model on reference genomes from multiple species 1/7

How are AI Assistants being used in the real world? Our new research shows how to answer this question in a privacy preserving way, automatically identifying trends in Claude usage across the world. 1/

🛠️ Want to analyze your own protein models? (8/9) - Code: https://t.co/W8D7Sa9acs - Full framework for PLM interpretation - Methods for training, analysis, and visualization

github.com

Discovering Interpretable Features in Protein Language Models via Sparse Autoencoders - ElanaPearl/InterPLM
