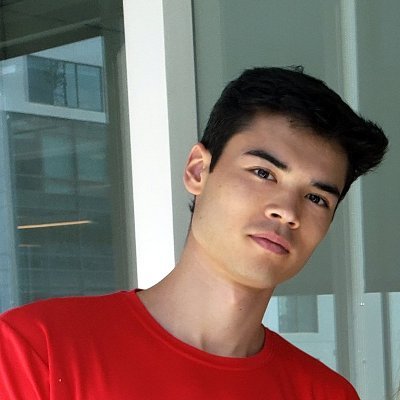

Cyrille Combettes

@CyrilleCmbt

Followers: 75 · Following: 163 · Media: 2 · Statuses: 23

Quantitative researcher @CFM_AM · Ph.D. @MLatGT · Optimization & machine learning

Joined March 2020

Is it possible to find better descent directions for Frank-Wolfe while remaining projection-free? We propose an intuitive and generic boosting procedure to speed up FW and variants, accepted at ICML! Check out the presentation of our paper
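
For readers new to Frank-Wolfe, here is a minimal sketch of the vanilla method that any boosting procedure would build on. It is not the boosted variant from the paper. It assumes the feasible set is the probability simplex and the objective is a least-squares loss, both chosen purely for illustration. "Projection-free" means each iteration calls a linear minimization oracle (here `lmo_simplex`, a hypothetical helper name) instead of computing a projection. The step size 2/(t+2) is the standard open-loop choice.

```python
import numpy as np

def lmo_simplex(grad):
    """Linear minimization oracle over the probability simplex:
    argmin_{v in simplex} <grad, v> is the vertex e_i with i = argmin grad_i."""
    v = np.zeros_like(grad)
    v[np.argmin(grad)] = 1.0
    return v

def frank_wolfe(grad_f, x0, n_iters=200):
    """Vanilla Frank-Wolfe with the open-loop step size 2 / (t + 2).
    Each iterate is a convex combination of x0 and LMO vertices, so it
    stays feasible without ever computing a projection."""
    x = x0.copy()
    for t in range(n_iters):
        g = grad_f(x)
        v = lmo_simplex(g)                # one LMO call per iteration
        gamma = 2.0 / (t + 2.0)
        x = (1.0 - gamma) * x + gamma * v
    return x

# Illustrative least-squares problem (random data, demonstration only).
rng = np.random.default_rng(0)
A = rng.standard_normal((20, 10))
b = rng.standard_normal(20)
grad_f = lambda z: A.T @ (A @ z - b)

x0 = np.zeros(10)
x0[0] = 1.0                               # start at a vertex of the simplex
x = frank_wolfe(grad_f, x0)
print(x.sum(), x.min() >= 0)              # the iterate remains in the simplex
```

Swapping in another feasible set only requires a different oracle. The update rule itself never changes.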

The greatest man you've never heard of died this week on Wednesday, September 6th. Marcel Boiteux built the French nuclear fleet as head of national utility EdF, making superb, far-sighted decisions against powerful entrenched interests. Decisions such as abandoning the

Carlini got fed up breaking every adversarial defense that gets published (rightfully so), and is now using GPT-4 to automate it. This is a legit statement paper, with transparency on methods, that should be read as a criticism of the related literature https://t.co/FtjMNBg5uc

Looking for a post-doctoral candidate in optimization at Toulouse School of Economics (Toulouse, France). Funded by U.S. Air Force, renewable once, supervised by E. Pauwels and myself. Topic: deep learning, large scale optimization

Why Beauty Matters (and how it has been destroyed by "usability") A short thread...

@gabrielpeyre That self-concordant barrier-based interior-point methods cannot be strongly polynomial has been shown recently: https://t.co/HQb6DsTW0T Nevertheless, fantastic work by NN. Thanks to @HAFriberg for the link.

There's no evidence that SGD plays a fundamental role in generalization. With totally deterministic full-batch gradient descent, Resnet18 still gets >95% accuracy on CIFAR10. With data augmentation, full-batch Resnet152 gets 96.76%. https://t.co/iwIqQd7U1O

ML model saves 90% on bandwidth during video-calls by using just an image of your face & some basic motion data. Paper: https://t.co/AK5zxCRl9L (v/@nvidia) Video: https://t.co/kuTiC4QoNQ (v/@twominutepapers) #CVPR2021 #Compression #DeepFakes #KindOf

A review of the complexity bounds of linear minimizations and projections on several sets: https://t.co/yl5R7N9oGD w/ @spokutta The Frank-Wolfe audience was clearly in mind, hopefully it is also of interest to the broader optimization community!
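
To make the LMO-versus-projection contrast concrete, here is a sketch on two standard sets chosen for illustration. These are not necessarily the sets or bounds covered in the linked review, and the function names are hypothetical. The linear minimization oracle over an ℓ1 ball needs only one argmax over |grad|, which is O(n) work. The classic Euclidean projection onto the probability simplex sorts its input, which is O(n log n).

```python
import numpy as np

def lmo_l1_ball(grad, radius=1.0):
    """argmin_{||v||_1 <= radius} <grad, v>: a signed, scaled basis vector.
    Only the index of the largest |grad_i| is needed, i.e. O(n) work."""
    i = np.argmax(np.abs(grad))
    v = np.zeros_like(grad, dtype=float)
    v[i] = -radius * np.sign(grad[i])
    return v

def project_simplex(y):
    """Euclidean projection onto the probability simplex via the classic
    sort-and-threshold method: O(n log n) because of the sort."""
    u = np.sort(y)[::-1]                      # sort in decreasing order
    css = np.cumsum(u) - 1.0                  # partial sums minus the simplex budget
    idx = np.arange(1, y.size + 1)
    rho = np.nonzero(u - css / idx > 0)[0][-1]
    theta = css[rho] / (rho + 1.0)            # shift that makes the result sum to 1
    return np.maximum(y - theta, 0.0)

p = project_simplex(np.array([0.5, 1.2, -0.3]))
v = lmo_l1_ball(np.array([0.3, -2.0, 1.0]), radius=2.0)
print(p, v)
```

Here `p` is `[0.15, 0.85, 0.]` and `v` is `[0., 2., 0.]`. The oracle picks a single coordinate, while the projection has to inspect the sorted order of all entries.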

Short informal summary of our recent paper "Projection-Free Adaptive Gradients for Large-Scale Optimization" with @CyrilleCmbt and Christoph Spiegel on whether one can combine Frank-Wolfe with adaptive gradients: yes, you can. https://t.co/xWppgZ6dcB

#ml #opt #frankwolfe
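
One way such a combination can look is sketched below. It assumes an AdaGrad-style recipe, which is an assumption and not necessarily the algorithm in the paper. The method keeps a diagonal scaling H built from accumulated squared stochastic gradients. At the point where an adaptive method would project, it instead approximately minimizes the scaled quadratic model `<g, y - x> + (y - x)^T H (y - x) / (2 * eta)` over the feasible set using a few Frank-Wolfe steps. The simplex, `eta`, `inner_iters`, `delta` and the noisy least-squares gradient are all illustrative, hypothetical choices.

```python
import numpy as np

def lmo_simplex(grad):
    """argmin over the probability simplex of <grad, v>: a vertex e_i."""
    v = np.zeros_like(grad)
    v[np.argmin(grad)] = 1.0
    return v

def adaptive_fw(stoch_grad, x0, n_iters=100, inner_iters=5, eta=0.5, delta=1e-8):
    """Hypothetical AdaGrad-style Frank-Wolfe: the projection step of an
    adaptive method is replaced by a few FW steps on the scaled quadratic
    model Q(y) = <g, y - x> + (y - x)^T H (y - x) / (2 * eta)."""
    x = x0.copy()
    sq_sum = np.zeros_like(x)
    for _ in range(n_iters):
        g = stoch_grad(x)
        sq_sum += g * g
        h = np.sqrt(sq_sum) + delta              # diagonal of H (AdaGrad-style)
        y = x.copy()
        for _ in range(inner_iters):
            q_grad = g + h * (y - x) / eta       # gradient of Q at y
            d = lmo_simplex(q_grad) - y          # FW direction for Q
            curv = np.dot(d, h * d) / eta        # d^T H d / eta
            if curv <= 0.0:                      # d == 0: y already minimizes Q over the simplex
                break
            # exact line search on the quadratic Q, clipped to [0, 1]
            gamma = np.clip(-np.dot(q_grad, d) / curv, 0.0, 1.0)
            y = y + gamma * d
        x = y                                    # y is still in the simplex
    return x

rng = np.random.default_rng(2)
A = rng.standard_normal((20, 8))
b = rng.standard_normal(20)
# noisy least-squares gradient standing in for a stochastic gradient
stoch_grad = lambda z: A.T @ (A @ z - b) + 0.1 * rng.standard_normal(8)

x0 = np.zeros(8)
x0[0] = 1.0
x = adaptive_fw(stoch_grad, x0)
```

Because the inner loop only calls the oracle, the adaptive scaling never requires a (scaled) projection onto the feasible set. That is the expensive step a projection-free method avoids.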

The lottery ticket hypothesis 🎲 states that sparse nets can be trained given the right initialisation 🧬. Since the original paper (@jefrankle & @mcarbin) a lot has happened. Check out my blog post for an overview of recent developments & open Qs. ✍️: https://t.co/R46SCEYf8p

Every once in a while a paper comes out that makes you breathe a sigh of relief that you don't publish in that field... https://t.co/56heAufhGA "Our results show that when hyperparameters are properly tuned via cross-validation, most methods perform similarly to one another"

Interesting paper showing that separating the architecture of a neural network into individual networks for each feature can still be very competitive while, most importantly, offering interpretability

my new paper with @rishabh_467 @geoffreyhinton, Rich Caruana and Xuezhou Zhang is out on arxiv today! It’s about interpretability and neural additive models. Don't have time to read the paper? Read this tweet thread instead :) https://t.co/eGPsL55v8G 1/7

A fun read studying the impact of *fine-tuning random seeds* in BERT https://t.co/TWbxspJbq8 A familiar hyperparameter, some would argue.

Your SOTA code may only be SOTA for some random seeds. Nonsense or new reality? I suppose there are trivial ways to close the gap using restarts and validation data. https://t.co/nhuJrOlqgs

"Behind every great theorem lies a great inequality" This inequalities cheat sheet has been really helpful to keep on hand while working - passing it along in case others find it useful https://t.co/MzWhElyksV

https://t.co/VxTE4sUzKC

Glad to share our new paper "Boosting Frank-Wolfe by Chasing Gradients" with @spokutta We propose to speed up FW by moving in directions better aligned with the gradients. Turns out the progress obtained overcomes the cost of multiple oracle calls!
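
Below is a simplified sketch of the gradient-chasing idea, written under assumptions and not taken from the paper's algorithm. It uses matching-pursuit-style rounds. Each round calls the LMO on the residual between -grad f(x) and the current direction d, then adds the resulting FW direction with a nonnegative coefficient. The rounds stop once the alignment (cosine similarity) with -grad f(x) stops improving by `tol`. Dividing d by the sum Λ of the coefficients makes x + d/Λ a convex combination of vertices, so the step stays projection-free. The simplex, the least-squares objective, the exact line search, `max_rounds` and `tol` are illustrative choices. The paper's procedure may differ in its details.

```python
import numpy as np

def lmo_simplex_max(direction):
    """Vertex of the probability simplex maximizing <direction, v>."""
    v = np.zeros_like(direction)
    v[np.argmax(direction)] = 1.0
    return v

def align(a, b):
    """Cosine similarity between a and b (0 if either is zero)."""
    na, nb = np.linalg.norm(a), np.linalg.norm(b)
    return 0.0 if na == 0 or nb == 0 else float(np.dot(a, b) / (na * nb))

def chased_direction(grad, x, max_rounds=10, tol=1e-3):
    """Build d as a nonnegative combination of FW directions (v_k - x) while
    its alignment with -grad keeps improving; return g = d / Lambda, so that
    x + g is a convex combination of vertices and hence feasible."""
    target = -grad
    d = np.zeros_like(x)
    lam_total = 0.0
    for _ in range(max_rounds):
        r = target - d                       # part of -grad not yet matched
        u = lmo_simplex_max(r) - x           # one LMO call per round
        uu = np.dot(u, u)
        if uu == 0.0:
            break
        lam = np.dot(r, u) / uu              # matching-pursuit coefficient, >= 0
        d_new = d + lam * u
        if lam_total > 0 and align(target, d_new) - align(target, d) < tol:
            break                            # extra oracle calls no longer pay off
        d, lam_total = d_new, lam_total + lam
    if lam_total == 0.0:
        return lmo_simplex_max(target) - x   # fall back to the vanilla FW direction
    return d / lam_total

def boosted_fw(A, b, x0, n_iters=50):
    """Minimize 0.5 * ||A x - b||^2 over the simplex along chased directions."""
    x = x0.copy()
    for _ in range(n_iters):
        grad = A.T @ (A @ x - b)
        g = chased_direction(grad, x)
        Ag = A @ g
        denom = np.dot(Ag, Ag)
        if denom == 0.0:
            break
        # exact line search for least squares, clipped to [0, 1] for feasibility
        gamma = np.clip(-np.dot(grad, g) / denom, 0.0, 1.0)
        x = x + gamma * g
    return x

rng = np.random.default_rng(1)
A = rng.standard_normal((30, 15))
b = rng.standard_normal(30)
f = lambda z: 0.5 * np.sum((A @ z - b) ** 2)
x0 = np.zeros(15)
x0[0] = 1.0
x = boosted_fw(A, b, x0)
```

Each outer iteration may spend several LMO calls. The bet described in the tweet is that the better-aligned direction makes enough progress to pay for them.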

Just about everybody seems to be working under the hypothesis that those who recover from the virus are permanently in the SAME condition as those who never got it.

First Lightning Talk by Cyrille W Combettes @FieldsInstitute talking about approximate Carathéodory
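
The link between Frank-Wolfe and approximate Carathéodory can be illustrated with a short sketch. This is an illustration under stated assumptions, not the content of the talk. Suppose a target point lies in the convex hull of the columns of V, and we want to approximate it by a sparse convex combination of those columns. We run Frank-Wolfe on 0.5 * ||V lam - target||^2 over the simplex. Each iteration adds at most one new vertex. The standard Frank-Wolfe rate gives ||V lam - target||^2 <= 4 D^2 / (k + 2) after k iterations, where D is the diameter of the point set. The random data and the name `approx_caratheodory` are hypothetical.

```python
import numpy as np

def approx_caratheodory(V, target, n_iters):
    """Frank-Wolfe on f(lam) = 0.5 * ||V @ lam - target||^2 over the simplex,
    with step size 2 / (t + 2). The first step (gamma = 1) jumps to a single
    vertex and each later step adds at most one, so after n_iters >= 1
    iterations lam has at most n_iters nonzero entries."""
    m = V.shape[1]
    lam = np.zeros(m)
    lam[0] = 1.0
    for t in range(n_iters):
        grad = V.T @ (V @ lam - target)
        i = np.argmin(grad)               # LMO over the simplex: best vertex
        gamma = 2.0 / (t + 2.0)
        lam *= (1.0 - gamma)
        lam[i] += gamma
    return lam

rng = np.random.default_rng(3)
V = rng.standard_normal((5, 200))           # 200 points in R^5 (illustrative)
target = V @ rng.dirichlet(np.ones(200))    # a point inside their convex hull
k = 20
lam = approx_caratheodory(V, target, k)
err2 = float(np.sum((V @ lam - target) ** 2))
sq_dists = np.sum((V[:, :, None] - V[:, None, :]) ** 2, axis=0)
bound = 4.0 * sq_dists.max() / (k + 2)      # 4 D^2 / (k + 2)
print(np.count_nonzero(lam), err2, bound)
```

The trade-off is explicit. A target accuracy of ε in squared error needs on the order of D^2 / ε iterations, and therefore at most that many vertices, independently of the ambient dimension.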
