Bertrand Charpentier

@Bertrand_Charp

Followers

539

Following

711

Media

37

Statuses

324

Founder, President & Chief Scientist @PrunaAI | Prev. @Twitter research, Ph.D. in ML @TU_Muenchen @bertrand-sharp.bsky.social @[email protected]

Joined January 2019

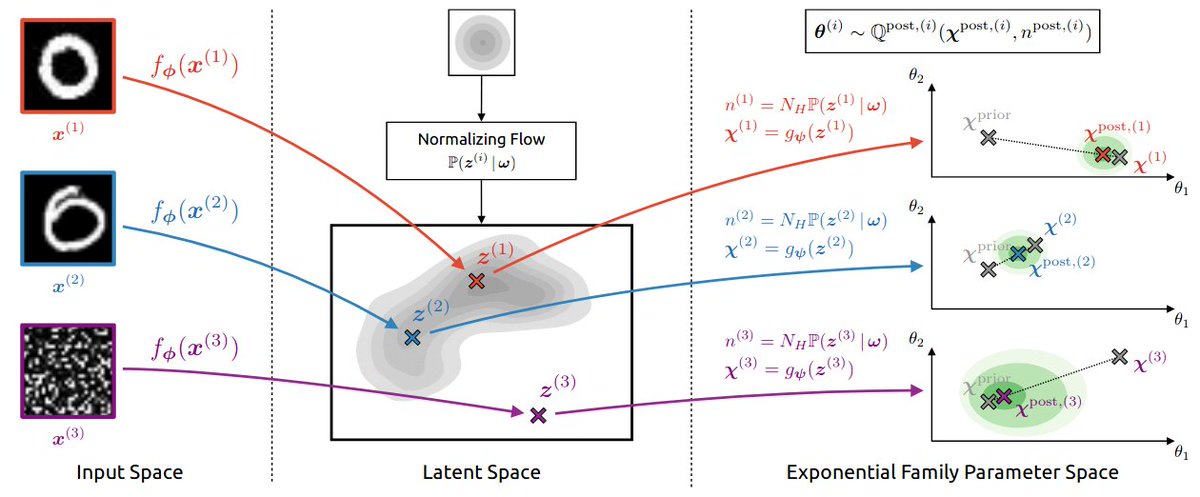

Happy to announce NatPN at #ICLR2022 (Spotlight) ! . - It predicts uncertainty for many supervised tasks like classification & regression. - It guarantees high uncertainty for far OOD. - It only needs one forward pass at testing time. - It does not need OOD data for training.

1

45

200

After releasing a bunch of models optimized with @PrunaAI on @replicate, we received a lot of cool reactions. - People did not believe it was possible.- People want more.- People like the fast speed and low price.- People use them in their products. 💜This means a lot for the

1

2

16

RT @PrunaAI: 🧑🏫 AI Efficiency Fundamentals - Week 5: Fine-tuning. Most people know LoRA but these don’t work for prune-retraining. Do you….

0

2

0

We are now close to real-time video generation: 5s video generated in 5s. Try it here:

replicate.com

The fastest Wan 2.2 text-to-image and image-to-video model

@cb_doge 10 days ago, the 6 sec video took 60 secs to render, then 45 secs, then 30, now 15. We might be able to get it below 12 secs this week. This is with no reduction in visual quality. We’re working on a major upgrade to the audio track. I think real-time video rendering is.

0

2

4

How much energy is required to fuel the hardware to generate one video with Grok Imagine?. Speed can be tricked with more hardware, energy is more reliable efficieny measure, which directly impacts money (and environmental) costs.

Grok Imagine is now making *videos* in 1/2 to 1/4 the time that major competitors take to make a single image!.

0

0

2

RT @PrunaAI: Qwen-Image 3x faster on @replicate. The new Qween of open source is here and we can't wait to see what you build with it 🤩. Mo….

0

6

0

After Wan 2.1 Image, we release Wan 2.2 Image: it is faster and cheaper!.

📸 Introducing Wan 2.2 Image: Generate 2-megapixel images for $0.02 in 3.1s only! . • Accessible on @replicate: - -.• Check details, examples, and benchmarks in our blog: - -.• Use

0

0

3

RT @PrunaAI: ⚡️ The hype is real, generate 5s SOTA videos at $0.06 per video with Wan 2.2 Juiced! . We just optimized the Wan 2.2 video mod….

0

8

0

In the past months, we smashed all the state-of-the-art image and video models to make them x2-x10 faster!. - All models from @bfl_ml: Flux-kontext, Flux-dev, Flux-schnell.- All models from @vivago_ai: Hidream e1.1, Hidream l1 full, Hidream l1 fast, Hidream l1 dev.- All models

1

3

6

You can now generate Wan 2.2 videos in seconds with @replicate and @PrunaAI!. We will release more details and models soon ;).

Replicate and @PrunaAI join forces to bring Wan2.2 to life!. A major upgrade in cinematic quality, smoother movements, and instruction following.

0

0

5

RT @PrunaAI: 🧑🏫 AI Efficiency Fundamentals - Week 4: Quantization. We see quantization everywhere, but do you know the difference between….

0

4

0

RT @PrunaAI: 🔥 Deploy custom AI models with Pruna optimization speed + @lightningai LitServe serving engine! Lightning-Fast AI Deployments!….

0

3

0

From Wan video to Wan Image: We built the fastest endpoint for generating 2K images!. - Accessible on @replicate : - Check details, examples, and benchmarks in our blog: - Use Pruna AI to compress more AI models:.

lnkd.in

This link will take you to a page that’s not on LinkedIn

📷 Introducing Wan Image – the fastest endpoint for generating beautiful 2K images!. From Wan Video, we built Wan Image which generates stunning 2K images in just 3.4 seconds on a single H100 📷. Try it on @replicate: Read our blog for details, examples,

0

2

6

RT @PrunaAI: 🚀 𝗣𝗿𝘂𝗻𝗮 𝘅 @𝗴𝗼𝗸𝗼𝘆𝗲𝗯 𝗣𝗮𝗿𝘁𝗻𝗲𝗿𝘀𝗵𝗶𝗽 𝗨𝗽𝗱𝗮𝘁𝗲!. 🔥 Early adopters are reporting great results from our lightning-fast inference platfor….

0

3

0

Super happy to welcome @sdiazlor in the team!.

Say hello to Sara Han, the newest member of our Developer Advocacy team!. With a laptop and his puppy on her lap, she'll help build connections between Pruna and developers to make models faster, cheaper, smaller and greener. Her time at @argilla_io and @huggingface, combined

0

0

2

How to make AI endpoints having less CO2 emissions? 🌱. One solution is to use endpoints with compressed models. This is particularly important when endpoints run at scale.

🌱 Compressing a single AI model endpoint can save 2t CO2e per year! In comparison, a single EU person consumes ~10t CO2 per year. Last week, our compressed Flux-Schnell endpoint on @replicate has run 𝟮𝗠 𝘁𝗶𝗺𝗲𝘀 𝗼𝗻 𝗛𝟭𝟬𝟬 𝗼𝘃𝗲𝗿 𝟮 𝘄𝗲𝗲𝗸𝘀. For each run, the model

0

0

0

RT @PrunaAI: 🧑🏫 AI Efficiency Fundamentals - Week 1: Large Language Architectures. Do you know the difference between Autoregressive, Diff….

0

1

0