All In Memory

@AllInRam

Followers: 106 · Following: 105 · Media: 4 · Statuses: 82

Exploring the implications of diskless distributed systems and their open source implementations.

Joined May 2015

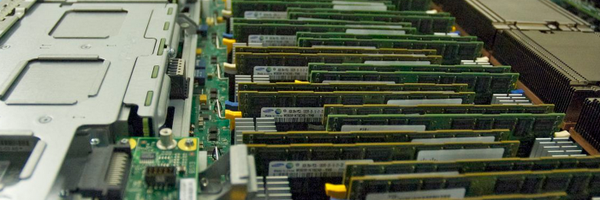

X1 instances from EC2: 2TB of in-memory computing (doubling the price of their earlier most expensive machine):

aws.amazon.com

Better Memory, from CACM, nice overview of NVRAM and its future impact: https://t.co/DTdNhfvRjn

Non-volatile Storage: Implications of the Datacenter's Shifting Center: https://t.co/d3K9VML7UH

You might want to re-read this every year: What Every Programmer Should Know About Memory, by Ulrich Drepper

MarketsAndMarkets predicts the growth of in-memory computing to $23.5 Billion by 2020: http://t.co/E2YrJbWny3

@marketsandmarkets

Other big in-memory news from #awsreinvent - the launch of X1 instances with 2TB of RAM - built with dbs like Apache Spark and HANA in mind

Amazon SPICE: "columnar storage, in-memory technologies... machine code generation, & data compression" http://t.co/Yxq2UjYTII

#awsreinvent

Amazon SPICE: Super-fast Parallel In-Memory Computation Engine, backs the newly launched AWS QuickSight. #awsreinvent

Amazon could cut prices and launch a new in-memory database at re:Invent: http://t.co/wS3dEjvMu8

These days, "in-memory" can mean (1) using memory-optimized techniques, or (2) being too lazy to implement the out-of-core code paths...

"...the server should have anywhere from 512 GB to 1 TB of main memory as a cache for the GPU memory" -@toddmostak

http://t.co/SSH5ZGdo77

In-memory computing "expected to grow from $2.21 billion in 2013 to $13.23 billion in 2018", a compound annual growth rate of 43%: http://t.co/LmGdhNGcLn

Feral Concurrency Control: http://t.co/mnlM9GbAS5 from @pbailis at SIGMOD2015

Thanks to everyone who came to my talk at @spark_summit about Spark performance! Slides now available here: http://t.co/lIFL3iAMCY

Investigating @ApacheSpark's performance: http://t.co/g0llIqAEhq

DBx1000 - a single-node OLTP DBMS to develop concurrency schemes on future systems with many, many cores: http://t.co/w4UTkbU2kW
