Akhil Vaid

@AkhilVaidMD

Followers: 159

Following: 63

Media: 26

Statuses: 71

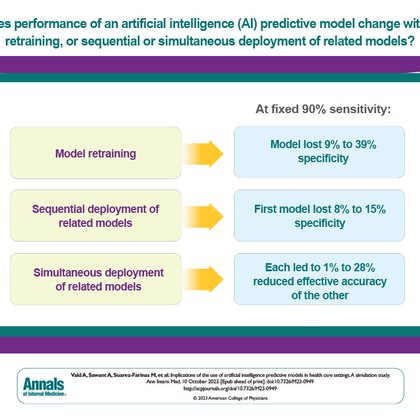

It's a model eat model world: ANY deployed model can confound the current operation and future development of other models, and eventually render itself unusable. Out now in the Annals of Internal Medicine:

acpjournals.org

Background: Substantial effort has been directed toward demonstrating uses of predictive models in health care. However, implementation of these models into clinical practice may influence patient...

3

10

24

RT @girish_nadkarni: Thank you @NIHDirector for highlighting our #ai study for right ventricular assessment using #ecg @AkhilVaidMD Sonny D…

0

9

0

RT @LancetDigitalH: NEW Comment from Akhil Vaid and colleagues: "Using fine-tuned large language models to parse clinical notes in musculos…

thelancet.com

Musculoskeletal disorders like lower back, knee, and shoulder pain create a substantial health burden in developed countries—affecting function, mobility, and quality of life. These conditions are...

0

3

0

Out now in Lancet Digital Health, we demonstrate the utility of special-purpose Large Language Models for parsing clinical text:

thelancet.com

Musculoskeletal disorders like lower back, knee, and shoulder pain create a substantial health burden in developed countries—affecting function, mobility, and quality of life. These conditions are...

1

3

10

RT @IcahnMountSinai: .@AkhilVaidMD discusses research suggesting that predictive models can become a victim of their own success — sending…

0

3

0

RT @IcahnMountSinai: Researchers from @IcahnMountSinai and @UMich find that using predictive models to adjust how care is delivered can alt…

0

6

0

RT @EricTopol: When #AI models eat models: to avoid data drift, retraining can degrade performance, based on simulation of 130,000 ICU admi…

0

24

0