Mehmet Akdel

@AkdelMehmet

Followers: 135
Following: 223
Media: 3
Statuses: 53

Joined September 2020

Lots of cool DL tools for PPI complex prediction, but are we evaluating them fairly across practical use cases? 🤔 We (@vant_ai, @nvidia, @MIT_CSAIL) built PINDER to address this. Can we generalize to novel interfaces, binding modes, etc.? Let's find out! (1/n)

Rewriting Protein Alphabets with Language Models

1. A groundbreaking study introduces TEA (The Embedded Alphabet), a novel 20-letter alphabet derived from protein language models, enabling highly efficient large-scale protein homology searches. This method achieves sensitivity

We're thrilled to partner with @HaldaTx to expand the reach of their groundbreaking RIPTAC platform. Together, we're working to identify new therapeutic target-effector combinations that will help enable this innovative proximity-based approach to unlock new treatment

Excited to finally share what we’ve been working on for the past year: Neo-1, a unified model for all-atom structure prediction and generation of both proteins and other molecules! 🧬🔬

Announcing Neo-1: the world’s most advanced atomistic foundation model, unifying structure prediction and all-atom de novo generation for the first time - to decode and design the structure of life 🧵(1/10)

Join us tomorrow to hear @peter_skrinjar talk about 🌹Runs N' Poses🌹! Curious to hear your thoughts on how to keep up with benchmarking in this field. More info:

We’re hosting @peter_skrinjar for a webinar to discuss his latest paper “Have protein co-folding methods moved beyond memorization?” 🚀 Peter will discuss the limitations of current co-folding models, the challenges of memorization in ligand pose prediction, and what is needed

🚀 Don't miss this week's talk with @jeremyWohlwend and @GabriCorso where they will talk about their new model Boltz-1. 🗓️Friday - 4pm CET / 10am ET

📢 Join us for a talk with @jeremyWohlwend & @GabriCorso on their recent paper Boltz-1 - a new open source SOTA for 3D structure prediction of biomolecular complexes. As always with @mmbronstein & @befcorreia
When: Fri, Dec 06 - 4p CET/10a ET
Sign-up: https://t.co/lBY0bTsTtM

Great lecture today in our @biozentrum class by @janani_hex — teaching us how #AlphaFold works and how computational structure prediction can help us explore the vast expanse of the Protein Universe 🚀✨. Thanks Jay!!🙌

Thrilled to receive an @snsf_ch #Ambizione grant to continue my research @biozentrum! Huge thanks to @TorstenSchwede and the team for all the support. The "Picky Binders" will be tackling context-specific interactions in the challenging world of viruses and more!

Congrats to our new SNSF #Ambizione Fellows, Janani Durairaj and Fengjie Wu! @janani_hex will explore variations in viral proteomes & virus evolution. Wu will investigate the dynamics of an important family of cell receptors #GPCR. @UniBasel_en @snsf_ch

https://t.co/iDw34CWvLn

📢 Join us next week for a talk with @ZhonglinJC (@nvidia), @janani_hex (@UniBasel_en) & @dannykovtun (@vant_ai) on P(L)INDER: new benchmarks for protein-protein & protein-ligand interactions. Hosted by @mmbronstein and @befcorreia
📅Fri, Aug 30 - 5p CET/11a ET
📝Sign-up:

Meet us tomorrow at #ICML at 4pm in front of Hall A to chat about AI x Bio evals & data; we have a good chunk of the PINDER & PLINDER team here from @nvidia @vant_ai @mmbronstein

💥 Introducing PINDER & PLINDER

With existing evals saturating without clear advances in real-life downstream tasks, current progress in AI x Bio is primarily rate-limited by better datasets & evals. In two back-to-back preprints, we address this via fantastic academic-industry

Come talk to us at #ICML2024!

Often the optimal training set and data sampling strategy need to be figured out iteratively, by tracking & understanding the reasons behind the errors models make on test samples spanning diverse ranges of difficulty, similarity to the training set, etc. This is not trivial.

#PLINDER eval scripts measure the performance enrichment as a function of multiple similarity metrics (protein, ligand, interactions, etc). We make this easy, because reviewers should demand it and readers should not need to guess. https://t.co/fx96zh1ajq

📈 One standard graph in all bio deep learning papers should be: max similarity to anything in training set vs performance. (Reviewers shouldn't have to guess if there might be overfitting issues).
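
The plot proposed here (max similarity to anything in the training set vs. performance) reduces to two steps: score each test system by its highest similarity to any training system, then aggregate performance within similarity bins. The sketch below is hypothetical and is not the PLINDER eval scripts' API: k-mer Jaccard similarity stands in for whatever real metric you would use (protein, ligand or interaction similarity), and the function names and bin edges are illustrative assumptions.

```python
from statistics import mean

# Hypothetical helpers: k-mer Jaccard similarity is only a stand-in for a real
# metric such as sequence identity, ligand similarity or interface similarity.


def kmers(seq: str, k: int = 3) -> set[str]:
    """Return the set of overlapping k-mers in a sequence."""
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard index of two sets; 0.0 when both are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def max_train_similarity(test_seq: str, train_seqs: list[str], k: int = 3) -> float:
    """Highest similarity between one test item and anything in the training set."""
    test_kmers = kmers(test_seq, k)
    return max((jaccard(test_kmers, kmers(t, k)) for t in train_seqs), default=0.0)


def performance_by_similarity(test_items, train_seqs, edges=(0.0, 0.3, 0.5, 0.7, 1.01)):
    """Average a per-system score within bins of max training-set similarity.

    test_items: iterable of (sequence, score) pairs.
    edges: ascending bin edges; each bin covers [lo, hi). The last edge is
    slightly above 1.0 so that identical items (similarity 1.0) are counted.
    Returns {(lo, hi): (mean_score, n_systems)} for non-empty bins only.
    """
    bins = {(lo, hi): [] for lo, hi in zip(edges, edges[1:])}
    for seq, score in test_items:
        sim = max_train_similarity(seq, train_seqs)
        for lo, hi in bins:
            if lo <= sim < hi:
                bins[(lo, hi)].append(score)
                break
    return {b: (mean(scores), len(scores)) for b, scores in bins.items() if scores}


if __name__ == "__main__":
    train = ["MKTAYIAKQR", "GSHMLEDPVA"]
    test = [("MKTAYIAKQR", 0.9), ("WWWWWWWWWW", 0.2)]
    print(performance_by_similarity(test, train))
```

Reporting the number of systems per bin alongside the mean matters: a high average in a nearly empty low-similarity bin says little about generalization.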

Thanks @dannykovtun, @agoncear, @ShinaYusuf_, @ZhonglinJC, @OleinikovasV, @xavier_robin, @clemensisert, @GabriCorso, @hannesstaerk, @emaros96, @akdelmehmet, @zwcarpenter, @mtt_gra, @mmbronstein, @TorstenSchwede, @NaefLuca! Stay tuned for news from @workshopmlsb!

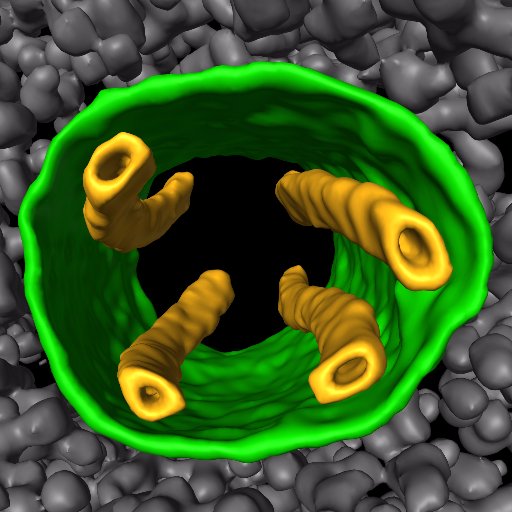

💡Performance decreases on test systems whose chains are similar to those in training systems, indicating a lack of generalizability to novel binding modes (C: green vs blue) 💡Physics-based methods dropped substantially in real-world scenarios using unbound/AF2 input (D) (8/n)

5️⃣With @NVIDIA’s BioNeMo team we showed 💡Training on structure splits = more generalizable models (A: pink vs green) 💡Training diversity is crucial for success (A: blue vs pink) 💡AFmm struggles with unseen interfaces (B) (7/n)

4️⃣Robust Evaluation Harness: We enable fast calculation of CAPRI metrics across diverse inputs (unbound, predicted and bound) and varying difficulty classes. (6/n)
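
For context, CAPRI metrics rank a predicted complex using fnat (fraction of native interface contacts recovered), LRMS (ligand RMSD after superposing the receptor) and iRMS (interface RMSD). The sketch below applies the commonly used CAPRI quality thresholds, assuming those three values have already been computed for each prediction; the function names are hypothetical and this is not the PINDER evaluation harness itself.

```python
# Hypothetical helpers illustrating the standard CAPRI quality classes.
# Inputs are assumed to be precomputed: fnat in [0, 1], LRMS and iRMS in Å.

CLASS_ORDER = ["Incorrect", "Acceptable", "Medium", "High"]


def capri_class(fnat: float, lrms: float, irms: float) -> str:
    """Return the highest CAPRI class whose criteria the model satisfies."""
    if fnat >= 0.5 and (lrms <= 1.0 or irms <= 1.0):
        return "High"
    if fnat >= 0.3 and (lrms <= 5.0 or irms <= 2.0):
        return "Medium"
    if fnat >= 0.1 and (lrms <= 10.0 or irms <= 4.0):
        return "Acceptable"
    return "Incorrect"


def success_rate(models, min_class: str = "Acceptable") -> float:
    """Fraction of (fnat, lrms, irms) tuples classified at or above min_class."""
    if not models:
        return 0.0
    threshold = CLASS_ORDER.index(min_class)
    hits = sum(CLASS_ORDER.index(capri_class(*m)) >= threshold for m in models)
    return hits / len(models)
```

Computing `success_rate` separately per input type (bound, unbound, predicted) and per difficulty class gives the kind of breakdown described in the tweet.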

We also provide smaller subsets for scenarios where inference is expensive, as well as subsets deleaked with respect to AF-multimer training, allowing a fair comparison without expensive compute. (5/n)
