Eric Zhang

@16BitNarwhal

Followers: 2K · Following: 14K · Media: 154 · Statuses: 419

making music @suno prev @meta @ideogram_ai @ibm

Boston

Joined December 2015

took a break from coding and decided to spontaneously make a song with @16BitNarwhal - check it out! https://t.co/LTwpDrOCFY

detailed paper on LMCache internals just dropped. it covers KV-cache optimization, compute/I/O overlap, dynamic offloading, the KV-cache connector interface, and overall inference infra design. a must-read if you're into ML systems and inference.

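The dynamic-offloading idea mentioned above can be illustrated with a toy two-tier cache: a small fast tier (standing in for GPU memory) evicts its least-recently-used KV entries to a larger slow tier (standing in for CPU memory) and promotes them back when they are reused. This is a minimal sketch under that assumption; `TwoTierKVCache` and its methods are hypothetical names, not LMCache's API, and real systems move tensors asynchronously so that I/O overlaps with compute.

```python
from collections import OrderedDict


class TwoTierKVCache:
    """Toy two-tier KV cache (hypothetical, not LMCache's API).

    A bounded 'GPU' tier keeps entries in LRU order; when it overflows,
    the least-recently-used entry is offloaded to an unbounded 'CPU' tier.
    """

    def __init__(self, gpu_capacity: int):
        self.gpu_capacity = gpu_capacity
        self.gpu = OrderedDict()  # prefix key -> KV blob, oldest first
        self.cpu = {}             # offloaded entries

    def put(self, key, kv):
        self.cpu.pop(key, None)   # never keep the same key in both tiers
        self.gpu[key] = kv
        self.gpu.move_to_end(key)  # mark as most recently used
        self._offload_if_needed()

    def get(self, key):
        if key in self.gpu:
            self.gpu.move_to_end(key)  # refresh recency on a hit
            return self.gpu[key]
        if key in self.cpu:
            kv = self.cpu.pop(key)     # "load" the entry back onto the fast tier
            self.put(key, kv)
            return kv
        return None                    # miss: the caller would recompute prefill

    def _offload_if_needed(self):
        while len(self.gpu) > self.gpu_capacity:
            old_key, old_kv = self.gpu.popitem(last=False)  # evict the LRU entry
            self.cpu[old_key] = old_kv
```

For example, with `gpu_capacity=2`, putting keys `a`, `b`, `c` offloads `a`; a later `get("a")` promotes it back and offloads `b` instead.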

pushed a change to pytorch that makes cudagraphs 1ms faster. turns out that for every module, pytorch checks whether it's in a list. i made it a set and now prod is 5% faster lol

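A minimal sketch of why the list-to-set swap helps: `x in some_list` scans elements one by one (O(n) per check), while `x in some_set` is a hash lookup (O(1) on average), so doing one check per module drops from quadratic to roughly linear total work. The names below are hypothetical stand-ins, not the PyTorch code that was actually changed.

```python
import timeit

# Hypothetical stand-ins for "modules seen so far"; this mirrors the idea in
# the post, not the actual PyTorch code that was changed.
modules = [object() for _ in range(2_000)]
registered_list = list(modules)  # membership test scans: O(n) per lookup
registered_set = set(modules)    # membership test hashes: O(1) average per lookup


def count_registered(container) -> int:
    """Run one membership check per module, like a per-module bookkeeping pass."""
    return sum(1 for m in modules if m in container)


if __name__ == "__main__":
    # Both containers give the same answer; only the cost differs.
    assert count_registered(registered_list) == count_registered(registered_set)
    t_list = timeit.timeit(lambda: count_registered(registered_list), number=3)
    t_set = timeit.timeit(lambda: count_registered(registered_set), number=3)
    print(f"list: {t_list:.4f}s  set: {t_set:.4f}s")
```

Exact timings depend on the machine; the point is that the list version grows with the square of the module count while the set version grows linearly.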

if you have a paper at #NeurIPS2025, you should try listening to your paper as a song

paper2song.com

Hear research differently. 5000+ NeurIPS 2025 papers transformed into songs. Search by topic, discover ideas through music, and share what inspires you.

gonna be in san diego for neurips with the suno team, pls dm! i'd love to meet new friends and talk about inference or music or just hang out :)

1

0

14

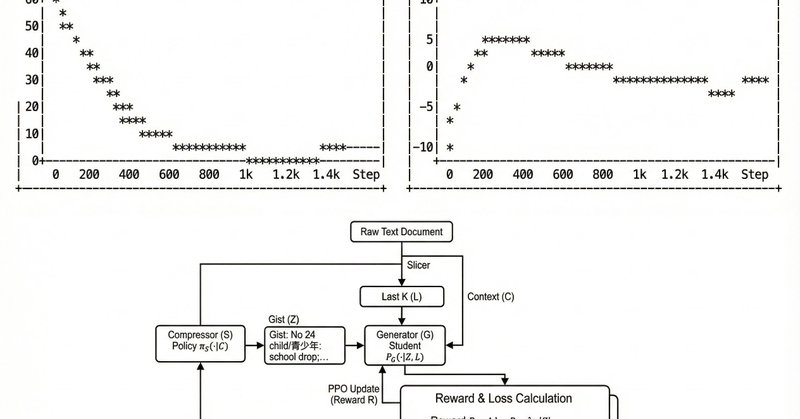

in order to have research agents that can run for days, we need context compaction. i used RL to have LLMs naturally learn their own 10x compression! Qwen learned to pack more info per token (e.g. use Mandarin tokens, prune text). read the technical blog:

rajan.sh

By framing compression as a constrained optimization problem, LLMs can use RL to invent their own compression schemes and effectively increase their context window.

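One way to read "compression as a constrained optimization problem" as an RL reward: treat task correctness as a hard constraint and the compression ratio as the objective. The function below is a hypothetical sketch under that assumption (the name, the 10x target, and the saturation rule are illustrative choices), not the reward used in the linked blog.

```python
def compaction_reward(answer_correct: bool, original_tokens: int,
                      compressed_tokens: int, target_ratio: float = 10.0) -> float:
    """Hypothetical reward for RL-trained context compaction.

    Constraint: the compressed context must still let the model answer the
    task correctly; otherwise the rollout earns nothing.
    Objective: a higher compression ratio earns more, capped at target_ratio
    so the policy is not pushed to compress beyond the target.
    """
    if compressed_tokens <= 0:
        raise ValueError("compressed context must contain at least one token")
    if not answer_correct:
        return 0.0  # constraint violated: information needed for the task was lost
    ratio = original_tokens / compressed_tokens
    return min(ratio, target_ratio) / target_ratio  # in (0, 1]
```

Under this sketch, compressing 1000 tokens to 100 with a correct answer earns the full reward of 1.0, compressing only to 500 earns 0.2, and any wrong answer earns 0.0 regardless of length.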

i must move to nyc

NYSRG reads about data systems :) Thanks to Gim and Marek from @SunoMusic for the space to host us; it was our first time at the suno nyc office

Training LLMs end-to-end is hard. Very excited to share our new blog (book?) that covers the full pipeline: pre-training, post-training and infra. 200+ pages of what worked, what didn't, and how to make it run reliably https://t.co/iN2JtWhn23

introducing gpuup: you no longer have to put any effort into setting up the CUDA toolkit + drivers on a node (single or multi-GPU). just copy-paste a short command (in replies)
