Zirui Chen

@ziruichen44

Followers: 53
Following: 10
Media: 2
Statuses: 10

RT @michaelfbonner: Excited to announce that this paper is now out in @ScienceAdvances

science.org

Probing neural representations reveals universal aspects of vision in artificial and biological networks.

RT @michaelfbonner: Can we gain a deep understanding of neural representations through dimensionality reduction? Our new work shows that th…

arxiv.org

How does the human brain encode complex visual information? While previous research has characterized individual dimensions of visual representation in cortex, we still lack a comprehensive...

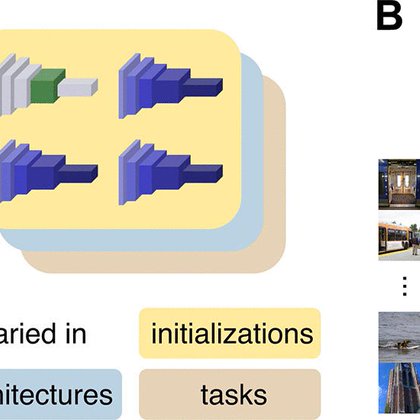

Why do varied DNN designs yield equally good models of human vision? Our preprint with @michaelfbonner shows that diverse DNNs represent images with a shared set of latent dimensions, and these shared dimensions turn out to also be the most brain-aligned.

arxiv.org

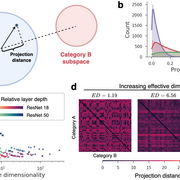

Do neural network models of vision learn brain-aligned representations because they share architectural constraints and task objectives with biological vision or because they learn universal...

RT @michaelfbonner: New paper out in @PLOSCompBiol. The best deep neural network models of visual cortex do not reduce representations to l…

journals.plos.org

Author summary: The effective dimensionality of neural population codes in both brains and artificial neural networks can be far smaller than the number of neurons in the population. In vision, it has...
