Yu-Xiang Wang

@yuxiangw_cs

Followers

3K

Following

973

Media

171

Statuses

873

Faculty @hdsiucsd, director of S2ML lab. Visitor @awscloud. Prev @ucsbcs @SCSatCMU. Researcher in #machinelearning, #reinforcementlearning, #differentialprivacy

Joined August 2021

Open educational materials can often go a long way. I benefitted from countless such materials myself (e.g., those from @GilStrangMIT, Steve Boyd & @AndrewYNg). Now it's time to contribute back. Here we go -- 52 videos from three grad-level courses I taught at UCSB. Enjoy.

4

62

314

RT @lyang36: 🚨 Olympiad math + AI: We ran Google’s Gemini 2.5 Pro on the fresh IMO 2025 problems. With careful prompting and pipeline desi…

0

118

0

Okay. So the proposed safe AGI is like the “Trisolarans” from the Three-Body Problem, and humanity’s last stand is our ability to plot and scheme? 🤣 (Sorry for the spoilers to those who haven’t read the series yet…)

A simple AGI safety technique: AI’s thoughts are in plain English, just read them. We know it works, with OK (not perfect) transparency! The risk is fragility: RL training, new architectures, etc. threaten transparency. Experts from many orgs agree we should try to preserve it:

2

1

12

Wonder how online learning helps statistical estimation? Sunil Madhow and @_dheeraj_b will present AKORN at West Exhibition Hall B2-B3 W-1006. At a high level, it provides an appropriate **attention map** that optimally balances bias and variance locally. Come see us now!

1

0

9

I am offering a course on *Safety in Generative AI* at UCSD next fall. I'd like to focus on the most important problems today. Mighty #AcademicTwitter: what are some topics that you think I must cover?

5

3

29

RT @jxmnop: new paper from our work at Meta! **GPT-style language models memorize 3.6 bits per param**. we compute capacity by measuring t…

0

383

0

RT @xuandongzhao: 🚀 Excited to share the most inspiring work I’ve been part of this year: "Learning to Reason without External Rewards"…

0

512

0

RT @rui_xin31: Think PII scrubbing ensures privacy? 🤔 Think again‼️ In our paper, for the first time on unstructured text, we show that you…

0

21

0

An upcoming workshop on "Inference Optimization for Generative AI" organized by a few awesome people I worked with at AWS. If you are going to #KDD2025, don't miss the workshop! Link to CfP:

2

2

11

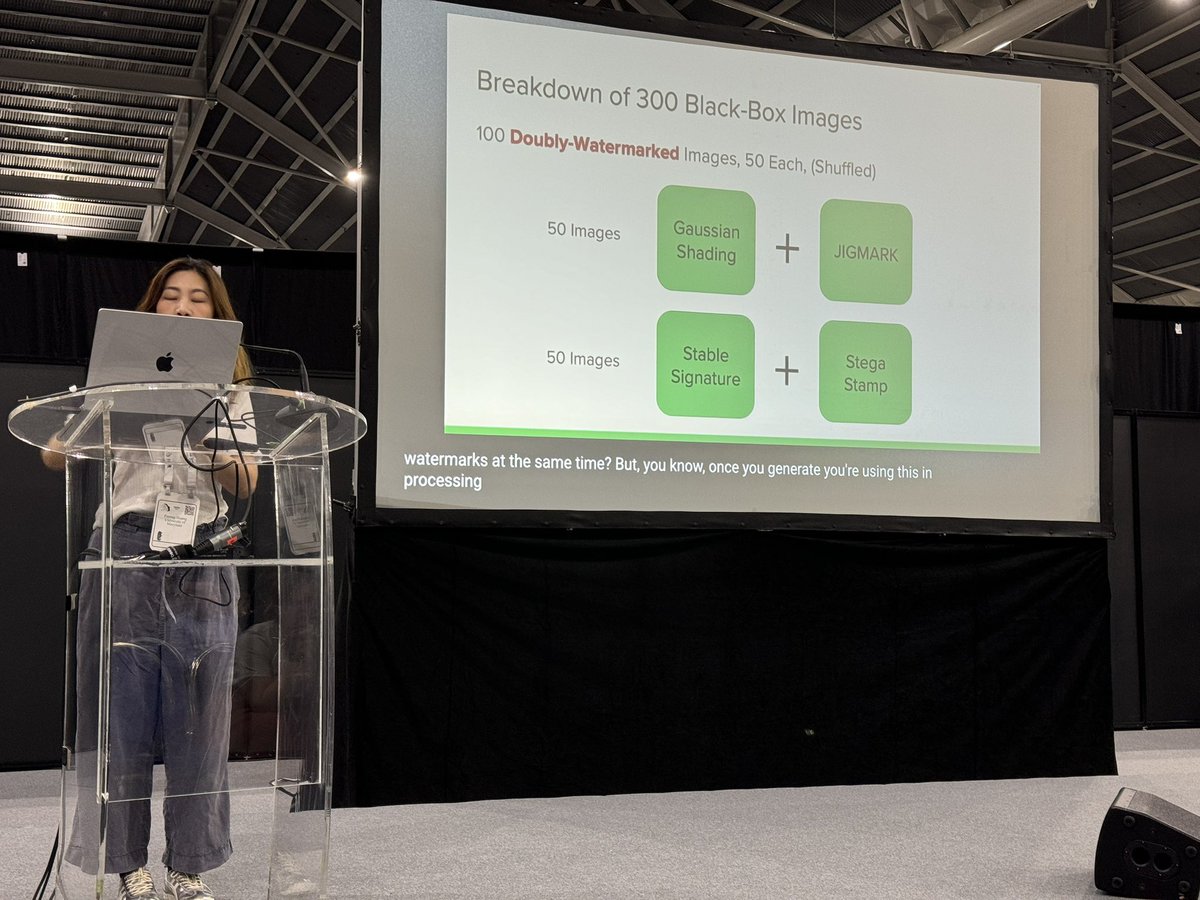

Okay. Time to reveal. The winning attacks for the black-box track involved humans eyeballing the images for their unique artifacts (small blurry patterns), guessing the associated watermark, and applying the known effective attacks from the white-box track.

The secret is revealed! The six ways images were watermarked in the blackbox track of the #NeurIPS2024 competition for watermark removal attacks. Guess what the most successful attacks were?

2

3

14

The secret is revealed! The six ways images were watermarked in the blackbox track of the #NeurIPS2024 competition for watermark removal attacks. Guess what the most successful attacks were?

0

0

9

It’s very fitting that the watermarking workshop at #ICLR starts with Scott Aaronson. Interesting program for the rest of the day too.

0

0

11

Curious about the state of watermarking and its role in AI safety? I will be at #ICLR Poster 237 (Hall 3) from 3pm this afternoon. Come and have a chat!

0

4

26