Yihong Chen

@yihong_thu

Followers

213

Following

199

Media

1

Statuses

47

The constant happiness is curiosity.

London, UK

Joined May 2015

RT @LiuChunan: Presented our project "AsEP: Benchmarking Deep Learning Methods for Antibody-specific Epitope Prediction" at #ISMB2024 in Mo….

0

2

0

RT @karpathy: @maurosicard This information was never released but I'd expect it was a lot more. In terms of multipliers let's say 3X from….

0

14

0

RT @kchonyc: we all want to and need to be prepared to train our own large-scale language models from scratch. why?. 1. transparency or….

0

101

0

RT @xinchiqiu: This is a great collaboration with 2 amazing collaborators from Cambridge, UCL and FAIR Meta.@williamfshen and @yihong_thu ;….

0

2

0

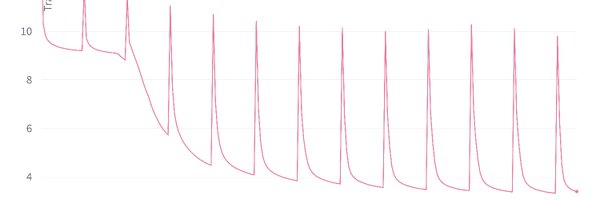

Active forgetting 2307.01163 ( finds its new frontier with @surajk610 et al's cool work on structural in-context learning for LMs! Scaling makes LMs powerful but forgetting gives them plasticity -- accepting and adapting to novel XYZ quickly. #AI.

How robust are in-context algorithms? In new work with @michael_lepori, @jack_merullo, and @brown_nlp, we explore why in-context learning disappears over training and fails on rare and unseen tokens. We also introduce a training intervention that fixes these failures.

1

0

9

RT @niclane7: This week at @iclr_conf catch up with Wanru (@Renee42581826), PhD student @CaMLSys and @Cambridge_CL, who has led some import….

0

2

0

RT @robertarail: I’ll be at #ICLR2024 on Saturday for the LLM Agents Workshop Panel 🚀. Some of my collaborators will also be there through….

0

10

0

RT @flwrlabs: 🌼 Flower AI Summit 2024: The world's largest federated learning conference is about to start 🏃🏼♀️🏃🏽. 📺 Watch live now: https….

0

3

0

RT @Kseniase_: How to teach a language model a new language without retraining?. The key: periodic forgetting. A recent research paper dem….

0

86

0

Quanta covers our work on regular embedding resetting for language plasticity. Worth noting that language plasticity is not the only type of plasticity current language models need. Wish to see more insights into AI plasticity from @nikishin_evg @oh_that_hat @clarelyle.

To learn more flexibly, a new machine learning model selectively forgets what it already knows. @settostun reports:

1

7

23

I am very sure @riedelcastro beats AI in this sense!.

I often feel that the main reason I made it through school was my quite extraordinary forgetfulness. I really had to rederive everything all the time. @yihong_thu showed how this trait can also help language models in her NeurIPS paper Now on Quanta!.

0

0

10

RT @ylecun: @shimon8282 If you really want a large collection of ugly slide decks, look here:.

0

27

0

RT @zhu_zhaocheng: 📜 Check out our latest blog post where we reflect on the past year's research in tool use and reasoning with Large Langu….

towardsdatascience.com

It's the beginning of 2024 and ChatGPT just celebrated its one-year birthday. One year is a super long time for the community of large...

0

11

0

for curious mind, here are some slides

docs.google.com

Improving Language Plasticity via Pretraining with Active Forgetting NeurIPS 2023 Yihong Chen 1

1

1

6

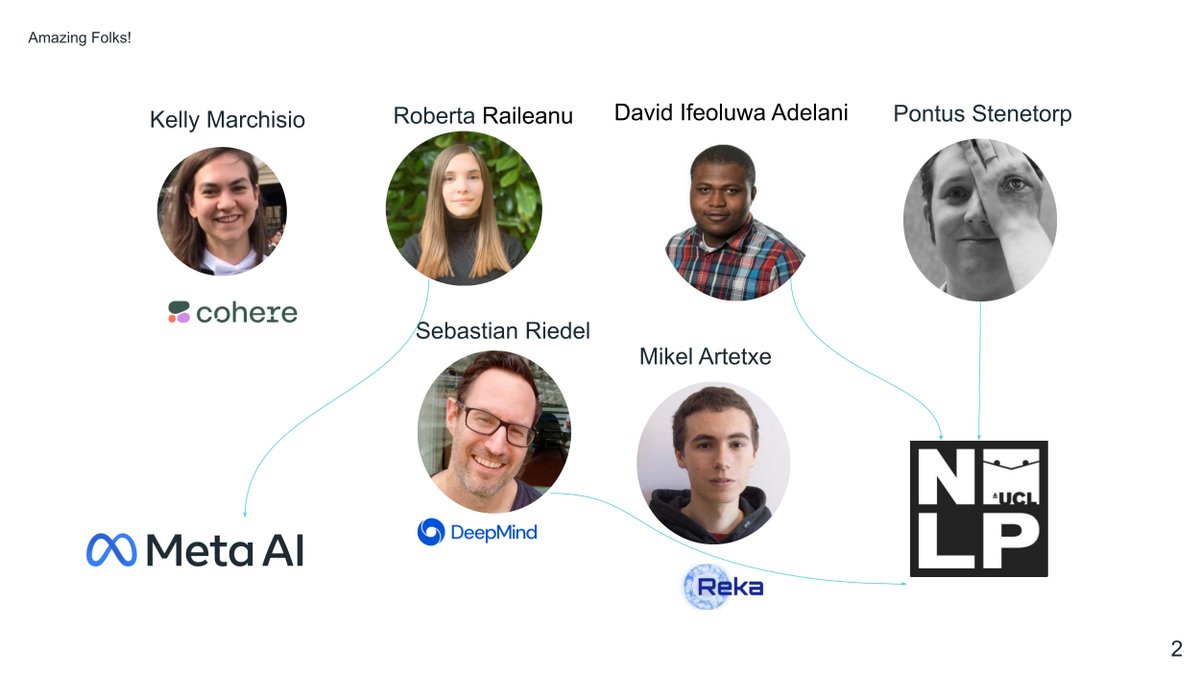

work done with a couple of amazing folks: @cheeesio, @robertarail, @davlanade, Pontus Stenetorp, @riedelcastro and @artetxem.

1

0

5

If you're deciding which #NeurIPS23 poster to check out tomorrow, don't forget our forgetting paper! Visit poster #328 Thursday morning to dive into the world of active forgetting. Discover how it enhances language models with greater language plasticity. See you there!

2

14

48

RT @oanacamb: 🚨💫We are delighted to have @shishirpatil_ at our @uclcs NLP Meetup *Monday 1st Nov 6:30pm GMT* . The event will be *hybrid*….

0

7

0

RT @lena_voita: [1/7] A new paper (and a blog post!) with @javifer_96 and @christofernal!. Neurons in LLMs: Dead, N-gram, Positional. Blog:….

0

65

0